Hunting leaks in GTK+ application used to be fairly simple: you would run your application under Purify (or, later, Valgrind) and the leak reports would pretty much tell you where to go plug.

That was a long time ago. In the meantime, GTK+ has gotten more complex over its iterations with more caches, more inter-object links, and deliberately-unfreed objects. On top of that, Valgrind and Purify are not particular well suited for finding the cause of leaks: by design they will tell you the backtrace of the call that allocated memory which was never leaked. In a ref-counted world that information is often quite insufficient: the leaked widget was allocated by the gui builder — oh goodie! What you really want to know is who holds the extra ref.

Enter the gobject debugger first introduced by Danielle here. After some major internal work, it has become mature.

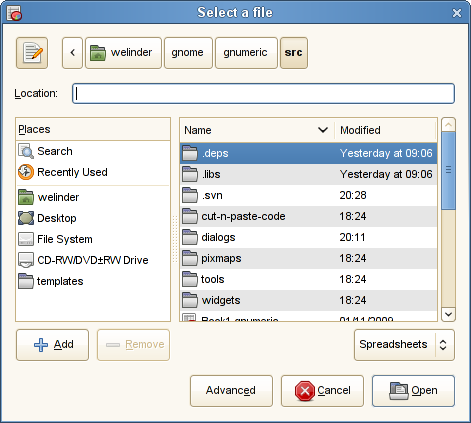

I used this for Gnumeric, which was already one of the strictest leak-policed applications in GTK+ land. We leaked a number of GtkTreeModel/GtkListStore objects, for example. Easily fixed. Also, when touching print code or file choosers we leaked massively: one object per printer and/or two objects per file in the current directory. A sequence of bug reports (646815, 646462, 646446, 646461, 646460, 646458, 646457, and 645483) later, GTK+ is now behaving much better. All but the last of these have been fixed. With this, we are down to leaking about 20 Gtk-related objects: the recent-documents manager, im-module objects, the default icon factory, and theme engines. Basically stuff that GTK+ does not want to release.

Please try this on your applications, especially if they are long-running. I still have to kill gnome-terminal, banshee, metacity, and gvfsd from time to time when they grow to absurd sizes. That doesn’t have to be caused by GObject leaks, but it might very well. (I know some of these samples are obsolete; I am not naive enough to believe their replacements would fare any better.)

This might be a good time to remind people that g_object_get and gtk_tree_model_get will give you a reference when you use them to retrieve GObjects. You need to unref when done. The problem is that it is not immediately clear from the g_object_get/gtk_tree_model_get call whether existing code is getting objects, so a certain knowledge of the code is needed.