Disclaimer: Dear Phoronix, this post is totally for you.

First, a graph

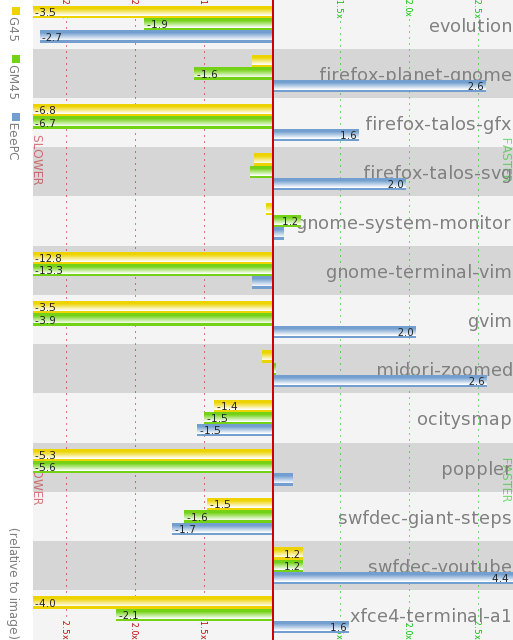

This graph compares the relative performance of the xlib backend on various computers with an Intel GPU. It shows the performance of using the X server compared to just using the computer’s CPU for rendering the real-world benchmarks we Cairo people compiled. The red bar in the center is the time the CPU renderer takes. If the bar goes to the left, using the X server is slower; if it goes to the right, using the X server is faster. Colors represent the different computers the benchmark ran on (see the legend on the left side), and the numbers tell you how much slower or faster the test ran. So the first number, “-3.5”, means that the test ran 3.5x slower using the X server than using just the CPU. And the graph shows very impressively that the GPU is somewhere between 13 times slower and 5 times faster than the CPU, depending on the task. Even for the exact same task, it can be between 7 times slower and 2 times faster depending on the computer it’s running on. So should you use the GPU for what you do?
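The sign convention used for the bars can be stated precisely. Below is a minimal Python sketch, assuming each number is derived from a trace's run time on the CPU (image) backend and its run time on the backend under test; the function name `signed_speedup` and the sample timings are hypothetical, not taken from cairo's tools or from the measured data.

```python
def signed_speedup(cpu_seconds: float, backend_seconds: float) -> float:
    """Express a backend's run time relative to the CPU (image) backend.

    Positive values mean the backend is that many times faster,
    negative values mean it is that many times slower (so -3.5 = 3.5x slower).
    Equal times are reported as 1.0.
    """
    if cpu_seconds <= 0 or backend_seconds <= 0:
        raise ValueError("timings must be positive")
    if backend_seconds > cpu_seconds:
        # Slower than the CPU: the bar points left, the factor is negative.
        return -(backend_seconds / cpu_seconds)
    # Faster than (or as fast as) the CPU: the bar points right.
    return cpu_seconds / backend_seconds


# Hypothetical timings in seconds, not measured results.
print(signed_speedup(2.0, 7.0))   # -3.5
print(signed_speedup(10.0, 5.0))  # 2.0
```

With these made-up timings, 2 s on the CPU against 7 s through the X server gives -3.5, the same kind of reading as the first bar in the graph.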

Well, what’s actually benchmarked here?

The benchmarks are so-called traces recorded using cairo-trace APPLICATION. This tool will be available with Cairo 1.10. It works a bit like strace in that it intercepts all cairo calls your application makes. It records them to a file, which can later be played back for benchmarking rendering performance. So far we’ve taken various test cases we considered important and added them to our test suite. They contain everything from watching a video on YouTube (swfdec-youtube) to running Mozilla’s Talos test suite and scrolling in gnome-terminal. The cairo-traces README explains them all. The README also explains how to contribute traces, and we’d love to have more useful traces.

But GL!

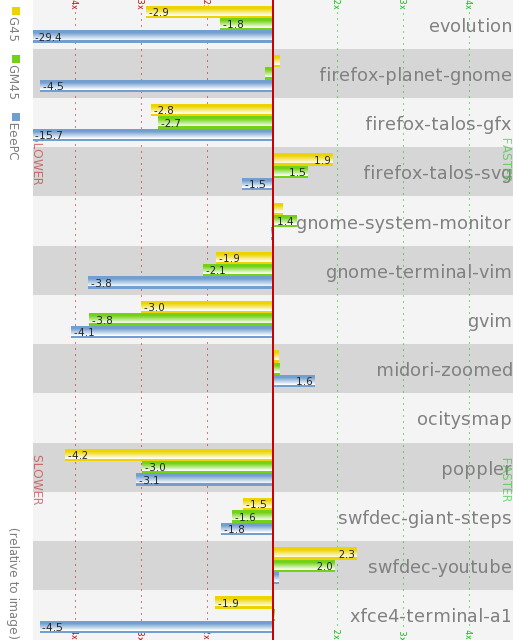

Yes, OpenGL is apparently the solution to all problems with rendering performance. Which is why I worked hard in recent weeks to improve Cairo’s GL backend. The same graph as above, just using GL instead of Xlib looks like this:

What do we learn? Yes, it’s equally confusing, ranging from 30 times slower to 3 times faster. And the same test can be 1.5x slower or twice as fast depending on the hardware. So it’s the same mess all over again. We cannot even say whether using the CPU is better or worse than using the GPU on the same machine. Take the EeePC results for the firefox-planet-gnome test: Xlib is 2.5x faster, GL is 4.5x slower. Yay.

But that’s only Intel graphics

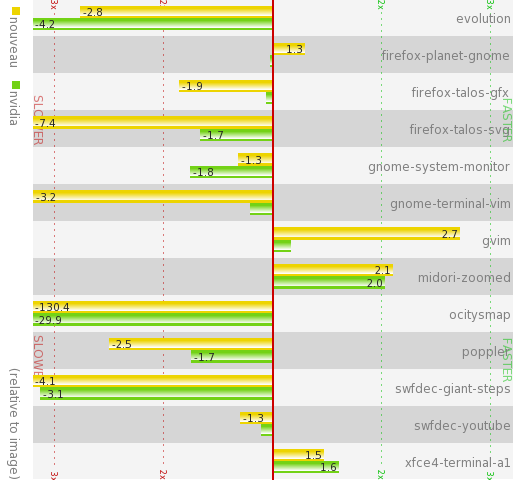

Yeah, we’re all working on Intel chips in Cairo development. Which might be related to the fact that we all either work for Intel or get laptops with Intel GPUs. Fortunately, Chris had a Netbook with an nvidia chip where he ran the tests on – once with the nvidia binary driver and once with the Open Source nouveau driver. Here’s the Xlib results:

Right, that looks as inconclusive as before. Maybe a tad worse than on Intel machines. And nouveau seems to be faster than the binary nvidia driver. Good work! But surely for GL, nvidia GPUs will rule the world, in particular with the nvidia binary driver. Let’s have a look:

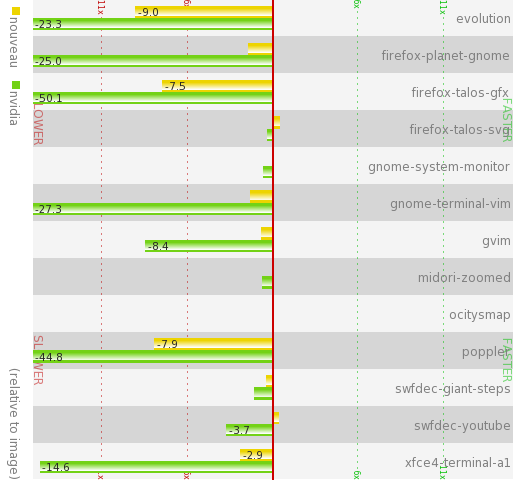

Impressive, isn’t it? GL on nvidia is slow. There’s not a single test where using the CPU would be noticeably slower. And this is on a netbook, where CPUs are slow. And the common knowledge that the binary drivers are better than nouveau isn’t true either: nouveau is significantly better. But then, we had a good laugh because we convinced ourselves that Intel GPUs seem to be a far better choice than nVidia GPUs currently.

That’s not enough data!

Now here’s the problem: to have a really, really good laugh, we need to be sure that Intel is better than nVidia and ATI. And we don’t have enough data points to prove that conclusively yet. So if you have a bunch of spare CPU cycles and are not scared of building development code, you could run these benchmarks on your machine and send the results to me. That way, you help the Cairo team get a better overview of where we stand and a good idea of which areas need improvement the most. Here’s what you need to do:

- Make sure your distro is up to date. Recent releases, like Fedora 13 or Ubuntu Lucid, are necessary. If you run development trees of everything, that’s even better.

- Start downloading http://people.freedesktop.org/~company/stuff/cairo-traces.tar.bz2. It’s a 103MB file that contains the benchmark traces from the cairo-traces repository.

- Install the development packages required to build Cairo. Commands like `apt-get build-dep cairo` or `yum-builddep cairo` do that.

- Install development packages for OpenGL.

- Check out current Cairo from git using `git clone git://anongit.freedesktop.org/cairo`.

- Run `./autogen.sh --enable-gl` in the checked-out cairo directory. In the summary output at the end, ensure that the Xlib and the GL backends will be built.

- Run `make`.

- Change into the `perf/` directory.

- Run `make cairo-perf-trace`.

- Unpack cairo-traces.tar.bz2, which has hopefully finished downloading by now, using `tar xjf cairo-traces.tar.bz2`. This will create a `benchmark` directory containing the traces.

- Run `` CAIRO_TEST_TARGET=image,xlib,gl ./cairo-perf-trace -r -v -i3 benchmark/* > `git describe`-`date '+%Y%m%d'`.`hostname`.perf ``. Ideally, don’t use the computer while the benchmark runs. It will output statistics to the terminal while it runs and generate a .perf file named something like “1.9.8-56-g1373675-20100624.lvs.perf”.

- Attach the file to an email and send it to otte@gnome.org, preferably with information on your CPU, GPU, and the distribution and drivers you ran the test on.
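The output file name in the benchmark command is assembled by the shell from `git describe`, today's date and the host name. The following is a minimal Python sketch of that naming scheme, handy if you rename or collect result files by hand; the helper `perf_filename` is hypothetical and not part of cairo.

```python
from datetime import date


def perf_filename(describe: str, day: date, hostname: str) -> str:
    """Mimic `git describe`-`date '+%Y%m%d'`.`hostname`.perf (hypothetical helper)."""
    # date '+%Y%m%d' prints e.g. 20100624; strftime accepts the same format codes.
    return f"{describe}-{day.strftime('%Y%m%d')}.{hostname}.perf"


print(perf_filename("1.9.8-56-g1373675", date(2010, 6, 24), "lvs"))
# 1.9.8-56-g1373675-20100624.lvs.perf
```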

Note: The test runs will take a while. Depending on your drivers and CPU, they’ll take between 15 minutes and a day; it takes about half an hour on my six-month-old X200 laptop.

What’s going to happen with all the benchmarks?

Depending on the feedback I get, I intend to either weep into a pillow because no one took the time to run the benchmarks, write another blog post with pretty graphics, or even give a presentation about it at Linux conferences. But most of all, I intend to make us developers learn from it, so that we can improve drivers and libraries and the tests run 10x faster the next time you upgrade your distro.

Note: I originally read the nvidia graph wrong and assumed the binary drivers were faster for OpenGL. I double-checked my data: This is not true. nouveau is faster for the Cairo workloads than the nvidia driver.

24 comments

Hi Ben,

Thanks, interesting tests. I’ll be sure to study them more closely in the morning and likely will be linking to your blog post.

Cheers,

Michael Larabel

(Phoronix.com)

The crazy thing is that the benchmarks where the image backend loses are all gradient-bound. And that’s an area where pixman can be really substantially sped up.

Well on my computer I have a GeForce 8400 GS and a 82915G/GV/910GL, but I think that Intel chip is one generation older than you are interested in. Also, they are both awful cards even on Windows.

If you’re interested I can run them sometime though.

What about a hybrid mode where both the CPU and GPU are used to get the maximum amount of performance. X could have some fine-grained options for specifying which operations to run on the CPU and which on the co-processor^W^WGPU and users could benchmark and get some more speed. Hmmmm NUMA.

Sorry, can’t provide results as the xlib firefox-planet-gnome bench killed my X… (radeon driver)

[mi] EQ overflowing. The server is probably stuck in an infinite loop.

Will the benchmark run on Mac OS X? I have a 2006 MacBook with an Intel GMA950, and rumour has it Mac OS X does on-the-fly clang/llvm compilation to emulate missing GL APIs, so it might be faster than Ubuntu on my machine =)

@n: I’m interested in any run of the benchmark, they all can provide surprising insights. Though it’ll probably take a while to run them on your computer. ;)

@foo: Hybrid modes are a complicated thing. First of all, you often don’t know where something is faster, but more importantly, probably the most costly thing to do is transferring data between the CPU and the GPU. So you must do either everything on the CPU or everything on the GPU, or the transfer costs will kill you.

@Pascal: Uh oh. Be sure to file bugs about it, or you won’t be able to view Planet GNOME soon…

Also, you can skip the test if you don’t include it in the traces to run.

@Dmitrij: Yes it should run on OS X, though I haven’t tested it personally. But we have people on IRC that ran it there. And on Windows. The only thing we don’t have yet is code to support Apple GL, but even comparing our quartz backend to the image backend might provide insightful results.

Well, anholt claims that comparing the image backend to xlib/gl isn’t fair, so he ended up comparing gl to xlib, see:

http://anholt.livejournal.com/42146.html

drago01: What Eric claims is that it isn’t entirely fair because the final blit onto the screen is not accounted for. This is most likely a strawman, though, as a single image upload doesn’t make up for really slow rendering of the image into the buffer. And he knew that, otherwise he’d have fixed our benchmark. He just wanted to get rid of the image backend, because next to it the GL work looks a lot less impressive. ;)

It’s an interesting comparison though as the first graph in Eric’s post shows the performance of the GL backend relative to the image backend 4 months ago, while the yellow and green bar in the second graph in this post show a comparable setup today (though they use different scales). And it shows GL has caught up in quite a few tests, yay!

Also don’t forget that pixman is also not standing still:

http://lists.freedesktop.org/archives/xorg-announce/2010-April/001288.html ;)

So the relative benchmark results may differ a lot depending on whether pixman 0.16.x or 0.18.x was used for CPU rendering in the tests. And to make this CPU vs. GPU race even more exciting, it should be mentioned that pixman does not use more than one CPU core at the moment.

The latest Intel driver (2.12) (released two days ago, http://lists.freedesktop.org/archives/intel-gfx/2010-June/007218.html) has many 2D performance improvements, it might be worth trying it.

These results are very interesting, and the benchmarks that you have created are IMO quite needed if we want to improve the performance of applications and libraries on Linux.

However, I think your conclusions are inaccurate. The basic conclusion of these results is:

cairo-gl_nvidia_nouveau ran slower than cairo-gl_intel, which ran slower than cairo-xlib_gpu, which ran slower than cairo-xlib_cpu

The leap from this to “GPU is slower than CPU for this workload” or “Nvidia chipsets are slower than Intel chipsets for this workload” makes several assumptions which may or may not be true.

Hmm, I’m not seeing these results with the nvidia driver. With the exception of the ‘ocitysmap’ test, which is pathological because it uses an enormous pixmap that exceeds the GPU’s maximum renderable dimensions, the xlib results were faster across the board on a Fermi with a 3 GHz dual-core Pentium D. On my ION netbook, the only test that was slower was evolution. This is despite the slightly unfair comparison and the notorious difficulty of accelerating RENDER trapezoids. The GPU could probably do a *lot* better with an API that lets the GPU munch on the complete path description instead of a bazillion tiny trapezoids.

Note that cairo from git master requires pixman 0.17.5 or later, which is not available in Ubuntu 10.04. Ubuntu 10.10 has pixman 0.18.2, which is buildable under Ubuntu 10.04. Also, it would be interesting to see what effect, if any, the use of XCB has.

@Aaron: Yeah, improving the RENDER protocol is the logical next step, unless we decide to move to GL rendering across the board and stop actively developing RENDER. And yes, ocitysmap is a test that checks how well the tiling implementation works so is rather pathological. It’s interesting to know though.

Also, would you mind sending me the results of your benchmark runs?

@Alex: The biggest difference between Xcb and Xlib will be that they use two very different implementations inside Cairo: Xlib is the stable backend that has to work, and Xcb is the experimental prototyping one. I wouldn’t expect much difference if you just switched the Xlib backend to use Xcb calls or vice versa.

@Benjamin: I seem to recall hearing that XCB hides latency through asynchronous calls, and I also seem to recall Cairo being praised for having an XCB backend. Let’s see… here it is: http://vignatti.wordpress.com/2010/06/15/toolkit-please-xlib-xcb/

On the other hand, there’s this: http://julien.danjou.info/blog/index.html#Thoughts%20and%20rambling%20on%20the%20X%20protocol

I’m not sure what to make of all this myself.

Hi, are you sure the cairo-perf-trace script is working as expected? I followed the instructions and it failed (with an undefined symbol) when it tried to run the gl backend tests.

I read its contents and saw that it puts ‘/usr/lib’ before ‘/path/to/cairo/src/.libs/’ in LD_LIBRARY_PATH. When I changed the order, it started working and the results were considerably different, especially for the image backend.

In my experience, when hardware-accelerated GL is this much slower than CPU rendering, the problem is generally not with the actual rendering processes (rasterization, texturing, etc.). If hardware rendering performance is this abysmal, it’s usually due to a software fallback in the driver, or to things like updating textures through an inefficient mechanism (for example a frame buffer copy), re-generating buffers every time you draw, or using immediate mode. I think the problem, then, is that a GL rendering backend for a complex and full-featured library like cairo is probably not easy to do efficiently, because there are very few guarantees about persistence of data in the backend.

With very few exceptions, the actual process of drawing antialiased/textured/lit/shaded primitives is blazingly fast on pretty much any GPU (otherwise, why would we have GPUs?) compared to pretty much any CPU. My guess is that in your tests the overhead before you actually get to the drawing is so large that it completely destroys performance.

I would love to see these benchmarks with an additional ‘copy-to-pixmap’ stage, where it would be necessary to actually see the results…

I’ve certainly found that, in practice, using the image backend is much slower than the xlib backend.

What I’d love to see in cairo would be an ‘I don’t care about accuracy’ mode, where you could have a more efficient, hardware-accelerated backend that may not produce accurate results (but will basically look right). This would be so handy for realtime graphics/visualisations…


I finally got around to sending Benjamin my results. I also posted a graph of the results at http://people.freedesktop.org/~aplattner/cairo-perf-trace

I wasn’t sure how he created his graphs, so I just used OpenOffice and graphed them as performance relative to the image backend on the same platform. E.g., a value of 0.5 means it’s half as fast and 2.0 means it’s twice as fast.
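Because these graphs use a plain ratio while the graphs in the post use a signed factor, the numbers are not directly comparable. Below is a small Python sketch for converting between the two, assuming ratio = image time / backend time (so 0.5 means half as fast) and the post's convention that -2.0 means twice as slow; the function names are hypothetical.

```python
def ratio_to_signed(ratio: float) -> float:
    """Convert a speed ratio (2.0 = twice as fast, 0.5 = half as fast) to a signed factor."""
    if ratio <= 0:
        raise ValueError("ratio must be positive")
    # Faster (or equal) stays as-is; slower becomes the negated slowdown factor.
    return ratio if ratio >= 1.0 else -1.0 / ratio


def signed_to_ratio(signed: float) -> float:
    """Convert a signed factor (+2.0 = twice as fast, -2.0 = twice as slow) back to a ratio."""
    if signed >= 1.0:
        return signed
    if signed <= -1.0:
        return -1.0 / signed
    # Values strictly between -1 and 1 cannot occur in the signed convention.
    raise ValueError("signed factor must be >= 1.0 or <= -1.0")


print(ratio_to_signed(0.5))  # -2.0
print(signed_to_ratio(-2.0))  # 0.5
```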

I omitted the ocitysmap test because it currently hits a bug that makes it take longer than I wanted to wait.

I ran the benchmarks on a Fermi GF100 in a system with a Pentium D 3 GHz, and on an ION netbook that has an MCP79 with an Atom N280. I ran both of them with the current release driver, 256.35.

$ make cairo-perf-trace

…

cairo-boilerplate-svg.c:243: warning: ‘_cairo_boilerplate_svg_cleanup’ defined but not used

cairo-boilerplate-svg.c:255: warning: ‘_cairo_boilerplate_svg_force_fallbacks’ defined but not used

CC cairo-boilerplate-test-surfaces.lo

CC cairo-boilerplate-constructors.lo

CXXLD libcairoboilerplate.la

libtool: link: unsupported hardcode properties

libtool: link: See the libtool documentation for more information.

libtool: link: Fatal configuration error.

make[1]: *** [libcairoboilerplate.la] Error 1

make[1]: Leaving directory `/home/ciupicri/3rdparty-projects/cairo/boilerplate’

make: *** [../boilerplate/libcairoboilerplate.la] Error 2

I’m using an up-to-date Fedora 13 x86_64 box (all installed packages updated).

@Cristian: That is libtool-speak for “please install a C++ compiler”. I’ve just fixed the code to hopefully not require a C++ compiler anymore.

So if you just run “git pull origin” in your cairo checkout, it should all work. If not, install g++.

@Benjamin Otte: thanks! The last source code update made everything work, although it would have been nicer if configure had complained about the missing C++ compiler from the beginning.

Anyway, I started the benchmark, but some of the GL Firefox tests took a lot of time on my Nvidia card (1000 seconds/test!), so I’ll have to run them some other time. The strange thing is that the image and xlib tests run pretty fast, in less than one minute.