tl;dr: Passim is a local caching server that uses mDNS to advertise files by their SHA-256 hash. Named after the Latin word for “here, there and everywhere”, it might save a lot of people a lot of money.

Introduction

Much of the software running on your computer that connects to other systems over the Internet needs to periodically download metadata or other information needed to perform other requests.

As part of running the passim/LVFS projects I’ve seen how downloading this “small” file once per 24h turns into tens of millions of requests per day, which is about 10TB of bandwidth! Everybody downloads the same file from a CDN, and although a CDN is not super-expensive, it’s certainly not free. Everybody on your local network (perhaps dozens of users in an office) has to download the same 1MB blob of metadata from a CDN over a perhaps-non-free shared internet link.

What if we could download the file from the Internet CDN on one machine, and the next machine on the local network that needs it instead downloads it from the first machine? We could put a limit on the number of times it can be shared, and on the maximum age, so that we don’t store yesterday’s metadata forever, and so that we don’t turn a ThinkPad X220 into a machine distributing 1Gb/s to every other machine in the office. We could cut the CDN traffic by at least one order of magnitude, but possibly much more. This is better for the person paying the cloud bill, the person paying for the internet connection, and the planet as a whole.

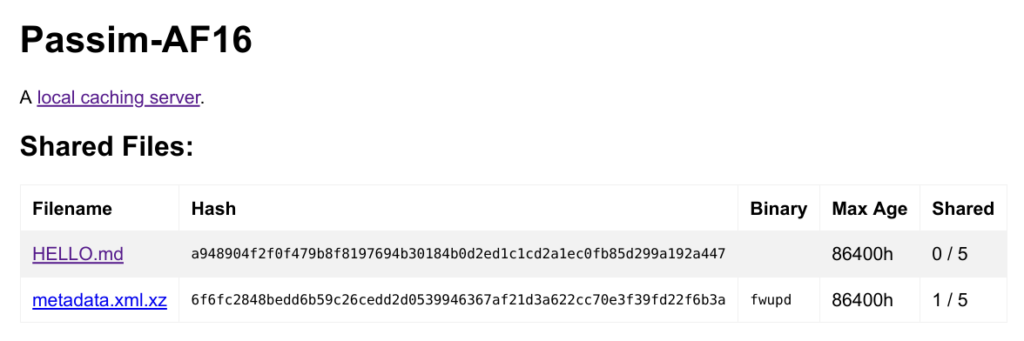

This is what Passim might be. You automatically or manually add files to the daemon, which stores them in /var/lib/passim/data with xattrs set on each file for the max-age and share-limit. When the file has been shared more than the share limit number of times, or is older than the max age, it is deleted and no longer advertised to other clients.
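The expiry rule described above can be sketched in a few lines. This is only an illustration, not the daemon's code: the post does not name the xattr keys, so the sketch uses a hypothetical in-memory `CachedItem` record instead of reading real xattrs, and it follows the wording literally (shared *more than* the limit) for the boundary case.

```python
import time
from dataclasses import dataclass
from typing import Optional


@dataclass
class CachedItem:
    """Hypothetical in-memory view of one cached file and its limits."""
    sha256: str
    added_at: float       # Unix timestamp when the file was added
    max_age: int          # seconds the file may be kept
    share_limit: int      # number of times the file may be shared
    share_count: int = 0  # number of times it has been shared so far


def should_delete(item: CachedItem, now: Optional[float] = None) -> bool:
    """Return True once the item is too old or has been shared too often."""
    if now is None:
        now = time.time()
    too_old = (now - item.added_at) > item.max_age
    # Assumption: "shared more than the share limit" is a strict comparison.
    over_shared = item.share_count > item.share_limit
    return too_old or over_shared
```

An item that fails this check would be removed from disk and dropped from the mDNS advertisement in the same step, so other clients stop asking for it.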

The daemon then advertises the availability of the file as an mDNS service subtype and provides a tiny single-threaded HTTP v1.1 server that supplies the file over HTTPS using a self-signed certificate.
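To make the "hash as a service subtype" idea concrete, here is a rough sketch of how such a name could be formed. The exact scheme Passim uses is not described in this post, so everything here is an assumption: the `_cache._tcp` service type is a placeholder, and truncating the digest is just one way to cope with the fact that a DNS label is limited to 63 octets while a hex SHA-256 digest is 64 characters long.

```python
MAX_DNS_LABEL = 63  # maximum length of a single DNS label, in octets


def subtype_label(sha256_hex: str) -> str:
    """Hypothetical subtype label: '_' plus as much of the digest as fits."""
    digest = sha256_hex.strip().lower()
    if len(digest) != 64 or any(c not in "0123456789abcdef" for c in digest):
        raise ValueError("expected a 64-character hex SHA-256 digest")
    # '_' + 64 hex characters is 65 octets, so the label has to be cut short.
    return ("_" + digest)[:MAX_DNS_LABEL]


def subtype_service_name(sha256_hex: str, service: str = "_cache._tcp") -> str:
    """Build a DNS-SD style '<subtype>._sub.<service>' name (placeholder service)."""
    return f"{subtype_label(sha256_hex)}._sub.{service}"
```

Because a truncated label no longer carries the full digest, a client following this sketch would still need to request the file with the complete hash and verify the payload itself.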

The file is sent when requested from a URL like https://192.168.1.1:27500/filename.xml.gz?sha256=the_hash_value – any file requested without the checksum will not be supplied. Although this is a chicken-and-egg problem where you don’t know the payload checksum until you’ve checked the remote server, this is solved using a tiny <100 byte request to the CDN for the payload checksum (or a .jcat file) and then the multi-megabyte (or multi-gigabyte!) payload can be found using mDNS. Using a Jcat file also means you know the PKCS#7/GPG signature of the thing you’re trying to request. Using a Metalink request would work as well I think.
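As a small illustration of the request format above, the helper below builds such a URL from a peer address, a file name and the checksum obtained from the CDN. The function name and its argument validation are my own for this sketch; only the URL shape and the port come from the example above.

```python
from urllib.parse import quote, urlencode


def build_passim_url(host: str, basename: str, sha256_hex: str, port: int = 27500) -> str:
    """Return a URL of the form https://host:port/basename?sha256=<hash>."""
    digest = sha256_hex.strip().lower()
    if len(digest) != 64 or any(c not in "0123456789abcdef" for c in digest):
        raise ValueError("expected a 64-character hex SHA-256 digest")
    query = urlencode({"sha256": digest})
    # quote() percent-encodes characters that are not safe in a URL path.
    return f"https://{host}:{port}/{quote(basename)}?{query}"
```

A client would first fetch the checksum (or the .jcat file) from the CDN, look for a peer advertising that hash over mDNS, and only then request the URL built here, falling back to the CDN if no peer answers.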

Sharing Considerations

Here we’re assuming your local network (aka LAN) is a nice and friendly place, without evil people trying to overwhelm your system or feed you fake files. Although we request files by their hash (and thus can detect tampering) and we hopefully also use a signature, it still uses resources to send a file over the network.

We’ll assume that any network with working mDNS (as implemented in Avahi) is good enough to get metadata from other peers. If Avahi is not running, or mDNS is turned off on the firewall then no files will be shared.

The cached index is available to localhost without any kind of authentication as a webpage on https://localhost:27500/.

Only processes running as UID 0 (a.k.a. root) can publish content to Passim. Before sharing everything, bear in mind that the effects of sharing can be subtle: if you download a security update for a Lenovo P1 Gen 3 laptop and share it with other laptops on your LAN, it also tells any attacker [with a list of all possible firmware updates] on your local network your laptop model, and also that you’re running a system firmware that isn’t currently patched against the latest firmware bug.

My recommendation here is only to advertise files that are common to all machines. For instance:

- AdBlocker metadata

- Firmware update metadata

- Remote metadata for update frameworks, e.g. apt-get/dnf etc.

Implementation Considerations

Any client MUST calculate the checksum of the supplied file and verify that it matches. There is no authentication or signature verification done, so this step is non-optional. A malicious server could advertise the hash of firmware.xml.gz but actually supply evil-payload.exe, and you do not want that.
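A minimal sketch of that client-side check, using only the Python standard library. The function names are illustrative and not part of any Passim API; the file variant reads in chunks so that multi-gigabyte payloads do not need to fit in memory.

```python
import hashlib


def sha256_matches(payload: bytes, expected_hex: str) -> bool:
    """Compare the SHA-256 of an in-memory payload with the expected digest."""
    return hashlib.sha256(payload).hexdigest() == expected_hex.strip().lower()


def file_sha256_matches(path: str, expected_hex: str, chunk_size: int = 1 << 20) -> bool:
    """Hash a downloaded file in chunks and compare with the expected digest."""
    hasher = hashlib.sha256()
    with open(path, "rb") as f:
        # iter() with a sentinel keeps calling f.read() until it returns b"".
        for chunk in iter(lambda: f.read(chunk_size), b""):
            hasher.update(chunk)
    return hasher.hexdigest() == expected_hex.strip().lower()
```

If either function returns False the download should be discarded and fetched again from the CDN, never used.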

Comparisons

The obvious comparison to make is IPFS. I’ll try to make this as fair as possible, although I’m obviously somewhat biased.

IPFS

- Existing project that’s been around for many years and tested by many people

- Allows sharing with other users not on your local network

- Not packaged in any distributions and not trivial to install correctly

- Requires a significant time to find resources

- Does not prioritize local clients over remote clients

- Requires an internet-to-IPFS “gateway” which cost me a lot of $$$ for a large number of files

Passim

- New project that’s not even finished

- Only allows sharing with computers on your local network

- Returns results within 2s

One concern we had specifically with IPFS for firmware was the ITAR/EAR legal considerations, e.g. we couldn’t share firmware containing strong encryption with users in some countries, which is actually most of the firmware the LVFS distributes. From an ITAR/EAR point of view Passim would be compliant (as it only shares locally, presumably in the same country) and IPFS certainly is not.

There’s a longer README in the git repo. There’s also a test patch that wires up fwupd with libpassim although it’s not ready for merging. For instance, I think it’s perfectly safe to share metadata but not firmware or distro package payloads – but for some people downloading payloads on a cellular link might be exactly what they want – so it’ll be configurable. For reference Windows Update also shares content (not just metadata) so maybe I’m worrying about nothing, and doing a distro upgrade from the computer next to them is exactly what people need. Small steps perhaps.

Comments welcome.

EDIT 2023-08-22: Made changes to reflect that we went from HTTP 1.0 to HTTP 1.1 with TLS.

There’s also https://en.wikipedia.org/wiki/Content_centric_networking

We tried NDN at Endless. It was quite a while ago, but at the time, things like flow control were novel/experimental research which weren’t implemented in all of the stacks. It seemed like a bit of a dud really. Not sure whether CCN has advanced any, have you tried it?

Super idea! I wanted to do the same as a plugin for dnf during my time at University but never found time for it. And even if I had, no one would have used it. I’d like to see this widely used in the Linux ecosystem ;)

How would a comparison with BitTorrent (with DHTs) look?

What if LVFS supplied the magnet links or torrents?

Sounds like a whole stack of software already exists for similar purposes?

Torrenting isn’t going to be allowed in many offices, and some ISPs forbid it too.

Why doesn’t it cache files in /var/cache/passim instead?

Cool project!

We could do; although /var/cache seems to be for stuff you can recreate easily — and I’m not sure that’s 100% the case here.

Isn’t it exactly a cache if it’s data that you’d otherwise get from a CDN?

PS: Good project!

The nix team desperately need this. Apparently their s3 costs are unmanageable.

How do you select/discover/elect the server amongst the nodes on the network? At Endless, in a similar (more complex – sharing ostree repos over http) setup, we experimented with bloom filters and advertising the bytes as an mDNS record so that we’d know which hosts on the network to contact to fetch a certain object.

Sorry for the delay, PTO. We do something super basic and just add the sha256 checksum as an mDNS subtype, so this is going to work for < 1024 "large files" but isn't going to work for ~1,000,000 "small files".

This looks similar to rfc2169.