So, systemd-resolved is enabled by default in Fedora 33. Most users won’t notice the difference, but if you use VPNs (or depend on DNSSEC; more on that at the bottom of this post), then systemd-resolved might be a big deal for you. When testing Fedora 33, we found one bug report where a user discovered that systemd-resolved broke his VPN configuration. After this bug was fixed, and nobody reported any further issues, I was pretty confident that migration to systemd-resolved would go smoothly. Then Fedora 33 was released, and I noticed a significant number of users on Ask Fedora and Reddit asking for help with broken VPNs, problems that Fedora 33 beta testers had failed to detect. This was especially surprising to me because Ubuntu has enabled systemd-resolved by default since Ubuntu 16.10, so we were four full years behind Ubuntu here, which should have been plenty of time for any problems to be ironed out. So what went wrong?

First, let’s talk about how things worked before systemd-resolved, so we can see what was wrong and why we needed a change. We’ll see how split DNS with systemd-resolved is different from traditional DNS. Finally, we’ll learn how custom VPN software must configure systemd-resolved to avoid problems that result in broken DNS.

I want to note that, although I wrote the Fedora change proposal and have done some evangelism on behalf of systemd-resolved, I’m not a systemd developer and haven’t contributed any code to systemd-resolved.

Traditional DNS with nss-dns

Let’s first see how things worked before systemd-resolved. There are two important configuration files to discuss. The first is /etc/nsswitch.conf, which controls which NSS modules are invoked by glibc when performing name resolution. Note these are glibc Name Service Switch modules, which are totally unrelated to Firefox’s NSS, Network Security Services, which unfortunately uses the same acronym. Also note that, in Fedora (and also Red Hat Enterprise Linux), /etc/nsswitch.conf is managed by authselect and must not be edited directly. If you want to change it, you need to edit /etc/authselect/user-nsswitch.conf instead, then run sudo authselect apply-changes.

Anyway, in Fedora 32, the hosts line in /etc/nsswitch.conf looked like this:

hosts: files mdns4_minimal [NOTFOUND=return] dns myhostname

That means: first invoke nss-files, which looks at /etc/hosts to see if the hostname is hardcoded there. If it’s not, then invoke nss-mdns4_minimal, which uses avahi to implement mDNS resolution. [NOTFOUND=return] means it’s OK for avahi to not be installed; in that case, it just gets ignored. Next comes nss-dns, which performs a traditional DNS lookup using the servers listed in /etc/resolv.conf. Last is nss-myhostname, which resolves your own local hostname.
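To make the ordering concrete, here is a minimal Python sketch that splits a hosts line into its modules and the bracketed actions attached to them. The function name parse_hosts_line is hypothetical, and the parser only handles the simple syntax shown in this post, not everything glibc accepts.

```python
def parse_hosts_line(line):
    """Split an nsswitch.conf 'hosts:' line into (module, actions) pairs.

    Illustrative only: handles single-token actions such as [NOTFOUND=return].
    """
    database, _, rest = line.partition(":")
    if database.strip() != "hosts":
        raise ValueError("not a hosts line")
    entries = []
    for token in rest.split():
        if token.startswith("[") and token.endswith("]"):
            # A bracketed action applies to the module listed just before it.
            if not entries:
                raise ValueError("action without a preceding module")
            entries[-1][1].append(token[1:-1])
        else:
            entries.append((token, []))
    return entries


fedora32 = "hosts: files mdns4_minimal [NOTFOUND=return] dns myhostname"
for module, actions in parse_hosts_line(fedora32):
    print(module, actions)
```

The modules come back in the order glibc would try them, which is the property that matters for the rest of this post.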

Next, let’s look at /etc/resolv.conf. This file contains a list of up to three DNS servers to use. The servers are attempted in order. If the first server in the list is broken, then the second server will be used. If the second server is broken, the third server will be used. If the third server is also broken, then everything fails, because no matter how many servers you list here, all except the first three are ignored. In Fedora 32, /etc/resolv.conf was, by default, a plain file managed by NetworkManager. It might look like this:

# Generated by NetworkManager

nameserver 192.168.122.1

That’s a pretty common example. It means that all DNS requests should be sent to my router. My router must have configured this via DHCP, causing NetworkManager to dutifully add it to /etc/resolv.conf.
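The three-server limit is easy to overlook, so here is a small Python sketch of it. The function name effective_nameservers and the example addresses (taken from the 192.0.2.0/24 documentation range) are made up for illustration; this only mimics the limit described above and is not how glibc itself is implemented.

```python
MAX_NAMESERVERS = 3  # only the first three nameserver lines are honored


def effective_nameservers(resolv_conf_text):
    """Return the nameservers a traditional resolver would actually use."""
    servers = []
    for raw in resolv_conf_text.splitlines():
        line = raw.strip()
        if not line or line.startswith(("#", ";")):
            continue  # skip blank lines and comments
        fields = line.split()
        if fields[0] == "nameserver" and len(fields) >= 2:
            servers.append(fields[1])
    return servers[:MAX_NAMESERVERS]


example = """\
# Generated by NetworkManager
nameserver 192.0.2.1
nameserver 192.0.2.2
nameserver 192.0.2.3
nameserver 192.0.2.4
"""
print(effective_nameservers(example))
```

The fourth server is silently dropped, which is exactly the failure mode described above.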

Traditional DNS Problems

Traditional DNS is all well and good for a simple case like we had above, but it turns out it’s really broken once you start adding VPNs to the mix. Let’s consider two types of VPNs: a privacy VPN that is always enabled and which is the default route for all web traffic, and a corporate VPN that only receives traffic for internal company resources. (To switch between these two different types of VPN configuration, use the checkbox “Use this connection only for resources on its network” at the bottom of the IPv4 and IPv6 tabs of your VPN’s configuration in System Settings.)

Now, what happens if we connect to both VPNs? The VPN that you connect to first gets listed first in /etc/resolv.conf, followed by the VPN that you connect to second, followed by your local DNS server. Assuming the DNS servers are all working properly, that means:

- If you connect to your privacy VPN first and your corporate VPN second, all DNS requests will be sent to your privacy VPN, and you won’t be able to visit internal corporate websites. (This scenario is exactly why I became interested in systemd-resolved. After joining Red Hat, I discovered that I couldn’t access various redhat.com websites if I connected to my VPNs in the wrong order.)

- If you connect to your corporate VPN first and your privacy VPN second, then all your DNS goes to your corporate VPN, and none to your privacy VPN. As that defeats the point of using the privacy VPN, we can be confident it’s not what users expect to happen.

- If you ever connect the VPNs in the opposite order — say, if your connection to one temporarily drops, and you need to reconnect — then you’ll get the opposite behavior. If you don’t notice this pattern behind the failures, it can make problems difficult to reproduce.

You don’t need two VPNs for this to be a problem, of course. Let’s say you have no privacy VPN, only a corporate VPN. Well, your employer may fire you if it notices DNS requests it doesn’t like. If you’re making 30 requests per hour to facebook.com, youtube.com, or more salacious websites, that sure looks like you’re not doing very much work. It’s really never in the employee’s best interests to send more DNS than necessary to an employer.

If you use only a privacy VPN, the failure case is arguably even more severe. Let’s say your privacy VPN’s DNS server temporarily goes offline. Then, because /etc/resolv.conf is a list, glibc will fall back to using your normal DNS, probably either your ISP’s DNS server, or your router that forwards everything to your ISP. And now your DNS query has gone to your ISP. If you’re making the wrong sort of DNS requests in the wrong sort of countries — say, if you’re visiting websites opposed to your government — this could get you imprisoned or executed.

Finally, either type of VPN will break resolution of local domains, e.g. fritz.box, because only your router can resolve that properly, but you’re sending your DNS query to your VPN’s DNS server. So local resources will be broken for as long as you’re connected to a VPN.

All things considered, the status quo prior to systemd-resolved was pretty terrible. The need for something better should be clear. Now let’s look at how systemd-resolved fixes this.

Modern DNS with nss-resolve

First, let’s look at /etc/nsswitch.conf, which looks a bit different in Fedora 33:

hosts: files mdns4_minimal [NOTFOUND=return] resolve [!UNAVAIL=return] myhostname dns

nss-myhostname and nss-dns have switched places, but that’s just a minor change that ensures your local hostname is always local even if your DNS server thinks otherwise. (March 2021 Update: nss-myhostname has been moved before nss-mdns4_minimal for Fedora 34, so our new configuration is files myhostname mdns4_minimal [NOTFOUND=return] resolve [!UNAVAIL=return] dns.)

The important change here is the addition of resolve [!UNAVAIL=return]. nss-resolve uses systemd-resolved to resolve hostnames, via either its varlink API (with systemd 247) or its D-Bus API (with older versions of systemd). If systemd-resolved is running, glibc will stop there, and refuse to continue on to nss-myhostname or nss-dns even if nss-resolve doesn’t return a result, since both nss-myhostname and nss-dns are obsoleted by nss-resolve. But if systemd-resolved is not running, then it continues on (and, if resolving something other than the local hostname, will end up using nss-dns and reading /etc/resolv.conf, as before).
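The effect of [!UNAVAIL=return] can be sketched in a few lines of Python. This is a simplified model, not glibc’s implementation: the function names are hypothetical, and the status each module returns is supplied by hand rather than produced by a real lookup.

```python
# Default actions: stop on SUCCESS, continue on everything else.
DEFAULT_ACTIONS = {
    "SUCCESS": "return",
    "NOTFOUND": "continue",
    "UNAVAIL": "continue",
    "TRYAGAIN": "continue",
}


def parse_action(text):
    """Turn 'NOTFOUND=return' or '!UNAVAIL=return' into {status: action}."""
    condition, action = text.split("=")
    if condition.startswith("!"):
        # Negation: the action applies to every status except the named one.
        excluded = condition[1:].upper()
        return {s: action for s in DEFAULT_ACTIONS if s != excluded}
    return {condition.upper(): action}


def modules_consulted(chain, status_of):
    """Return the modules invoked, in order, for a single lookup."""
    consulted = []
    for module, action_texts in chain:
        actions = dict(DEFAULT_ACTIONS)
        for text in action_texts:
            actions.update(parse_action(text))
        consulted.append(module)
        if actions[status_of[module]] == "return":
            break
    return consulted


FEDORA_33 = [
    ("files", []),
    ("mdns4_minimal", ["NOTFOUND=return"]),
    ("resolve", ["!UNAVAIL=return"]),
    ("myhostname", []),
    ("dns", []),
]

# systemd-resolved is running but has no answer: the lookup stops at resolve.
running = {"files": "NOTFOUND", "mdns4_minimal": "UNAVAIL", "resolve": "NOTFOUND",
           "myhostname": "NOTFOUND", "dns": "SUCCESS"}
print(modules_consulted(FEDORA_33, running))

# systemd-resolved is not running: glibc falls through to myhostname and dns.
stopped = dict(running, resolve="UNAVAIL")
print(modules_consulted(FEDORA_33, stopped))
```

In the first case nss-dns is never reached, which is why /etc/resolv.conf stops mattering to glibc once systemd-resolved is up.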

Importantly, when nss-resolve is used, glibc does not read /etc/resolv.conf when performing name resolution, so any configuration that you put there is totally ignored. That means any script or program that writes to /etc/resolv.conf is probably broken. /etc/resolv.conf still exists, though: it’s managed by systemd-resolved to maintain compatibility with programs that manually read /etc/resolv.conf and do their own name resolution, bypassing glibc. Although systemd-resolved supports several different modes for managing /etc/resolv.conf, the default mode, and the mode used in both Fedora and Ubuntu, is for /etc/resolv.conf to be a symlink to /run/systemd/resolve/stub-resolv.conf, which now looks like this:

# This file is managed by man:systemd-resolved(8). Do not edit.

#

# This is a dynamic resolv.conf file for connecting local clients to the

# internal DNS stub resolver of systemd-resolved. This file lists all

# configured search domains.

#

# Run "resolvectl status" to see details about the uplink DNS servers

# currently in use.

#

# Third party programs should typically not access this file directly, but only

# through the symlink at /etc/resolv.conf. To manage man:resolv.conf(5) in a

# different way, replace this symlink by a static file or a different symlink.

#

# See man:systemd-resolved.service(8) for details about the supported modes of

# operation for /etc/resolv.conf.

nameserver 127.0.0.53

options edns0 trust-ad

search redhat.com lan

The redhat.com search domain is coming from my corporate VPN, but the rest of this /etc/resolv.conf should look like yours. Notably, 127.0.0.53 is systemd-resolved’s local stub responder. This allows programs that manually read /etc/resolv.conf to continue to work without changes: they will just wind up talking to systemd-resolved on 127.0.0.53 rather than directly connecting to your real DNS server, as before.
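If you want to check which mode your own system is in, a rough Python sketch follows. The mode names are meant to mirror the "resolv.conf mode" line that resolvectl prints, and the target paths are the ones I understand systemd-resolved to document, so treat both as assumptions; the function names are hypothetical.

```python
import os

# Symlink targets and the mode each one is assumed to correspond to.
KNOWN_TARGETS = {
    "/run/systemd/resolve/stub-resolv.conf": "stub",
    "/run/systemd/resolve/resolv.conf": "uplink",
    "/usr/lib/systemd/resolv.conf": "static",
}


def classify_target(target):
    """Map a resolved file path to a mode name; anything else is foreign."""
    return KNOWN_TARGETS.get(target, "foreign")


def classify_resolv_conf(path="/etc/resolv.conf"):
    """Follow the symlink (if any) at path and classify where it points."""
    if not os.path.lexists(path):
        return "missing"
    return classify_target(os.path.realpath(path))


print(classify_resolv_conf())
```

On a default Fedora 33 installation this should report the stub mode. A result of foreign means some other program owns the file, which is a common sign of custom VPN software overwriting it.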

A Word about Ubuntu

Although Ubuntu has used systemd-resolved for four years now, it has not switched from nss-dns to nss-resolve, contrary to upstream recommendations. This means that on Ubuntu, glibc still reads /etc/resolv.conf, finds 127.0.0.53 listed there, and then makes an IP connection to systemd-resolved rather than talking to it via varlink or D-Bus, as occurs on Fedora. The practical effect is that, on Ubuntu, you can still manually edit /etc/resolv.conf and applications will respond to those changes, unlike Fedora. Of course, that would be a disaster, since it would cause all of your DNS configuration in systemd-resolved to be completely ignored. But it’s still possible on Ubuntu. On Fedora, that won’t work at all.

If you’re using custom VPN software that doesn’t work with systemd-resolved, chances are it tries to write to /etc/resolv.conf.

Split DNS with systemd-resolved

OK, so now we’ve looked at how /etc/nsswitch.conf and /etc/resolv.conf have changed, but we haven’t actually explained how split DNS is configured. Instead of sending all your DNS requests to the first server listed in /etc/resolv.conf, systemd-resolved is able to split your DNS on the basis of DNS routing domains.

IP Routing Domains, DNS Routing Domains, and DNS Search Domains: Oh My!

systemd-resolved works with DNS routing domains and DNS search domains. A DNS routing domain determines only which DNS server your DNS query goes to. It doesn’t determine where IP traffic goes to: that would be an IP routing domain. Normally, when people talk about “routing domains,” they probably mean IP routing domains, not DNS routing domains, so be careful not to confuse these two concepts. For the rest of this article, I will use “routing domain” or “DNS domain” to mean DNS routing domain.

A DNS search domain is also different. When you query a name that is only a single label — a domain without any dots — a search domain gets appended to your query. For example, because I’m currently connected to my Red Hat VPN, I have a search domain configured for redhat.com. This means that if I make a query to a domain that is only a single label, redhat.com will be appended to the query. For example, I can query bugzilla and this will be treated as a query for bugzilla.redhat.com. This probably won’t work in your web browser, because web browsers like to convert single-label domains into web searches, but it does work at the DNS level.
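As a rough illustration, the suffixing step can be written as a tiny Python function. The name expand_with_search_domains is hypothetical, and this models only the rule described above (single-label names get each search domain appended); it ignores everything else a real resolver does.

```python
def expand_with_search_domains(name, search_domains):
    """Return the fully qualified candidates for a queried name."""
    if "." in name:
        # Names containing a dot are left alone.
        return [name]
    return [f"{name}.{domain}" for domain in search_domains]


print(expand_with_search_domains("bugzilla", ["redhat.com", "lan"]))
print(expand_with_search_domains("bugzilla.redhat.com", ["redhat.com", "lan"]))
```

With the two search domains from my stub resolv.conf, the single-label name produces one candidate per search domain, while the multi-label name is passed through unchanged.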

In systemd-resolved, each DNS routing domain may or may not be used as a search domain. By default, systemd-resolved will add search domains for every configured routing domain that is not prefixed by a tilde. For example, ~example.com is a routing domain only, while example.com is both a routing domain and a search domain. There is also a global routing domain, ~.
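The tilde convention is simple enough to express in code. This Python sketch uses a hypothetical helper, classify_domains, to sort a list of configured domains into routing domains and search domains according to the rule just described.

```python
def classify_domains(entries):
    """Split configured domains into (routing_domains, search_domains)."""
    routing, search = [], []
    for entry in entries:
        if entry.startswith("~"):
            # Tilde prefix: routing only, never used as a search suffix.
            routing.append(entry[1:])
        else:
            # No tilde: the domain is used for both routing and search.
            routing.append(entry)
            search.append(entry)
    return routing, search


routing, search = classify_domains(["example.com", "~example.org", "~."])
print("routing:", routing)
print("search: ", search)
```

Here "." stands for the global routing domain, which matches every name but is never a search domain.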

Example Split DNS Configurations

Let’s look at a complex example with three network interfaces:

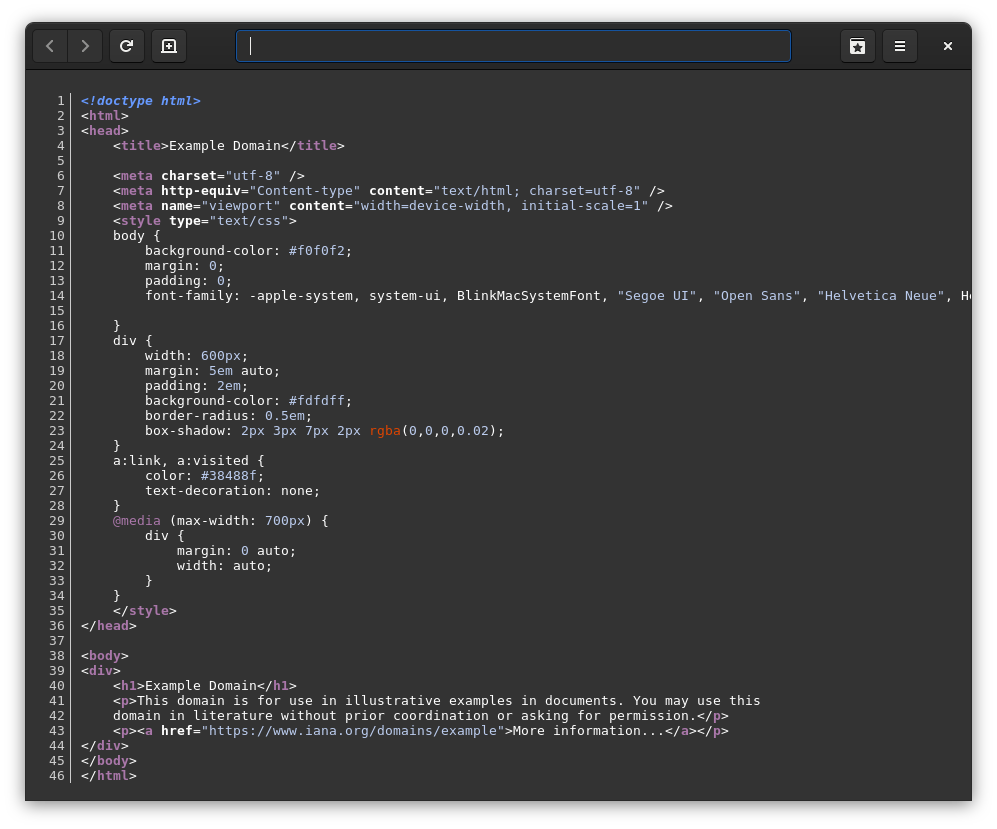

$ resolvectl

Global

Protocols: LLMNR=resolve -mDNS -DNSOverTLS DNSSEC=no/unsupported

resolv.conf mode: stub

Link 2 (enp4s0)

Current Scopes: DNS LLMNR/IPv4 LLMNR/IPv6

Protocols: +DefaultRoute +LLMNR -mDNS -DNSOverTLS DNSSEC=no/unsupported

Current DNS Server: 192.168.1.1

DNS Servers: 192.168.1.1

DNS Domain: lan

Link 5 (tun0)

Current Scopes: DNS LLMNR/IPv4 LLMNR/IPv6

Protocols: +DefaultRoute +LLMNR -mDNS -DNSOverTLS DNSSEC=no/unsupported

Current DNS Server: 10.8.0.1

DNS Servers: 10.8.0.1

DNS Domain: ~.

Link 9 (tun1)

Current Scopes: DNS LLMNR/IPv4 LLMNR/IPv6

Protocols: -DefaultRoute +LLMNR -mDNS -DNSOverTLS DNSSEC=no/unsupported

Current DNS Server: 10.9.0.1

DNS Servers: 10.9.0.1 10.9.0.2

DNS Domain: example.com

To simplify this example, I’ve removed several uninteresting network interfaces from the output above: my unused second Ethernet interface, my unused Wi-Fi interface wlp5s0, and two virtual network interfaces that I presume are used by libvirt. This means we only have three interfaces to consider: normal Ethernet enp4s0, the privacy VPN tun0, and the corporate VPN tun1. I’m currently running NetworkManager 1.26.4, so I have also fudged the output a bit to make it look like it would if I were using NetworkManager 1.26.6 — I’ll discuss the difference below — so that this example will be good for the future. Let’s look at a few points of note:

- enp4s0 is configured with +DefaultRoute and no routing domains.

- tun0 is configured with +DefaultRoute and a global routing domain, ~.

- tun1 is configured with -DefaultRoute and a routing domain for example.com. (It also has a search domain for example.com, because it doesn’t start with a tilde.)

systemd-resolved first decides which network interface is most appropriate for your DNS query based on the domain name you are querying, then sends your query to the DNS server associated with that interface. In this case, queries for example.com, foo.example.com, etc. will be sent to 10.9.0.1, since that is the DNS server configured for tun1, which is associated with the domain example.com. All other requests go to 10.8.0.1, since tun0 has the global domain ~. Nothing ever goes to 192.168.1.1, because a privacy VPN is enabled, and that would be a privacy disaster. Very simple, right?

If you do not use a privacy VPN, you will not have any ~. domain configured. In this case, your query will go to all interfaces that have +DefaultRoute. For example, if tun0 were removed from the above configuration, then queries not for example.com would be sent to 192.168.1.1, my router, which is good because tun1 is my corporate VPN and should only receive DNS queries corresponding to its own DNS domains.
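The selection logic described in the last two paragraphs can be approximated in Python. This is a simplified model under my own assumptions (most specific matching domain wins, with DefaultRoute as the fallback when nothing matches), not a copy of systemd-resolved’s code; Link, match_length, and links_for_query are hypothetical names, and the domains are the ones printed by resolvectl above.

```python
from dataclasses import dataclass, field


@dataclass
class Link:
    name: str
    dns_servers: list
    domains: list = field(default_factory=list)  # as printed by resolvectl
    default_route: bool = True


def match_length(query, domain):
    """Number of labels of domain that match the end of query, or -1."""
    domain = domain.lstrip("~").strip(".").lower()
    query = query.strip(".").lower()
    if domain == "":
        return 0  # the global domain "~." matches everything, least specifically
    if query == domain or query.endswith("." + domain):
        return domain.count(".") + 1
    return -1


def links_for_query(query, links):
    """Return the names of the links whose DNS servers receive the query."""
    best, chosen = -1, []
    for link in links:
        score = max((match_length(query, d) for d in link.domains), default=-1)
        if score > best:
            best, chosen = score, [link]
        elif score == best and score >= 0:
            chosen.append(link)
    if not chosen:
        # No routing domain matched: fall back to links with +DefaultRoute.
        chosen = [link for link in links if link.default_route]
    return [link.name for link in chosen]


enp4s0 = Link("enp4s0", ["192.168.1.1"], ["lan"], default_route=True)
tun0 = Link("tun0", ["10.8.0.1"], ["~."], default_route=True)
tun1 = Link("tun1", ["10.9.0.1", "10.9.0.2"], ["example.com"], default_route=False)

print(links_for_query("foo.example.com", [enp4s0, tun0, tun1]))
print(links_for_query("fedoraproject.org", [enp4s0, tun0, tun1]))
print(links_for_query("fedoraproject.org", [enp4s0, tun1]))  # privacy VPN removed
```

The three calls correspond to the three cases in the text: a corporate name goes to tun1, everything else goes to the privacy VPN on tun0, and with tun0 removed the same query falls back to the router on enp4s0.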

Enter NetworkManager

How does systemd-resolved come up with the above configuration? It doesn’t. Everything I wrote in the previous section assumes that you are using NetworkManager, because systemd-resolved doesn’t actually make any decisions about where to send your DNS. That is all the responsibility of higher-level network management software, typically NetworkManager. If you use custom VPN software — anything that’s not a NetworkManager VPN plugin — then that software is also responsible for configuring systemd-resolved and playing nice with NetworkManager.

NetworkManager normally does a very good job of configuring systemd-resolved to work as you would expect, so most users should not need to make any changes. But if your DNS isn’t working as you expect, and you run resolvectl and find that systemd-resolved’s configuration is not what you want, do not report a bug against systemd-resolved! Report a bug against NetworkManager instead (if you’re confident there is a real bug).

If you don’t use NetworkManager, you can still make systemd-resolved do what you want, but you’re on your own. It will not configure itself for you.

NetworkManager 1.26.6

If you’re reading this in December 2020, you’re probably using NetworkManager 1.26.4 or earlier. Things are slightly different here, because NetworkManager recently landed a major behavior change. Previously, NetworkManager would always configure a ~. domain for exactly one network interface. This means that the value of systemd-resolved’s DefaultRoute settings was always ignored, since ~. takes precedence. Accordingly, NetworkManager did not bother to configure DefaultRoute at all. I told you that I fudged the output of the example above a little. In actuality, NetworkManager 1.26.4 has configured +DefaultRoute on my tun1 corporate VPN. That doesn’t make sense, because it should only receive DNS for example.com, but it previously did not matter, because there was previously always a ~. domain on some interface. If you’re not using any VPNs, then your Ethernet or Wi-Fi interface would receive the ~. domain. But since 1.26.6, NetworkManager now only ever configures a ~. domain when you are using a privacy VPN, so the DefaultRoute setting now matters.

Prior to NetworkManager 1.26.6, you could rely on resolvectl domain alone to see where your DNS goes, because there was always a ~. domain. Since NetworkManager 1.26.6 no longer always creates a ~. domain, that no longer works. You’ll need to look at the full output of resolvectl instead, since that will show you the DefaultRoute settings, which are now important.

My Corporate VPN is Missing a Routing Domain, What Should I Do?

Say your corporate VPN is example.com. You want all requests for example.com to be resolved by the VPN, and they are, because NetworkManager creates an appropriate routing domain for it. But you also want requests for some other domain, say example.org, to be resolved by the VPN as well. What do you do?

Most VPN protocols allow the VPN to tell NetworkManager which domains should be resolved by the VPN. Others allow specifying this in the connection profile that you import into NetworkManager. Sadly, not all VPNs actually do this properly, since it doesn’t matter for traditional non-split DNS. Worse, there is no graphical configuration in GNOME System Settings to fix this. There really should be. But for now, you’ll have to use nmcli to set the ipv4.dns-search and ipv6.dns-search properties of your VPN connection profile. Confusingly, even though that setting says “search,” it also creates a routing domain. Hopefully you never have to mess with this. If you do this, consider contacting your IT department to ask them to fix your VPN configuration to properly declare its DNS routing domains, so you don’t have to fix it manually. (This actually sometimes works!) You might have to do this more than once, if you discover additional domains that need to be resolved by the corporate VPN.
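For reference, here is roughly what the nmcli invocations look like, generated from Python so the pieces are easy to adapt. The connection name "Corporate VPN" is made up, and the helper dns_search_commands is hypothetical; the sketch only prints the commands rather than running them. Note that setting the property replaces the existing list, so the original domain is included again.

```python
import shlex


def dns_search_commands(connection, domains):
    """Build nmcli commands that set the DNS search (and routing) domains."""
    value = ",".join(domains)
    return [
        ["nmcli", "connection", "modify", connection, "ipv4.dns-search", value],
        ["nmcli", "connection", "modify", connection, "ipv6.dns-search", value],
        # Reconnect so the modified profile takes effect.
        ["nmcli", "connection", "up", connection],
    ]


for argv in dns_search_commands("Corporate VPN", ["example.com", "example.org"]):
    print(shlex.join(argv))
```

After running the printed commands and reconnecting, resolvectl should list both domains on the VPN’s interface. If one of the modify commands is rejected for an address family your VPN does not use, it is probably safe to skip that one.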

Custom VPN Software

By “custom VPN software,” I mean any VPN that is not a NetworkManager plugin. That includes proprietary VPN applications offered by VPN services, and also packaged software like openvpn or wg-quick, when invoked by something other than NetworkManager.

If your custom VPN software is broken, you could report a bug against your VPN software to ask for support for systemd-resolved, but it’s really best to ditch your custom software and configure your VPN using NetworkManager instead, if possible. There are really only two good reasons to use custom VPN software: if NetworkManager doesn’t have a plugin appropriate for your corporate VPN, or if you need to use WireGuard and your desktop doesn’t support WireGuard yet. (NetworkManager itself supports WireGuard, but GNOME does not yet, because WireGuard is special and not treated the same as other VPNs. Help welcome.)

If you use NetworkManager to configure your VPN, as desktop developers intend for you to do, then NetworkManager will take care of configuring systemd-resolved appropriately. Fedora ships with several NetworkManager VPN plugins installed by default, so the vast majority of VPN users should be able to configure their VPN directly in System Settings. This also allows you to control your VPN using your desktop environment’s VPN integration, rather than using the command line or a custom proprietary application.

OpenVPN users will want to look into using the unofficial update-systemd-resolved script. However, NetworkManager has good support for OpenVPN, and this is totally unnecessary if you configure your VPN with NetworkManager. So it’s probably better to use NetworkManager instead.

Now, what if you maintain custom VPN software and want it to work properly with systemd-resolved, or what if you can’t use NetworkManager for whatever reason? First, stop trying to write to /etc/resolv.conf, at least if it’s managed by systemd-resolved. You’ll instead want to use the systemd-resolved D-Bus API to configure an appropriate routing domain for your VPN interface. Read this documentation. You could also shell out to resolvectl, but it’s probably better to use the D-Bus API unless your VPN is managed by a shell script. Privacy VPNs (or corporate VPNs that wish to eschew split DNS and hijack all the user’s DNS) can also use the resolvconf compatibility script, but note this will only work properly with NetworkManager 1.26.6 and newer, because the best you can do with it is add a global routing domain to a network interface, but that’s not going to work as expected if another network interface already has a global routing domain. Did I mention that you might want to use the D-Bus API instead? With the D-Bus API, you can remove the global routing domain from any other network interfaces, to ensure only your VPN’s interface gets a global routing domain.
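For the "shell out to resolvectl" option, a minimal Python sketch might look like the following. The helper name resolvectl_commands, the interface names, and the addresses are placeholders borrowed from the earlier example; the sketch prints the commands instead of running them, since actually applying them requires appropriate privileges. As noted above, the D-Bus API is probably the better choice for real software.

```python
import shlex


def resolvectl_commands(interface, dns_servers, domains, default_route):
    """Build resolvectl commands that configure DNS for one VPN interface."""
    return [
        ["resolvectl", "dns", interface, *dns_servers],
        ["resolvectl", "domain", interface, *domains],
        ["resolvectl", "default-route", interface, "true" if default_route else "false"],
    ]


# Privacy VPN: global routing domain, so all DNS goes through the tunnel.
privacy = resolvectl_commands("tun0", ["10.8.0.1"], ["~."], default_route=True)

# Corporate VPN: only example.com is resolved through the tunnel.
corporate = resolvectl_commands("tun1", ["10.9.0.1", "10.9.0.2"], ["example.com"],
                                default_route=False)

for argv in privacy + corporate:
    print(shlex.join(argv))
```

On disconnect, resolvectl revert followed by the interface name should drop the per-link settings again. Note that this sketch does nothing about a global routing domain already present on another interface, which is the limitation that makes the D-Bus API attractive.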

Split DNS Without systemd-resolved

Quick tangent: systemd-resolved is not the only software available that implements split DNS. Previously, the most popular solution for this was to use dnsmasq. This has always been available in Fedora, but you had to go out of your way to install and configure it, so almost nobody did. Other custom solutions were possible too — I know one developer who runs Unbound locally — but systemd-resolved and dnsmasq are the only options supported by NetworkManager.

One significant difference between systemd-resolved and dnsmasq is that systemd-resolved, as a system daemon, allows for multiple sources of configuration. In contrast, NetworkManager runs dnsmasq as a subprocess, so only NetworkManager itself is allowed to configure dnsmasq. For most users, this distinction will not matter, but it’s important for custom VPN software.

Servers and DNSSEC

You might have noticed that the rest of this blog post focused pretty much exclusively on desktop use cases. Your server is probably not using a VPN. It’s probably not using mDNS. It’s probably not expected to be able to resolve local hostnames. Conclusion: most servers don’t need split DNS! Servers do benefit from systemd-resolved’s systemwide DNS cache, so running systemd-resolved on servers is still a good idea. But it’s not nearly as important for servers as it is for desktops.

There are some disadvantages for servers as well. First, systemd-resolved is not intended to be used on DNS servers. If you’re running a DNS server, you’ll need to disable systemd-resolved before setting up BIND or Unbound. That is one extra step to get your DNS server working relative to before, so enabling systemd-resolved by default is an inconvenience here, but that’s hardly difficult to do, so not a big deal.

However, systemd-resolved currently has several bugs in how it handles DNSSEC, and this is potentially a big deal if you depend on that. If you’re a desktop user, you’ll probably never notice, because DNSSEC on desktops is a total failure. Due to widespread and unfixable compatibility issues, it’s very unlikely that we would be able to enable DNSSEC validation by default in the next 10-15 years. If you have a desktop computer that never leaves your home and a good ISP, or a server sitting in a data center, then you can probably safely turn it on manually in /etc/systemd/resolved.conf, but this is highly inadvisable for laptops. So DNSSEC is currently useful for securing DNS between DNS servers, but not for securing DNS between your devices and your DNS server. (For that, we plan to use DNS over TLS instead.) And we’ve already established that DNS servers should not use systemd-resolved. So what’s the problem?

Well, it turns out DNS servers are not the only server software that expects DNSSEC to work properly. In particular, broken DNSSEC can result in broken mail servers. Other stuff might break too. If you’re running a server that needs functional DNSSEC, you’re going to need to disable systemd-resolved for now. These problems with DNSSEC resulted in some extremely vocal opposition to the Fedora 33 systemd-resolved change proposal, which unfortunately we didn’t properly appreciate until too late in the Fedora 33 development cycle. The good news is that these problems are being treated as bugs to be fixed. In particular, I am keeping an eye on this bug and this bug. Development is currently very active, so I’m hopeful that systemd-resolved’s DNSSEC support will look much better in time for Fedora 34.

Tell Me More!

Wow, you made it to the end of a long blog post, and you still want to know more? Next step is to read my colleague Zbigniew’s Fedora Magazine article, which describes some of the concepts I’ve already mentioned in greater detail. (However, when reading that article, be aware of the NetworkManager 1.26.6 changes I mentioned above. The article predates NetworkManager 1.26.6, so you will see in the examples that a ~. global routing domain is assigned to non-VPN interfaces. That will no longer happen.)

Conclusion

Split DNS is designed to just work, like the rest of the modern Linux desktop, and it should for everyone not using custom VPN software. If you do run into trouble with custom VPN software, the bottom line is to try using a NetworkManager VPN plugin instead, if possible. In the short term, you will also need to disable systemd-resolved if you depend on DNSSEC, but hopefully that won’t be necessary for much longer. Everyone else should hopefully never notice that systemd-resolved is there.

Happy resolving!