my thought experiment on delayed-apply dialogs yesterday got quite a strong response. the response was generally to the effect of “please, oh god, no!”. that’s sort of what i expected :)

the reason i was thinking about this at all is because jon mccann had sent me an email saying that he wanted to use dconf for his gdm rewrite. after a talk on jabber with him i realised that dconf currently has no support for delayed-apply — it has been engineered under the assumption of instant-apply.

jon’s problem is that changes to gdm config might involve starting or stopping x servers and the like. what he really wants is to get a single change notification for a bunch of changes that the user has made (instead of one at a time). he’s not the first person to have requested this. lennart mentioned something similar.

this got me thinking. the solution i came up with was to support an idea of a “transaction” on a given path in the dconf database. there were to be 4 apis for dealing with these transactions:

dconf_transaction_start (const char *path);

dconf_transaction_end (const char *path);

dconf_transaction_commit (const char *path);

dconf_transaction_revert (const char *path);

these “transactions” would be implemented in a very trivial (but perhaps confusing) way:

if a process had a transaction registered for a given path (say “/apps/gdm/”) then:

- any writes to a path under it would redirect to /apps/gdm/.working-set/

  - for example, writing to /apps/gdm/foo goes to /apps/gdm/.working-set/foo

- any reads from a path under it would redirect similarly, with fallback

  - for example, reading /apps/gdm/foo would first try to read from /apps/gdm/.working-set/foo and then from /apps/gdm/foo if the former is unset.

all redirection is done on the client — not the server. the set requests that the client sends to the server are actually explicitly for the keys inside of “.working-set”.
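here is a minimal sketch of that client-side redirection, assuming a toy in-memory table in place of the real dconf database. none of these names (`working_set_path`, `txn_write`, `txn_read`, `db_get`, `db_set`) are dconf api; they are made up for illustration.

```c
#include <assert.h>
#include <stdio.h>
#include <string.h>

#define MAX_KEYS 32
#define MAX_LEN  128

/* toy stand-in for the dconf database (an assumption, not the real thing) */
struct entry { char key[MAX_LEN]; char value[MAX_LEN]; };
static struct entry db[MAX_KEYS];
static int db_count;

/* returns the stored value, or NULL if the key is unset */
static const char *
db_get (const char *key)
{
  for (int i = 0; i < db_count; i++)
    if (strcmp (db[i].key, key) == 0)
      return db[i].value;
  return NULL;
}

/* returns 0 on success, -1 if the table is full */
static int
db_set (const char *key, const char *value)
{
  assert (strlen (key) < MAX_LEN && strlen (value) < MAX_LEN);
  for (int i = 0; i < db_count; i++)
    if (strcmp (db[i].key, key) == 0)
      {
        strcpy (db[i].value, value);
        return 0;
      }
  if (db_count == MAX_KEYS)
    return -1;
  strcpy (db[db_count].key, key);
  strcpy (db[db_count].value, value);
  db_count++;
  return 0;
}

/* hypothetical helper: map a key under the transaction root (which must
 * end in '/') to its shadow key inside ".working-set/".
 * returns 0 on success, 1 if key is not under root, -1 if out is too small. */
static int
working_set_path (const char *root, const char *key, char *out, size_t out_len)
{
  size_t root_len = strlen (root);
  int n;

  assert (root_len > 0 && root[root_len - 1] == '/');
  if (strncmp (key, root, root_len) != 0)
    return 1;
  n = snprintf (out, out_len, "%s.working-set/%s", root, key + root_len);
  return (n >= 0 && (size_t) n < out_len) ? 0 : -1;
}

/* writes under the root are redirected into the working set */
static int
txn_write (const char *root, const char *key, const char *value)
{
  char shadow[MAX_LEN];
  int r = working_set_path (root, key, shadow, sizeof shadow);

  if (r < 0)
    return -1;
  return db_set (r == 0 ? shadow : key, value);
}

/* reads try the working set first, then fall back to the real key */
static const char *
txn_read (const char *root, const char *key)
{
  char shadow[MAX_LEN];
  const char *value;

  if (working_set_path (root, key, shadow, sizeof shadow) == 0
      && (value = db_get (shadow)) != NULL)
    return value;
  return db_get (key);
}
```

with a transaction on “/apps/gdm/”, calling `txn_write ("/apps/gdm/", "/apps/gdm/foo", "new")` stores the value under /apps/gdm/.working-set/foo, and `txn_read` hands it back in preference to whatever sits at /apps/gdm/foo. anyone reading /apps/gdm/foo directly still sees the old value.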

if two people open transactions on conflicting paths then, well, you lose. you could easily get into a situation where /apps/gdm/foo is represented by both /apps/.working-set/gdm/foo and /apps/gdm/.working-set/foo. too bad. lock on the same resource if you require sanity.

commit would mean “copy all of /apps/gdm/.working-set/ down to /apps/gdm/ and destroy the working set”.

revert would mean “unset everything in /apps/gdm/.working-set/” (ie: destroy the working set).
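commit and revert as described could look roughly like this. it is a sketch only, again over a toy in-memory table rather than the real database, and `txn_commit`, `txn_revert`, `db_find` and `db_set` are hypothetical names, not dconf api.

```c
#include <assert.h>
#include <stdio.h>
#include <string.h>

#define MAX_KEYS 32
#define MAX_LEN  128

/* toy stand-in for the dconf database (an assumption) */
struct entry { char key[MAX_LEN]; char value[MAX_LEN]; int used; };
static struct entry db[MAX_KEYS];

/* returns the entry for key, or NULL if the key is unset */
static struct entry *
db_find (const char *key)
{
  for (int i = 0; i < MAX_KEYS; i++)
    if (db[i].used && strcmp (db[i].key, key) == 0)
      return &db[i];
  return NULL;
}

/* returns 0 on success, -1 if the table is full */
static int
db_set (const char *key, const char *value)
{
  struct entry *e = db_find (key);

  assert (strlen (key) < MAX_LEN && strlen (value) < MAX_LEN);
  for (int i = 0; e == NULL && i < MAX_KEYS; i++)
    if (!db[i].used)
      e = &db[i];
  if (e == NULL)
    return -1;
  strcpy (e->key, key);
  strcpy (e->value, value);
  e->used = 1;
  return 0;
}

/* build "<root>.working-set/" into out; root must end in '/' */
static void
working_set_root (const char *root, char *out, size_t out_len)
{
  int n = snprintf (out, out_len, "%s.working-set/", root);
  assert (n > 0 && (size_t) n < out_len);
}

/* commit: copy every key in the working set down to the real path,
 * destroying the working set as we go */
static void
txn_commit (const char *root)
{
  char ws[MAX_LEN], target[MAX_LEN], value[MAX_LEN];
  size_t ws_len;

  working_set_root (root, ws, sizeof ws);
  ws_len = strlen (ws);
  for (int i = 0; i < MAX_KEYS; i++)
    {
      if (!db[i].used || strncmp (db[i].key, ws, ws_len) != 0)
        continue;
      snprintf (target, sizeof target, "%s%s", root, db[i].key + ws_len);
      strcpy (value, db[i].value);
      db[i].used = 0;              /* free the shadow slot first ... */
      (void) db_set (target, value); /* ... so there is always room for this */
    }
}

/* revert: unset everything in the working set */
static void
txn_revert (const char *root)
{
  char ws[MAX_LEN];
  size_t ws_len;

  working_set_root (root, ws, sizeof ws);
  ws_len = strlen (ws);
  for (int i = 0; i < MAX_KEYS; i++)
    if (db[i].used && strncmp (db[i].key, ws, ws_len) == 0)
      db[i].used = 0;
}
```

note that this commit is not atomic: a watcher of the real keys would see them change one at a time. the sketch only shows the copy-down-and-destroy semantics, not how a real server would batch the change notification.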

the idea is that a delayed-apply dialog box would open a transaction on startup and close the transaction on exit. it would continue to read and write keys directly to /apps/gdm/* but because of the open transaction its reads and writes would actually be redirected to the working set. the gdm daemon would see no changes on the actual keys until a commit occurred.

the question that inspired yesterday’s blog entry: what is the lifecycle of the working set?

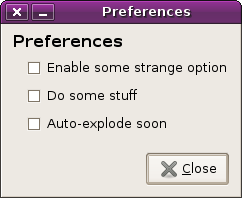

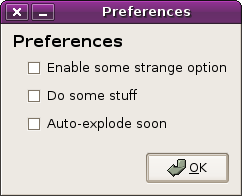

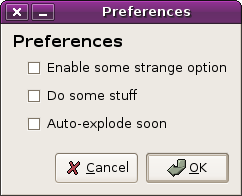

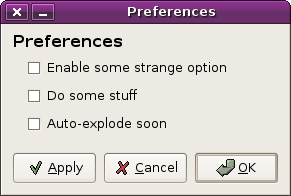

consider a problem with delayed-apply dialogs: what happens if two of them are open? in the instant apply case this is easy: the two dialog boxes affect each other in realtime. if you check something off in one of them then the other updates straight away. for delayed-apply this is very much more difficult.

if the first user applies, do the settings of the second user get wiped out? does the second user ignore the first user’s changes and write their own set over top? do we have some complicated merge operation? do we ask the user what they meant? insanity lies this way.

with the working set idea, the two dialogs would simply both be in on a sort of “shared transaction”. they would see each other’s changes in realtime but the changes would not be visible to gdm until one of them called commit(). it would be impossible to get into a position where you’d have to think about merging inconsistent sets of changes. pretty cool stuff.

under this mode of thinking, obviously if user1 opens the dialog, makes some changes, then user2 opens the dialog (and sees the unapplied changes made by user1) and then user1 closes the dialog, user2’s dialog would still contain the changes in progress.

so the lifecycle of the working set is at least as long as one person has a dialog open.

it’s easy (and probably fitting with existing user expectations) to make the lifecycle of the working set exactly as long as one person has a dialog open. to do this requires that the dconf server track processes and keep some sort of a refcount on how many people are interested in the working set. when the last caller disappears then the working set is automatically destroyed.

it’s obviously a very simple change in code, though, to make the dconf server fail to destroy the working set on the exit of the last dialog. this is what gave me the idea of having a working set of changes that stuck around after you dismissed a dialog.

it’s also a very simple change in code to cause the dconf server to deny the second process’s attempt to open a transaction when a current transaction is open. this sidesteps the whole “two dialog boxes open” problem rather effectively, but is far less fun if the code that is already written is perfectly capable of handling it.
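to show how small the difference between these three lifecycles is, here is a sketch of the server-side bookkeeping. the `ws_policy` enum, the `working_set` struct and the `ws_open`/`ws_close` functions are all invented for illustration. the real server would also have to notice when a client process dies, which is left out here.

```c
#include <assert.h>

enum ws_policy
{
  WS_REFCOUNTED,  /* destroyed when the last dialog closes */
  WS_STICKY,      /* sticks around after the last dialog closes */
  WS_EXCLUSIVE    /* a second transaction is denied while one is open */
};

struct working_set
{
  enum ws_policy policy;
  int refcount;   /* number of dialogs with the transaction open */
  int alive;      /* nonzero while the uncommitted changes are kept */
};

/* a dialog opens a transaction; returns 0 on success, -1 if denied */
static int
ws_open (struct working_set *ws)
{
  if (ws->policy == WS_EXCLUSIVE && ws->refcount > 0)
    return -1;
  ws->refcount++;
  ws->alive = 1;
  return 0;
}

/* a dialog closes its transaction (or its process goes away) */
static void
ws_close (struct working_set *ws)
{
  assert (ws->refcount > 0);
  ws->refcount--;
  if (ws->refcount == 0 && ws->policy != WS_STICKY)
    ws->alive = 0;  /* last interested party is gone: destroy */
}
```

the sticky variant differs from the refcounted one only in the single condition in `ws_close`, and the exclusive variant only in the check at the top of `ws_open`.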

the most useful effect of my blog entry is that it immediately started a discussion on #gnome-hackers. a few minutes after posting, owen asked me if i was around for the whole “72 buttons in the gnome 1.x control centre” mess. havoc joined in with beating me about the head. together they made some very good points:

- first and foremost, users expect their working set of changes to be tied to the dialog. when the dialog closes they go away. multiple dialogs don’t share the working set. the working set is something that is private to that one little window.

- the multiple-dialogs problem is best solved with a single-instance-app mechanism

- the multiple dialogs thing isn’t even too much of a problem. the last person to click apply wins. this is what most people expect anyway.

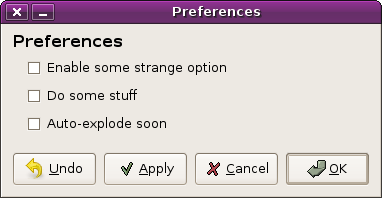

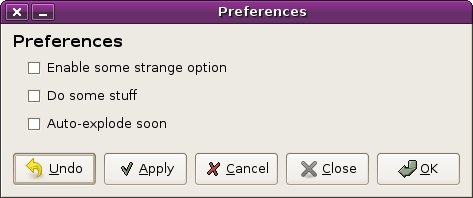

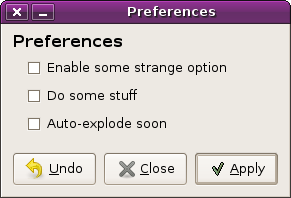

- an undo button isn’t useful enough to be a part of the ui (just close and reopen for those rare circumstances) and an apply button is very questionable on the same grounds

there’s also a fundamental technical problem with my approach. dconf is designed so that everyone in a single process shares access to the database through a shared client-side “stack”. if you have multiple libraries in a single process and one of them starts a transaction on the shared stack then the other parts of the process may become confused (imagine the case of a gdm preferences dialog built into the main gdm process). having the entire process enter and exit transactions is clearly undesirable.

the upshot of all of this is that i think i’m not going to do transactions in this way. as a side effect, my ideas for crazy dialogs that share working sets that stick around even after the dialog closes are possibly also dead.

my next post will be about how i intend to support transactions.