Introduction

I have been using Mandrake (later Mandriva, now Mageia) for a while. I noticed that Mageia is pretty friendly to new packagers. Every new packager (even an experienced one) gets a mentor, who is there to answer questions and to guide you toward becoming a good packager. Once the mentor decides you’re good enough (how long that takes varies), you become a full packager. The ease of joining, together with the lack of bureaucracy, made me want to try and help out.

I started out by just packaging random things that people wanted. That had a big drawback: you’re responsible for handling the bugs in those new packages, including packages I never use or care about. Aaargh!

I switched to packaging only GNOME and a few small things that I use myself (maildrop, archivemail, a few others).

GNOME packaging

Having never packaged for a distribution before, I found it relatively easy. I guess a big benefit is that GNOME is pretty stable; not much changes. The things I found annoying:

- Problems related to linking

Other Mageia packagers know how to solve these. I just file a bug upstream and wait for the GNOME maintainer to give me a patch. Sometimes, while I am waiting for upstream, another Mageia packager has already added a patch for the problem (without any Mageia bug report involved).

I think packaging as quickly as possible is part of the “release early, release often” philosophy. People consciously choose to run the development version of a distribution. Although nothing should be knowingly broken, the focus should be on getting new software to development-version users as quickly as possible.

Something broken? Either have upstream release a new (micro/pico) version, or add a patch.

- Usage of -Werror

If this causes a build error, expect Mageia packagers to add a patch that removes the -Werror usage. If you want to be notified of warnings, write a system that notifies you of warnings! I find -Werror a waste of time.

- -Werror and deprecations (hugely annoying!)

Fortunately, there is a gcc switch (-Wno-error=deprecated-declarations) to not error out on deprecations, and most modules seem to use it now.

- New modules

Example: Boxes. Loads of new dependencies. Plus some already packaged software needs new configure options. This can easily take a week.

Boredom

My main issue with packaging GNOME is that it consists of loads of tarballs and that most of the work is really, really boring. Usually you just:

- Download the new tarball

- Update the version number in the .spec file and change release to 1

- Submit the spec file to the Mageia build system

Note that I completely ignore a lot of things:

- Stable distribution

I’ve been packaging for Cauldron (“unstable” / “rawhide” / “Factory”). The process for submitting updates to the stable version is (obviously) very different.

- Build errors

I don’t test. I just rely on the Mageia build system to bomb out.

- New major versions of libraries

In Mageia we package libraries per major version. New major? Doesn’t matter: the build system will bomb out, because the spec file looks for the specific major.

- Major functionality changes

Usually noticeable from the version number. Packaging per major version is also nice because the distribution can have the same library packaged in multiple major versions. Although we do recompile everything immediately, this avoids a lot of headaches when something doesn’t compile anymore.

- Testing the software

Any packager in Mageia can add patches, so if something is totally broken, there are a lot of people who can fix it. In practice I almost never test things before submitting. If there is a problem, it is better to have anyone inform upstream asap. Judging from the bugs that have been filed, they are usually things I wouldn’t have noticed anyway.

Avoid boredom, script it!

To avoid getting overly bored, I instead wrote a script to automate changing the .spec file. I’d watch my ftp-release-list folder and look at all the incoming emails. Based on that I’d:

- Call my script to increase the version number and reset the release

- Call a Mageia command to commit all the changes

- Call a Mageia command to submit the new package

This was nice, but it quickly became boring as well. Usually I’d just call my script, check nothing, then commit and submit, and wait for either an email about the new RPM or a failure email.
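The version bump step might look like the following Python sketch. It is not the actual script: `bump_spec` is a hypothetical name, and it assumes the spec uses literal `Version:` and `Release:` tags, with the release optionally written as `%mkrel N`.

```python
import re

# Assumption: the spec has a literal "Version:" tag with the version right after it.
VERSION_RE = re.compile(r"^(Version:[ \t]*)(\S+)", re.MULTILINE)
# Assumption: the release is a plain number or "%mkrel N"; text after the number is kept.
RELEASE_RE = re.compile(r"^(Release:[ \t]*(?:%mkrel[ \t]+)?)(\d+)", re.MULTILINE)


def bump_spec(spec_text: str, new_version: str) -> str:
    """Set Version: to new_version and reset the release number to 1."""
    text, n = VERSION_RE.subn(lambda m: m.group(1) + new_version, spec_text, count=1)
    if n != 1:
        raise ValueError("no literal Version: line found")
    text, n = RELEASE_RE.subn(lambda m: m.group(1) + "1", text, count=1)
    if n != 1:
        raise ValueError("no numeric Release: line found")
    return text
```

Reading the spec, calling `bump_spec(text, "3.4.1")` and writing the result back covers the “increase the version number and reset the release” step. A spec that defines its version through macros would need extra handling.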

Submit it already!

I changed my script and added a --submit option. This would make my script call the commit + submit commands automatically (and abort as soon as something failed).
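Stopping at the first failure can be sketched as a loop over commands that checks each exit code. The command lists are parameters; the mgarepo invocations at the end are an assumption about how the Mageia commit and submit commands are called, not something spelled out here.

```python
import subprocess
from typing import Sequence, Tuple


def run_until_failure(commands: Sequence[Sequence[str]],
                      cwd: str = ".") -> Tuple[bool, str]:
    """Run each command in order and stop at the first non-zero exit code.

    Returns (success, output of the last command that ran).
    """
    output = ""
    for cmd in commands:
        proc = subprocess.run(list(cmd), cwd=cwd, capture_output=True, text=True)
        output = proc.stdout + proc.stderr
        if proc.returncode != 0:
            return False, output  # later commands (e.g. submit) never run
    return True, output


# Assumed Mageia invocations; check them against your own mgarepo setup.
COMMIT_AND_SUBMIT = [
    ["mgarepo", "ci", "-m", "new version"],
    ["mgarepo", "submit"],
]
```

With `--submit`, the script would call `run_until_failure(COMMIT_AND_SUBMIT, cwd=package_dir)` right after bumping the spec; returning the failing command’s output keeps the error available for reporting.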

Now I was submitting as soon as I saw a new email in ftp-release-list. There is about a 5-minute lag between the ftp-release-list email and the tarball actually appearing on ftp.gnome.org, so I made another script to download the tarball directly from master.gnome.org instead.
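Downloading from a specific server mostly means building the tarball URL for it. This sketch assumes the layout used on ftp.gnome.org (`sources/<module>/<major.minor>/<module>-<version>.tar.xz`). The master.gnome.org path isn’t given, so every base URL is a parameter, and `tarball_url`/`candidate_urls` are hypothetical names.

```python
from typing import Iterable, List


def tarball_url(base: str, module: str, version: str, ext: str = "tar.xz") -> str:
    """Build <base>/<module>/<major.minor>/<module>-<version>.<ext>."""
    major_minor = ".".join(version.split(".")[:2])
    return f"{base.rstrip('/')}/{module}/{major_minor}/{module}-{version}.{ext}"


def candidate_urls(bases: Iterable[str], module: str, version: str) -> List[str]:
    """One URL per server, in order of preference (e.g. master first, then ftp)."""
    return [tarball_url(base, module, version) for base in bases]
```

For example, with the base `http://ftp.gnome.org/pub/GNOME/sources`, gtk+ 3.4.0 maps to `.../gtk+/3.4/gtk+-3.4.0.tar.xz`.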

Patches which do not apply

As I was submitting everything to the Mageia build system, I noticed that some builds failed just because a patch had been merged upstream. That’s something I could’ve checked myself. This was annoying, as it can take a while before the Mageia build system notifies you of a problem. That time is basically wasted; I want the tarball available as an rpm package asap. So I added another check to the script: verify that the %prep stage actually succeeds. This ensured that I’d notice immediately if a patch wouldn’t apply. As a result, the number of obviously broken Mageia submissions decreased (probably making the Mageia sysadmins happy), but more importantly, the time it takes before a tarball is available as an rpm decreased as well.
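`rpmbuild -bp` runs the build only up to and including %prep, which unpacks the tarball and applies the patches, so it is a cheap local check. The sketch assumes the package checkout has the usual SPECS/ and SOURCES/ directories, so `_topdir` can point at it; the function names are illustrative.

```python
import subprocess
from typing import List, Tuple


def prep_command(spec_path: str, topdir: str) -> List[str]:
    """rpmbuild -bp runs only %prep (unpack sources, apply patches).

    --nodeps skips the BuildRequires check, since only patching is tested here.
    """
    return ["rpmbuild", "-bp", "--nodeps", "--define", f"_topdir {topdir}", spec_path]


def prep_succeeds(spec_path: str, topdir: str) -> Tuple[bool, str]:
    """Run %prep locally; return (ok, combined output) for later reporting."""
    proc = subprocess.run(prep_command(spec_path, topdir),
                          capture_output=True, text=True)
    return proc.returncode == 0, proc.stdout + proc.stderr
```

Only when `prep_succeeds` returns True would the script go on to commit and submit.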

Funda Wang

There was another problem: while I was sleeping, Funda Wang was awake, busy packaging all the GNOME tarballs and leaving nothing for me to do.

The only way to solve this was to hook my script directly up to ftp-release-list. To do that, I had to solve a few problems:

- Package names can be different from a tarball name

I had already partly solved that in the script. I decided to have the script bomb out when a tarball is used by multiple packages (e.g. the gtk+ tarball is used by 2 packages: gtk+2.0 and gtk+3.0). So the script would handle NetworkManager (tarball) vs networkmanager (package), but not gtk+.

- Version number changes

I added code to have the script judge the version number change according to the way GNOME uses version numbers. GNOME versions are mostly of the form x.y.z; if y is odd, it is a development version. Judging version numbers basically comes down to: a change in x is bad, and automatically going from an even y to an odd y is bad as well.

- Verify tarball SHA256 hash

I wanted to be sure that the downloaded tarball had the same SHA256 hash as the one announced in the ftp-release-list email, so I wrote some code to check that.

- Be informed what the script is doing

Everything that the script does based on ftp-release-list is automatically sent as a followup in the same folder as the ftp-release-list emails.

- Wait before downloading

The script doesn’t have access to master.gnome.org, so it has to wait a little before trying to download the new tarball. I decided on 5 minutes. This quickly failed, because maildrop doesn’t allow a delivery command to run longer than 5 minutes. Adding an os.fork() call solved that: the parent process returns to maildrop immediately, while the child does the waiting.
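The fork trick looks roughly like this: the parent returns at once so the delivery command can finish, and the child does the slow part. A minimal sketch; `run_detached` is a hypothetical name and the error handling is an assumption.

```python
import os
import sys
import traceback
from typing import Callable


def run_detached(work: Callable[[], object]) -> int:
    """Fork: the child runs work() and exits; the parent gets the child's PID at once.

    The parent can then exit right away, so maildrop sees the delivery finish
    well within its time limit while the child keeps waiting and downloading.
    """
    # Flush first so buffered output is not written twice (once per process).
    sys.stdout.flush()
    sys.stderr.flush()
    pid = os.fork()
    if pid == 0:  # child process
        code = 0
        try:
            work()
        except BaseException:
            traceback.print_exc()
            code = 1
        finally:
            sys.stdout.flush()
            sys.stderr.flush()
        os._exit(code)  # never return into the caller's code in the child
    return pid
```

In the delivery command, the parent would call something like `run_detached(lambda: process(message))` followed by `sys.exit(0)`, where `process` stands for the wait, download and submit logic.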

Reading logs is boring

Having my script send followups to the original ftp-release-list emails was nice, but it meant I was reading every followup to check whether the script was doing what it should. After a few emails, this became too cumbersome.

I changed the script to add “(ERROR)” to the subject line in case of errors. After a while, I noticed that most errors were due to the same few problems. I didn’t need to see the entire email; knowing the error message was enough. So, as an enhancement, I made the subject line contain the error message itself. To determine the error message from the commands that were run, I assumed that if a command fails (noticeable by its exit code), the last line of its output contains the error message. This is a pretty reliable assumption.
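Threading a followup under the announcement and putting the last output line in the subject can be done with Python’s standard `email` package. A sketch under assumptions: the sender address and the exact subject format are made up, and the “last line is the error” rule is the heuristic described above.

```python
import email
from email import policy
from email.message import EmailMessage
from typing import Optional


def parse_message(raw: str) -> EmailMessage:
    """Parse the ftp-release-list email that maildrop pipes to the script."""
    return email.message_from_string(raw, policy=policy.default)


def error_summary(output: str) -> str:
    """Assume the last non-empty output line of a failed command is the error."""
    lines = [line.strip() for line in output.splitlines() if line.strip()]
    return lines[-1] if lines else "unknown error"


def make_followup(original: EmailMessage, body: str,
                  failed_output: Optional[str] = None) -> EmailMessage:
    """Build a reply threaded under the original announcement email."""
    subject = str(original.get("Subject", ""))
    if not subject.lower().startswith("re:"):
        subject = "Re: " + subject
    if failed_output is not None:
        # Put the error itself in the subject so a glance at the folder is enough.
        subject += " (ERROR) " + error_summary(failed_output)

    reply = EmailMessage()
    reply["Subject"] = subject
    reply["From"] = "gnome-packaging-script@localhost"  # hypothetical sender
    if original.get("To"):
        reply["To"] = str(original["To"])
    msg_id = original.get("Message-ID")
    if msg_id:
        # Threading headers make mail clients show the reply under the original.
        reply["In-Reply-To"] = str(msg_id)
        refs = original.get("References")
        reply["References"] = f"{refs} {msg_id}" if refs else str(msg_id)
    reply.set_content(body)
    return reply
```

`reply.as_string()` can then be handed to local delivery so the followup lands in the same folder as the announcement.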

Waiting 5 minutes?

Before downloading a tarball, the script would wait 5 minutes, obviously because of mirror lag. I noticed a few problems with that:

- Resubmitting ftp-release-list emails

Every so often I’d fix a cause of the script failing. I’d then pass the original ftp-release-list email to the script again. The script would still wait 5 minutes. The entire wait was unneeded, and it increased the chance that another packager would package the tarball in the meantime (and, yeah, this happened).

- Lag sometimes more than 5 minutes

Although 95% of all tarballs were available within 5 minutes, some weren’t available yet.

- ftp-release-list lag

Sometimes the ftp-release-list email takes a few minutes to arrive (instead of arriving within the same second), making the script wait way longer than needed.

To solve these problems, I changed the script to:

- Make use of the ftp-release-list Date: field

The script waits until 5 minutes after the time in the email’s Date: header. If the same email is processed again later, the script determines that there is no need to wait. It helps that I know both the GNOME server and my machine are synced via NTP.

- Repeat download for up to 10 minutes

I enhanced the script to repeatedly try to download the file for up to 10 minutes, at 30-second intervals.

- Start initial attempt after 3 minutes

As the script would retry the download anyway, I decreased the initial waiting time to 3 minutes (down from 5). This ensures that the package is available asap, and it also minimizes the time to notice errors (e.g. merged patches).
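The timing logic (wait until a fixed delay after the Date: header, then retry) plus the SHA256 comparison could be sketched like this. `fetch` stands for a hypothetical function that returns the tarball bytes, or None while the mirror doesn’t have the file yet; `sleep` and `clock` are injectable only to make the loop testable.

```python
import hashlib
import time
from datetime import datetime, timedelta, timezone
from email.utils import parsedate_to_datetime
from typing import Callable, Optional


def seconds_until_first_try(date_header: str, now: datetime,
                            delay: timedelta = timedelta(minutes=3)) -> float:
    """Seconds to sleep so the first attempt happens `delay` after the Date: header.

    Returns 0.0 when that moment has already passed, e.g. when an old
    ftp-release-list email is fed to the script again.
    """
    sent = parsedate_to_datetime(date_header)
    if sent.tzinfo is None:  # "-0000" parses as naive; treat it as UTC
        sent = sent.replace(tzinfo=timezone.utc)
    return max(0.0, (sent + delay - now).total_seconds())


def download_with_retry(fetch: Callable[[], Optional[bytes]],
                        timeout: float = 600.0, interval: float = 30.0,
                        sleep: Callable[[float], None] = time.sleep,
                        clock: Callable[[], float] = time.monotonic) -> Optional[bytes]:
    """Call fetch() every `interval` seconds until it returns data or `timeout` passes."""
    deadline = clock() + timeout
    while True:
        data = fetch()
        if data is not None:
            return data
        if clock() + interval > deadline:
            return None
        sleep(interval)


def sha256_matches(data: bytes, expected_hex: str) -> bool:
    """Compare against the hash announced in the ftp-release-list email."""
    return hashlib.sha256(data).hexdigest() == expected_hex.strip().lower()
```

A caller would sleep for `seconds_until_first_try(message["Date"], datetime.now(timezone.utc))`, call `download_with_retry(fetch)`, and only continue when `sha256_matches` agrees with the announced hash. Reprocessing an old email yields a wait of 0, which is exactly the resubmission case above.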

Automatically packaging gtk+

The script only handled 1 package per tarball, and having it fail for gtk+ really bothered me. Firstly, some modules needed a newer gtk+ and were failing while gtk+ had already been released (and could’ve been packaged). Secondly, a script which doesn’t handle gtk+ is just bad.

I solved this by having the script look at all the possible packages, then ignore any package whose version is newer than the just-released tarball (e.g. if gtk+ 2.24.11 is released, ignore the package which has gtk+ version 3.3.18), and then take the package(s) with either the same version as, or the version closest to, the new tarball.
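Selecting the package for a tarball like gtk+ can be sketched as “drop candidates newer than the release, keep the closest remaining version”. The `Package` record and its fields are hypothetical (the information would come from the spec files), and version parsing assumes purely numeric parts.

```python
from typing import List, NamedTuple, Tuple


class Package(NamedTuple):
    name: str     # Mageia package name, e.g. "gtk+3.0"
    tarball: str  # upstream tarball name the spec downloads, e.g. "gtk+"
    version: str  # version currently in the spec


def parse_version(version: str) -> Tuple[int, ...]:
    return tuple(int(p) for p in version.split("."))


def packages_for_tarball(packages: List[Package], tarball: str,
                         new_version: str) -> List[Package]:
    """Pick the package(s) whose current version is closest to, but not above, new_version."""
    new = parse_version(new_version)
    candidates = [p for p in packages
                  if p.tarball.lower() == tarball.lower()  # lenient about name casing
                  and parse_version(p.version) <= new]      # e.g. skip gtk+3.0 for a 2.24 release
    if not candidates:
        return []
    closest = max(parse_version(p.version) for p in candidates)
    return [p for p in candidates if parse_version(p.version) == closest]
```

For gtk+ 2.24.11 this returns only the gtk+2.0 package, because the gtk+3.0 package (3.3.18) is newer than the release.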

The version number change is still judged later on (as explained previously, basically: don’t automatically change major versions or upgrade to a development version). Furthermore, a library which changes its major will cause a build failure. So this should be pretty much fine.
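The version judgement can be written as a small function. A sketch assuming numeric x.y.z versions; the “not newer” check is an extra safeguard not mentioned in the post.

```python
from typing import Optional, Tuple


def parse_version(version: str) -> Tuple[int, ...]:
    """'3.4.1' -> (3, 4, 1); pads to at least x.y.z (assumes numeric parts)."""
    parts = tuple(int(p) for p in version.split("."))
    return parts + (0,) * (3 - len(parts))


def judge_version_change(old: str, new: str) -> Optional[str]:
    """Return None if the update may go in automatically, else the reason to stop."""
    o, n = parse_version(old), parse_version(new)
    if n <= o:
        return "not newer"  # extra sanity check, not described in the post
    if n[0] != o[0]:
        return "major version change"
    if o[1] % 2 == 0 and n[1] % 2 == 1:  # odd minor = GNOME development series
        return "stable -> development"
    return None
```

Going from a development release to the next stable one (3.3.92 to 3.4.0) is allowed, while 3.4.0 to 3.5.1 is stopped, which matches the rejected GDM update mentioned later.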

Which patch fails to apply?

Ensuring that patches apply is good, but when that check failed, I had to run the command again and ask for log output.

A very common reason for a patch no longer applying is that it has been merged upstream (or was taken from upstream in the first place), so seeing that in the log output makes things much easier.
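Finding the failing patch in the %prep output could work by remembering the last patch rpm announced and looking for GNU patch’s “previously applied” message. Both the `Patch #N (file):` line format and that message are assumptions about rpm and GNU patch output, so check them against a real log before relying on this sketch; it also assumes %prep stops at the first failing patch.

```python
import re
from typing import NamedTuple, Optional

# Assumed format of the line rpm prints before applying each patch.
PATCH_HEADER = re.compile(r"^Patch #(\d+) \((.+)\):$")
# GNU patch's message for a patch whose changes are already present.
ALREADY_APPLIED = "Reversed (or previously applied) patch detected"


class PatchFailure(NamedTuple):
    number: int
    filename: str
    probably_merged: bool


def find_failed_patch(prep_log: str) -> Optional[PatchFailure]:
    """Return the last patch announced in a failed %prep log, if any."""
    current = None
    merged = False
    for line in prep_log.splitlines():
        m = PATCH_HEADER.match(line.strip())
        if m:
            current = (int(m.group(1)), m.group(2))
            merged = False  # the message must belong to the current patch
        elif current is not None and ALREADY_APPLIED in line:
            merged = True
    if current is None:
        return None
    return PatchFailure(current[0], current[1], merged)
```

A followup subject such as “patch 1 (foo.patch) probably merged” would then tell at a glance which patch to drop from the spec.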

Today

The above explains how I developed the script up to today. The result is an 858-line script; if you want to look at it, I put it in Mageia svn.

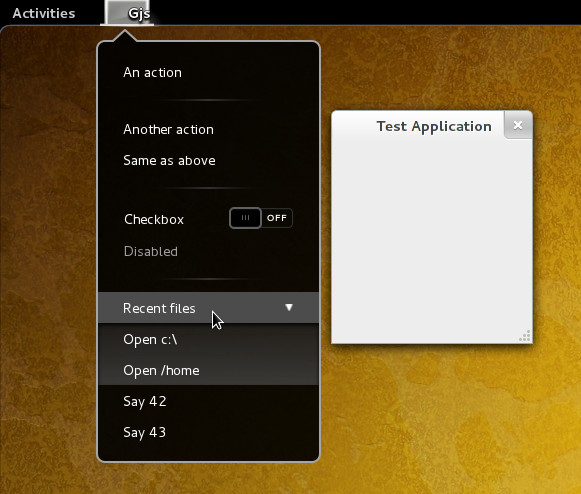

The screenshot above shows the various automated replies to ftp-release-list emails (among other emails). If you look closely, you’ll see that Mageia hasn’t packaged gnome-dvb-daemon. The initial GDM update was rejected because it concerned a stable->unstable change. Patches failed to apply for gnome-documents and banshee. All the “FREEZE” error messages are because Mageia is in version freeze, and I’m not allowed to submit new packages during a version freeze. Lastly, the script hadn’t yet responded to the release of the atk and file-roller tarballs when the screenshot was taken (it has meanwhile).

The nicest thing is the short time between the ftp-release-list email and the response. In that time, the script has downloaded the tarball, uploaded it to Mageia, and performed various checks in between. Building a package should take 10 minutes at most. It then needs to be uploaded to the Mageia mirrors. The slowest tier 1 mirror only checks for new files once per hour, meaning an average delay of 30 minutes. All in all, it should be quite manageable to provide most GNOME tarballs to Mageia Cauldron users within 1 hour.

Further boredom avoidance

There are still various things which annoy me:

- Updating BuildRequires

configure.{ac,in} uses PKG_CHECK_MODULES to check for dependencies, which correspond to BuildRequires. Those should just be automatically synchronized with whatever is in the spec file. I’m not too sure what to do with BuildRequires which Mageia doesn’t want/need. I’m thinking of still keeping these in the .spec, but putting them in a %if 0 / %endif block.

- Merged patches

Ideally you just remove them from the spec and are done with it. I’m not sure how the script could determine that a patch was merged (the exact “patch” return code, plus how to call “patch”: some patches want -p1, some -p0, etc.). Furthermore, some patches require autoreconf as well as additional BuildRequires (gettext-devel); those additions should be removed as well. I’m wondering whether I should just ignore that, or add some special comment to the .spec file to tell the script what should be done. I’ll hold off on this until I have a bit more experience with merged patches.

- Mirror lag

GNOME sysadmin thing. Not really a priority.

- Build ordering

Evolution wants the latest (maybe unreleased) evolution-data-server, GNOME Shell wants the latest (maybe unreleased) Mutter, and NetworkManager-vpnc wants the latest NetworkManager.
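For the “Updating BuildRequires” item above, a first step could be extracting the modules from PKG_CHECK_MODULES calls and turning them into `pkgconfig(...)` BuildRequires. A rough sketch under assumptions: it only resolves simple `NAME=value` shell variables, ignores m4 definitions, and leaves the `%if 0` handling of unwanted BuildRequires to a human.

```python
import re
from typing import Dict, List, Optional

PKG_RE = re.compile(r"PKG_CHECK_MODULES\(\s*\[?(\w+)\]?\s*,\s*(\[[^\]]*\]|[^,)]*)")
ASSIGN_RE = re.compile(r"^[ \t]*(\w+)=([\w.]+)[ \t]*$", re.MULTILINE)  # e.g. GLIB_REQUIRED=2.31.0
VAR_RE = re.compile(r"\$\{?(\w+)\}?")
OPERATORS = {">=", "<=", "=", ">", "<"}


def resolve(version: str, variables: Dict[str, str]) -> Optional[str]:
    """Replace a $VAR / ${VAR} version by its value; None if unknown."""
    m = VAR_RE.fullmatch(version)
    if m:
        return variables.get(m.group(1))
    return version


def build_requires(configure_ac: str) -> List[str]:
    """Turn PKG_CHECK_MODULES module lists into BuildRequires lines."""
    variables = dict(ASSIGN_RE.findall(configure_ac))
    result: List[str] = []
    for match in PKG_RE.finditer(configure_ac):
        tokens = match.group(2).strip("[] \t\n").split()
        i = 0
        while i < len(tokens):
            line = f"BuildRequires: pkgconfig({tokens[i]})"
            if i + 2 < len(tokens) and tokens[i + 1] in OPERATORS:
                version = resolve(tokens[i + 2], variables)
                if version is not None:  # unresolved variable: keep name only
                    line += f" {tokens[i + 1]} {version}"
                i += 3
            else:
                i += 1
            if line not in result:
                result.append(line)
    return result
```

Comparing this list with the BuildRequires already in the spec would show what needs adding; deciding what Mageia doesn’t want stays manual.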