May 20, 2009

community, freesoftware, maemo

Fabrizio Capobianco of Funambol wondered recently if there are too many mobile Linux platforms.

The context was the recent announcement of oFono by Intel and Nokia, and some confusion and misunderstanding about what oFono represents. Apparently, several people in the media thought that oFono would be Yet Another Complete Stack, and Fabrizio took the bait too.

As far as I can tell, oFono is a component of a mobile stack, supplying the kind of high-level API for telephony functions which GStreamer does for multimedia applications. If you look at it like this, it is a natural complement to Moblin and Maemo and potentially a candidate technology for inclusion in the GNOME Mobile module set.

Which brings me to my main point. Fabrizio mentions five platforms besides oFono in his article: Android, LiMo, Symbian, Maemo and Moblin. First, Symbian is not Linux. Of the other four, LiMo, Maemo and Moblin share a bunch of technology in their platforms. Common components across the three are: the Linux kernel (duh), D-Bus, Xorg, GTK+, GConf, GStreamer, BlueZ, SQLite… For the most part, they use the same build tools. The differences are in the middleware and application layers of the platform, but the APIs that developers are mostly building against are the same across all three.

Maemo and Moblin share even more technology, as well as having very solid community roots. Nokia have invested heavily in getting their developers working upstream, as has Intel. They are both leveraging community projects right through the stack, and focusing on differentiation at the top, in the user experience. The same goes for Ubuntu Netbook Edition (the nearest thing that Moblin has to a direct competitor at the moment).

So where is the massive diversity in mobile platforms? Right now, there is Android in smartphones, LiMo targeting smartphones, Maemo in personal internet tablets and Moblin on netbooks. And except for Android, they are all leveraging the work being done by projects like GNOME, rather than re-inventing the wheel. This is not fragmentation, it is adaptability. It is the basic system being tailored to very specific use-cases by groups who decide to use an existing code base rather than starting from scratch. It is, in a word, what rocks about Linux and free software in general.

May 18, 2009

community

Recently I’ve had a number of conversations with potential clients which have reinforced something which I have felt for some time. Companies don’t know how to evaluate the risk associated with free software projects.

First, a background assumption. Most software built in the world, by a large margin, is in-house software.

IT departments of big corporations have long procurement processes where they evaluate the cost of adopting a piece of infrastructure or software, including a detailed risk analysis. They ask a long list of questions, including some of these.

- How much will the software cost over 5 years?

- What service package do we need?

- How much will it cost us to migrate to a competing solution?

- Is the company selling us this software going to go out of business?

- If it does, can we get the source code?

- How much will it cost us to maintain the software for the next 5 years, if we do?

- How much time & money will it cost to build an equivalent solution in-house?

There are others, but the nub of the issue is there: you want to know what the chances are that the worst will happen, and how much that scenario will cost you. Companies are very good at evaluating the risk associated with commercial software – I would not be surprised to learn that there are actuarial tables which, given how much a company makes, how old it is and how many employees it has, can tell you its probability of still being alive in 1, 3 and 5 years.

Companies built on free software projects are harder to gauge. Many “fauxpen source” companies have integrated “community” in their sales pitch as an argument for risk mitigation. The implicit message is: “You’re not just buying software from this small ISV, if you choose us you get this whole community too, so you’re covered if the worst happens”. At OSBC, I heard one panellist say “Open Source is the ultimate source escrow” – you don’t have to wait until the worst happens to get the code, you can get it right now, before buying the product.

This is a nice argument indeed. But for many company-driven projects, it’s simply not the case that the community will fill the void. The risk involved in the free software solution is only slightly smaller than buying a commercial software solution.

And what of community-driven projects, like GNOME? How do you evaluate the risk there? There isn’t even a company involved.

There are a number of ways to evaluate legal risk of adopting free software – Black Duck and Fossology come to mind. But very little has been written about evaluating the community risks associated with free software adoption. This is closely associated with the community metrics work I have pointed to in the past – Pia Waugh and Randy Metcalfe’s paper is still a reference in this area, as is Siobhan O’Mahony and Joel West’s paper “The Role of Participation Architecture in Growing Sponsored Open Source Communities”.

This is a topic that I have been working on for a while now in fits and starts – but I think the time has come to get the basic ideas I use to evaluate risk associated with free software projects down on paper and out in the ether. In the meantime, what kinds of criteria do my 3 readers think I should be keeping in mind when thinking about this issue? Do you have references to related work that I might not have heard about yet?

May 11, 2009

running

Yesterday, on my second serious attempt (previously, I injured myself 4 weeks before the race), I finally ran a marathon in Geneva, Switzerland.

Since getting injured in 2007, I’ve taken up running fairly seriously, joined a club, and this time round I was fairly conscientious about my training, getting in most of my long runs, speed work & pace runs as planned. I thought I was prepared, but I don’t think anything can prepare you for actually running 42.195 kilometers at race pace. Athletes will tell you that the marathon is one of the hardest events out there because it’s not just a long-distance race, it’s also a race where you have to run fast all the time. But until you’ve done it, it’s hard to appreciate what they mean.

This year, the club chose the Geneva marathon as a club outing, and around 40 club members signed up for either the marathon or the half-marathon on the banks of Lac Leman, and I couldn’t resist signing up for the marathon.

I wasn’t in perfect health, since I’ve been feeling some twinges in my right hip & hamstring for the past couple of weeks, but during the taper before the race I had been taking it very easy, and I felt pretty good the day before. We met up with the club on Saturday 9th after lunch and drove to Geneva to get our race numbers, then on to the hotel in Annemasse for a “special marathon runner’s” dinner (which had a few too many lardons and a little too much vinaigrette & buttery sauce to be called a true marathon runner’s meal), last-minute preparations for the big day, and a good night’s rest.

Up early, light breakfast, back into Geneva for the race. Arrived at 7am, lots of marathon runners around, and the excitement levels are starting to climb. After the usual formalities (vaseline under armpits and between thighs, taped nipples, visit to toilet) we made our way to the starting line for the 8am start.

Nice pace from the start – a little fast, even, but by the 3rd kilometer I’d settled into my race pace, at around 4’40 per kilometer (aiming for 3h20 with a couple of minutes margin). Walked across every water station to get two or three good mouthfuls of water and banana without upsetting my tummy. Around kilometer 7, I started to feel a little twinge in the hamstring and piriformis/pyramidal muscle, and I felt like I might be in for a long day. It didn’t start affecting me for a while, but by kilometer 16, I was starting to feel muscles seize up in my hip in reaction to the pain.
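As a quick check of the pacing arithmetic, here is a small Python sketch that projects a finishing time from a constant per-kilometer pace. The function name `finish_time` is purely illustrative, and the projection assumes a perfectly even pace over the official 42.195 km, which no real race delivers.

```python
# Illustrative helper (hypothetical name): project a finishing time from an even pace.
MARATHON_KM = 42.195


def finish_time(pace_min: int, pace_sec: int, distance_km: float = MARATHON_KM) -> str:
    """Return the projected time as H:MM:SS, assuming a constant pace per kilometer."""
    total_seconds = round((pace_min * 60 + pace_sec) * distance_km)
    hours, rest = divmod(total_seconds, 3600)
    minutes, seconds = divmod(rest, 60)
    return f"{hours}:{minutes:02d}:{seconds:02d}"


print(finish_time(4, 40))                   # full distance at 4'40/km -> 3:16:55
print(finish_time(4, 40, MARATHON_KM / 2))  # half-way split at 4'40/km -> 1:38:27
```

At 4'40 per kilometer the projection lands a few minutes inside 3h20, and a half-way split of 1h38'55 is within half a minute of the even-pace figure.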

First half completed on schedule, 1h38’55, and I was feeling pretty good. Not long afterwards, every step was getting painful. Around kilometer 26, I decided (or was my body deciding for me?) to ease off on the pace a little and I started running kilometers at 4’50 to 5′.

They talk about the wall, but you don’t know what they mean until you hit it. Around kilometer 32, I found out. At first, I welcomed the feeling of heavy legs – it drowned out the pain from my hip, and here was a familiar sensation I thought I could manage. But as the kilometers wore on, and my pace dropped, I was having a harder and harder time putting one foot in front of the other. Starting again after walking across a water stop at kilometers 33 and 38 was hard – it was pure will that got me going again. My pace was slipping – from 5′ to 5’30 – one kilometer I ran in 6′. It looked like I was barely going to finish in 3h30, if I made it to the end at all.

Then a club-mate who was on a slower pace caught up to me, tapped me on the shoulder, and said “Hang on to me, we’ll finish together” (“accroche toi, on termine ensemble”). A life-saver. Manna from heaven. I picked up speed to match him – if only for 100m. After that, I said to myself, I’ll try to keep this up for another kilometer. When we passed the marker for 40k, I said I’d make it to 41 with him, and let him off for the last straight. And when we got to the final straight, I summoned up everything I had left to go for the last 1200m.

In the end, I covered those last 3200m in an average of 4’35 per kilometer – which just goes to show that those 5km when I was feeling sorry for myself were more mental blockage than anything else, and that I was able to overcome my body screaming out at me to stop.

The record will show that I ran 3h26’33 for my first marathon, but that doesn’t come close to telling the story.

Afterwards, I got a massage, drank a lot of water, ate some banana, and, feeling emptied & drained, a wave of emotion overcame me when I realised what I’d done.

Congratulations to the other first-time marathon runners who ran with me yesterday, and thank you Paco, I’ll never forget that you got me to the end of my first marathon.

Update: The marathon organisers had a video camera recording everyone’s arrival during the race. I discovered this afterwards, otherwise I might have been slightly more restrained after crossing the line.

You can see me arriving here, and Paco, who arrived a few seconds after me, here – for the extended sound-track.

May 7, 2009

community, freesoftware, gnome, maemo

A few weeks ago I pointed out some similarities between community software projects and critical mass. After watching Chelsea-Barcelona last night – an entertaining match for many of the wrong reasons and a few of the right ones – I wanted to share another analogy that could perhaps be useful in analysing free software projects. What can we learn from football clubs?

Before you roll your eyes, hear me out for a second. I’m a firm believer that building software is just like building anything else. And free software communities share lots of similarities with other communities. And football clubs are big communities of people with shared passions.

Football clubs share quite a few features with software development. Like with free software, there are different degrees of involvement: the star players and managers on the field, the back-room staff, physiotherapists, trainers and administrators, the business development and marketing people who help grease the wheels and make the club profitable, and then the supporters. If we look at the star players, they are often somewhat mercenary – they help their club to win because they get paid for it. Similarly, in many free software projects, many of the core developers are hired to develop – this doesn’t mean they’re not passionate about the project, but Stormy’s presentation about the relationship between money and volunteer efforts, “would you do it again for free?” rings true.

Even within the supporters, you have different levels of involvement – members of supporter clubs and lifetime ticket holders, the people who wouldn’t miss a match unless they were on their death bed, people who are bringing their son to the first match of his life in the big stadium, and the armchair fans, who “follow” their team but never get closer than the television screen.

The importance of the various groups echoes free software projects too – those fanatical supporters may think that the club couldn’t survive without them, and they might be right, but the club needs trainers, back-room staff and players more. In the free software world, we see many passionate users getting “involved” in the community by sending lots of email to mailing lists suggesting improvements, but we need people hacking code, translating the software and in general “doing stuff” more than we need this kind of input. The input is welcome, and without our users the software we produce would be irrelevant, but the contribution of a supporter needs to be weighed against the work done by core developers, the “stars” of our community.

Drogba shares the love

Football clubs breed a club culture, like software projects. For years West Ham was known for having the ‘ardest players in the league, with the ‘ardest supporters – the “West ‘Am Barmy Army”. Other clubs have built a culture of respect for authority – this is particularly true in a sport like rugby. More and more, the culture in football is one of disrespect for authority. Clubs like Manchester United have gotten away with en masse intimidation of match officials when decisions didn’t go their way. I was ashamed to see players I have admired from afar (John Terry, Didier Drogba, Michael Ballack) show the utmost disrespect for the referee in the heat of the moment. That culture goes right through the club – when supporters see their heroes outraged and aggressive, they get that way too. The referee in question has received death threats today.

Another similarity is the need for a sense of identity and leadership. Football fans walk around adorned in their club’s colours, it gives them a sense of identity, a shared passion. And so do free software developers – and the more obscure the t-shirt you’re wearing the better. “I was at the first GUADEC” to a GNOME hacker is like saying “I was in Istanbul” for a Liverpool supporter.

This is belonging

So – given the similarities – spheres of influence and involvement, with lots of different roles needed to make a successful club, a common culture and identity, what can we learn from football clubs?

A few ideas:

- Recruitment: Football clubs work very very hard to ensure a steady stream of talented individuals coming up through the ranks. They have academies where they grow new talent, scouts, reserve teams and feeder clubs where they keep an eye on promising talent, and they will buy a star away from a competing club based on his reputation and track record.

- Teams have natural lifecycles: When old leaders come to the end of the road, managers often have trouble filling the leadership void. Often, it’s not one player leaving, but a group of friends who have played together for years. Teams have natural lifecycles, but good teams manage to see further ahead, and are constantly looking to renew the team, so that they don’t end up in a situation where they lose 5 or 6 key players in one season.

- Build belonging: Supporters want to show their sense of belonging, and people who don’t have the skillz to be on the field still want to wear their team colours, and share their passion for the team. Merchandising is one way to do that, but not the only way. We should look at the way clubs cultivate their user groups and create a passionate following.

- Leaders decide the culture: We owe it to ourselves to systematically grow a nurturing culture at the heart of our project – core developers, thought leaders, anyone who is a figurehead within the project. If we are polite and respectful to each other, considerate of the feelings of those we deal with and sensitive to how our words will be received, our supporters will follow suit.

Are there any other dodgy analogies that we can make with free software development communities? Any other lessons we might be able to draw from this one?

April 20, 2009

freesoftware

In an effort to further perturb the free software market which has been threatening its middleware, application server and database markets recently, the management in Oracle has come up with the masterly stroke of buying Sun Microsystems, and with it a chief competitor, MySQL.

Oracle had already announced their intention to undermine MySQL a few years ago when they bought InnoDB, the ACID database engine used by MySQL, just 18 months before their licensing agreement with the Swedes was due to expire. If you don’t understand why a licensing agreement was needed, you need to think about what licence MySQL was distributing InnoDB under when selling commercial MySQL licences.

My thought at the time was that Oracle could just refuse to renew the licensing arrangement, leaving MySQL without a revenue stream. The problem became moot when Sun bought MySQL, and less critical when MySQL announced they were working on an alternative ACID engine.

Oracle have been trying to undermine free software vendors for a while now, including their launch of Unbreakable Linux to compete directly with Red Hat. Red Hat had previously purchased JBoss, a product competing directly with WebLogic, Oracle’s application server product.

I figure that Oracle will do what some people were suggesting Sun should have done: break Sun up into three different entities: storage, servers and software. They have an interest in keeping the storage unit around – there’s considerable synergy possible there for a database company.

Within the software business unit, they will probably drop OpenOffice.org pretty quickly, and I can’t see them maintaining support for OpenSolaris or GNOME. They will keep selling and supporting Solaris as a cash cow for years to come, in the same way IBM did with the Informix database server some years ago, and Java will be a valuable asset to them.

I don’t think Oracle has a strategic interest in becoming a hardware vendor, however, and I can’t imagine a very big percentage of their client base is using SPARC systems these days, so I don’t see them keeping the server business around for long.

Interesting days ahead in the free software world! From the point of view of MySQL, it will be interesting to see if some ex-MySQL employees take the old GPL code and keep the project going under a different company & different name, or if Drizzle (or one of the other forks) gets critical mass as a community-run project to take over a sizeable chunk of the install base. For Oracle, it will be interesting to see if they start trying to move existing MySQL customers over to Oracle, or if they maintain both products, or if they EOL all support on MySQL altogether and force people into a choice. I imagine that the most likely scenario is that they will maintain support staff, cut development staff, and let the product die a slow and painful death.

April 15, 2009

gimp, inkscape, libre graphics meeting, scribus

The Libre Graphics Meeting fundraiser has been inching higher in recent weeks, and we are very close to the symbolic level of $5,000 raised, with less than a week left in the fundraising drive.

I am sure we will manage to get past $5,000, but I wonder if we will do it today? To help us put on this great conference, and help get some passionate and deserving free software hackers together, you still have time to give to the campaign. (Update 2019-03-29: Pledgie is no more – for an explanation why, read this).

Thanks very much to the great Free Art and Free Culture community out there for your support!

Update: Less than 2 hours after posting, Mark Wielaard pushed us over the edge with a donation bringing us to exactly $5000. Thanks Mark! Next stop: $6000.

April 8, 2009

community, freesoftware, gnome

Daniel Chalef and Matthew Aslett responded to my suggestion at OSBC that copyright assignment was unnecessary, and potentially harmful, to building a core community around your project. Daniel wrote that he even got the impression that I thought requesting copyright assignment was “somewhat evil”. This seems like a good opportunity for me to clarify exactly what I think about copyright assignment for free software projects.

First: copyright assignment is usually unnecessary.

Many of the most vibrant and diverse communities around do not have copyright assignment in place. GIMP, GNOME, KDE, Inkscape, Scribus and the Linux kernel all get along just fine without requesting copyright assignment (joint or otherwise) from new contributors.

There are some reasons why copyright assignment might be useful, and Matthew mentions them. Relicencing your software is easier when you own everything, and extremely difficult if you don’t. Defending copyright infringement is potentially easier if there is a single copyright holder. The Linux kernel is pretty much set as GPL v2, because even creating a list of all of the copyright holders would be problematic. Getting their agreement to change licence would be nigh on impossible.

Not quite 100% impossible, though, as Mozilla has shown. The relicencing effort of Mozilla took considerable time and resources, and I’m sure the people involved would be delighted not to have needed to go through it. But it is possible.

There is another reason proponents say that a JCA is useful: client indemnification. I happen to think that this is a straw man. Enterprise has embraced Linux, GNOME, Apache and any number of other projects without the need for indemnification. And those clients who do need indemnification can get it from companies like IBM, Sun, Red Hat and others. Owning all the copyright might give more credibility to your client indemnification, but it’s certainly not necessary.

There is a conflation of issues going on with customer indemnification too. What is more important than the ownership of the code is the origin of the code. I would certainly agree that projects should follow decent due diligence procedures to ensure that a submission is the submitter’s own work, and that he has the right or permission to submit the code under your project’s licence. But this is independent of copyright assignment.

Daniel mentions Mozilla as an example of a non-vendor-led-project requiring copyright assignment – he is mistaken. The Mozilla Committer’s Agreement (pdf) requires a new committer to do due diligence on the origin of code he contributes, and not to commit code which he is not authorised to commit. But they do not require joint copyright assignment. Also note when the agreement gets signed – not on your first patch, but when you are becoming a core committer – when you are getting right to the top of the Mozilla food chain.

Second: Copyright assignment is potentially harmful.

It is right and proper that a new contributor to your project jump through some hoops to learn the ways of the community. Communities are layered according to involvement, and the trust which they earn through their involvement. You don’t give the keys to the office to a new employee on day one. What you do on day one is show someone around, introduce them to everyone, let them know what the values of your community are.

Now, what does someone learn about the values of your community if, once they have gone to the effort to modify the software to add a new feature, had their patch reviewed by your committers and met your coding standards, the very next thing you do is send them a legal form that they need to print, sign, and return (and incidentally, agree with) before you will integrate their code in your project?

The hoops that people should be made to jump through are cultural and technical. Learn the tone, meet the core members, learn how to use the tools, the coding conventions, and familiarise yourself with the vision of the community. The role of community members at this stage is to welcome and teach. The equivalent of showing someone around on the first day.

Every additional difficulty which a new contributor experiences is an additional reason for him to not stick around. If someone doesn’t make the effort to familiarise himself with your community processes and tools, then it’s probably not a big deal if he leaves – he wasn’t a good match for the project. But if someone walks away for another reason, something that you could change, something that you can do away with without changing the nature of the community, then that’s a loss.

Among the most common superfluous barriers to entry that you find in free software projects are complicated build systems or uncommon tools, long delays in having questions answered and patches reviewed, and unnecessary bureaucracy around contributing. A JCA fits squarely into that third category.

In a word, the core principle is: To build a vibrant core developer community independent of your company, have as few barriers to contributing as possible.

There is another issue at play here, one which might not be welcomed by the vendors driving the communities where I think a JCA requirement does the most harm. That issue is trust.

One of the things I said at OSBC during my presentation is that companies aren’t community members – their employees might be. Communities are made up of people, individual personalities, quirks, beliefs. While we often assign human characteristics to companies, companies don’t believe. They don’t have morals. The personality of a company can change with the board of directors.

Luis Villa once wrote “what if the corporate winds change? … At that point, all the community has is the license, and [the company]’s licensing choices … When [the company] actually trusts communities, and signals as such by treating the community as equals […] then the community should (and I think will) trust them back. But not until then.”

Luis touches on an important point. Trust is the currency we live & die by. And companies earn trust by the licencing choices they make. The Apache Software Foundation, Python Software Foundation and Free Software Foundation are community-run non-profits. As well as their licence choices, we also have their by-laws, their membership rules and their history. They are trusted entities. In a fundamental way, assigning or sharing copyright with a non-profit with a healthy governance structure is different from sharing copyright with a company.

There are many cases of companies taking community code and forking commercial versions off it, keeping some code just for themselves. Trolltech, SugarCRM and Digium notably release a commercial version which is different from their GPL edition (Update: Several people have written in to tell me that this is no longer the case with Trolltech, since they were bought by Nokia and Qt was relicenced under the LGPL – it appeared that people felt clarification was necessary, although the original point stands – Trolltech did sell a commercial Qt different from their GPL “community” edition).

There are even cases of companies withdrawing from the community completely and forking commercial-only versions of software which had previously been released under the GPL. A recent example is Novell’s sale of Netmail to Messaging Architects, resulting in the creation of the Bongo project, forked off the last GPL release available. In 2001, Sunspire (since defunct) decided to release future versions of Tuxracer as a commercial game, resulting in the creation of Planet Penguin Racer, among others, off the last GPL version. Xara dipped their toes in the water by releasing most of their core product under the GPL, but decided after a few years that the experiment had failed. Xara Xtreme continues with a community effort to port the rendering engine to Cairo, but to my knowledge, no-one from Xara is working on that effort.

Examples like these show that companies can not be trusted to continue developing the software indefinitely as free software. So as an external developer being asked to sign a JCA, you have to ask yourself the question whether you are prepared to allow the company driving the project the ability to build a commercial product with your code in there. At best, that question constitutes another barrier to entry.

At OSBC, I was pointing out some of the down sides of choices that people are making without even questioning them. JCAs are good for some things, but bad at building a big developer community. What I always say is that you first need to know what you want from your community, and set up the rules appropriately. Nothing is inherently evil in this area, and of course the copyright holder has the right to set the rules of the game. What is important is to be aware of the trade-offs which come from those choices.

To summarise where I stand, copyright assignment or sharing agreements are usually unnecessary, and potentially harmful if you are trying to build a vibrant core developer community, because they make bureaucracy and trust in your company core issues for new contributors. There are situations where a JCA is merited, but this comes at a cost, in terms of the number of external contributors you will attract.

Updates: Most of the comments tended to concentrate on two things which I had said, but not emphasised enough. I have tried to clarify slightly where appropriate in the text. First, Trolltech used to distribute a commercial and a community edition of Qt which were different, but now that it is the Qt Software group in Nokia, this is no longer the case (showing, as it happens, that licencing can change for the better after an acquisition). Second, assigning copyright to a non-profit is, I think, a less controversial proposition for most people because of the extra trust afforded to non-profits through their by-laws, governance structure and not-for-profit status. And it is worth pointing out that KDE e.V. has a voluntary joint copyright assignment for contributors that they encourage people to sign – Aaron Seigo pointed this out. I think it’s a neat way to make future relicencing easier without adding the initial barrier to entry.

April 1, 2009

gnome

Benjamin Otte’s post today asking how decisions get made (in the context of GNOME) made me think of something I remarked last week.

Last Friday, I attended Critical Mass in San Francisco with Jacob Berkman, and had a blast.

I'm forever blowing bubbles...

The observation I made was that Critical Mass’s decision making felt a little bit like GNOME’s.

We all met up around 18h on the Embarcadero, all with a common goal – cycle around for a few hours together. Different people had different motivations – for most it was to have fun and meet friends, for some, to raise awareness of cycling as a means to getting around, for others, a more general statement on lifestyle.

After a while standing around, you could feel the crowd getting antsy – people wanted to get underway, and they were just waiting for someone to show the way. For no particular reason, someone set off, a leader only insofar as he was the first one to start cycling, and we got underway.

After that, things proceeded more or less smoothly, most of the time. But every now and again, at an intersection, the whole thing would just stop. A group at the front would circle to block the intersection, and those of us behind had no choice but to stop, and wait for someone to get us going again.

Eventually, with Jacob, Heewa and Marie-Anne, we went up to the front to avoid getting blocked up like this, and to set the pace a bit.

When we got to the front, we still stopped every now and again to let people catch up and keep the group together, and then set off again. Sometimes we were followed, and sometimes we’d go 50 yards or so and, for no discernible reason, find ourselves leaders without followers – just two guys out taking a walk. At one intersection, we were stopped for fully 20 minutes. On 3 or 4 different occasions, small groups set off to try to get things underway again, and yet they weren’t followed, until finally, as if some secret handshake had been made, we all set off again.

This is the kind of decision making you get when you have a mob with no clearly identified leadership – the direction is set by those who take the lead, and sometimes the ones who take the lead don’t get followed.

It’ll be interesting to see, in the myriad of projects setting direction for the GNOME project at the moment, which group(s) impose their vision and get us cycling on another few blocks, until once again we find ourselves circling an intersection. Will it be Zeitgeist/Mayanna? GNOME Shell? Clutter? Telepathy? GNOME Online Desktop? Or some other small group that captures the imagination of a critical mass of people so that everyone else just follows along?

To be honest, I don’t know – I’m stuck before the intersection, waiting for people to follow somebody, anybody, and let me get moving again.

April 1, 2009

community, gnome, maemo

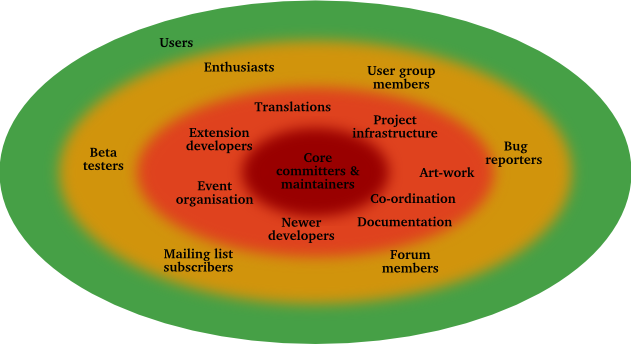

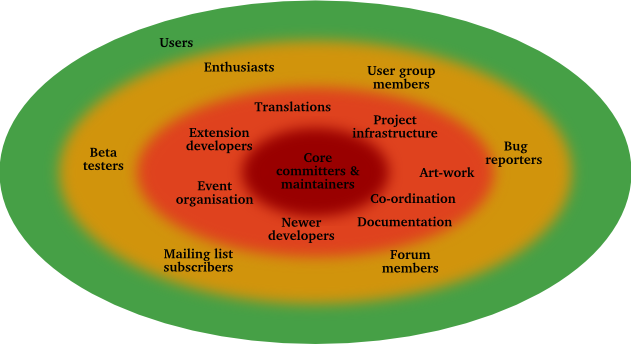

Way back at the Maemo Summit in September 2008, Jay Sullivan put up a slide about spheres of participation in Mozilla. I threw together a rough approximation of what was in it:

Spheres of participation

Communities are like onions or planets, with, at their core, the leaders who set the tone and direction for the community. Around this core, many more people contribute to the project with their time and energy, with code, artwork, translations, or with other skills. And beyond, we have forum members, mailing list participants, people on IRC, the people who gravitate to the community because of its vision and tone, and who make the project immeasurably better with their enthusiasm, but who individually don’t commit as much time, or feel as much ownership of the project, as those I mentioned before. Finally, there is the user community, the people who benefit from the work of the community, which is orders of magnitude bigger.

The boundaries between these levels of involvement are diffuse and ill-defined. People move closer to the center or further away from it as time goes on. A core maintainer gets married, has a child, changes jobs, and suddenly he doesn’t have the time to contribute to the project and influence its direction. A young student discovers the community, and goes from newbie to core committer in a few months.

But the key characteristic to remember is that at the center we have people who have gained the trust of a large part of the community and, significantly, of the other members at the core. Contribution breeds trust, and trust is the currency upon which communities are built.

Last week at OSBC, I heard different companies claim that they had very large communities of developers, but when I asked about this, they meant people contributing translations, signing up to user forums, downloading SDKs, or developing plug-ins or extensions. When I dug a little deeper and asked how many were committers to the core product, the impression I got was always the same: very few. These companies, built up around a niche product, build their business case around being the developer of that product, so they require copyright assignment for the core, and becoming a core committer is difficult, if not impossible, if you don’t work for the company behind the project.

So I ask myself, what is the best way to count the size of your developer community? Do you include the orange ring, including translators, documenters, artists, bug triagers and developers who submit patches, but aren’t yet “core” developers? Just the red ring, including maintainers and committers to core modules? Or would you also include all those volunteers who give of their time to answer questions on forums and on mailing lists, who learn about and evangelise your product?

For a project like GNOME, I think of our community in the largest terms possible. I want someone who is a foundation member, who comes along to show off GNOME at a trade show, but has never developed a GNOME application, to feel like part of the GNOME community. I consider translators and sysadmins, board members and event organisers a vital part of the family. But none of these set the direction for the project – for that, we look to project maintainers, the release team, and the long-time leading lights of the project.

I think that you need to separate out the three distinct groups. Your core developers set the direction of the project. Your developer community might include extension developers, documenters, and other people who improve the project, but who do not set the direction for the core (some companies might call this their “ecosystem”). Finally, we have the contributor community, which includes people signed on to mailing lists and forums.

When considering how viable a community project is, calculate the bus factor for the red bit, your core developers. Is there one core maintainer? One company hiring all of the core developers? Then the risk involved in adopting the project goes up correspondingly. The breadth of your contributor community will not immediately fill the gap left if all the core developers disappear.
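To make the "bus factor" question a little more concrete, here is a minimal sketch, assuming a common heuristic rather than any standard definition: the bus factor is the smallest number of people (or employers) whose combined share of core commits exceeds half of the total. The function names `bus_factor` and `by_employer`, the developer names and the commit counts are all hypothetical illustrations. Grouping by employer captures the "one company hiring all of the core developers" case.

```python
from collections import Counter


def bus_factor(commit_counts, threshold=0.5):
    """Smallest number of contributors whose combined share of commits
    exceeds `threshold` (a heuristic, not a standard definition)."""
    total = sum(commit_counts.values())
    if total == 0:
        return 0
    covered = 0
    # Remove the biggest contributors first, until most of the work is gone.
    for n, count in enumerate(sorted(commit_counts.values(), reverse=True), start=1):
        covered += count
        if covered / total > threshold:
            return n
    return len(commit_counts)


def by_employer(commit_counts, employer_of):
    """Merge commit counts per employer; unaffiliated developers count
    as their own group rather than being lumped together."""
    grouped = Counter()
    for dev, count in commit_counts.items():
        grouped[employer_of.get(dev, dev)] += count
    return dict(grouped)


# Hypothetical core team: commits to core modules over some period.
core = {"alice": 60, "bob": 50, "carol": 50, "dave": 40}
employers = {"alice": "ExampleCorp", "bob": "ExampleCorp", "carol": "ExampleCorp"}

print(bus_factor(core))                          # 2
print(bus_factor(by_employer(core, employers)))  # 1
```

Counted per developer, this hypothetical project looks reasonably robust, but counted per employer its bus factor drops to one, which is exactly the risk described above.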

March 27, 2009

General

As promised during my presentation yesterday, here are the various publications I linked to for information on evaluating community governance patterns (and anti-patterns):

And, for French speakers, a bonus link (although the language is dense and academic):