So, what’s new in Epiphany 3.20?

First off: overlay scrollbars. Because web sites have the ability to style their scrollbars (which you’ve probably noticed on Google sites), WebKit embedders cannot use a normal GtkScrolledWindow to display content; instead, WebKit has to paint the scrollbars itself. Hence, when overlay scrollbars appeared in GTK+ 3.16, WebKit applications were left out. Carlos García Campos spent some time to work on this, and the result speaks for itself (if you fullscreen this video to see it properly):

Overlay scrollbars did not actually require any changes in Epiphany itself — all applications using an up-to-date version of WebKit will immediately benefit — but I mention it here as it’s one of the most noticeable changes. Read about other WebKit improvements, like the new Faster Than Light FTL/B3 JavaScript compilation tier, on Carlos’s blog.

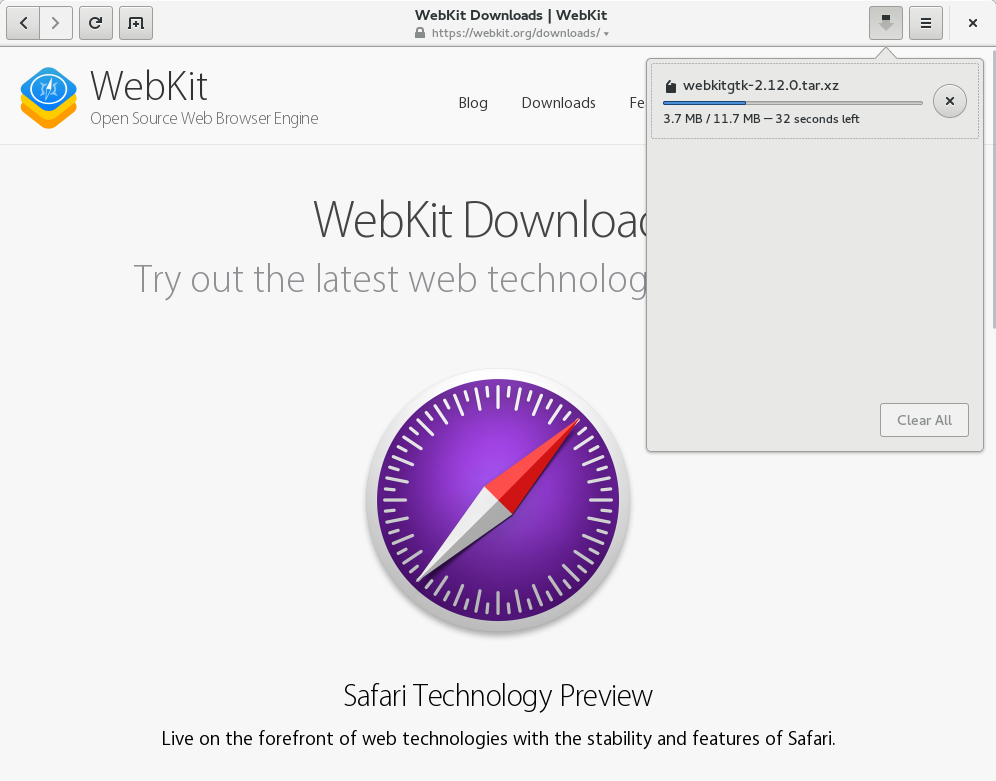

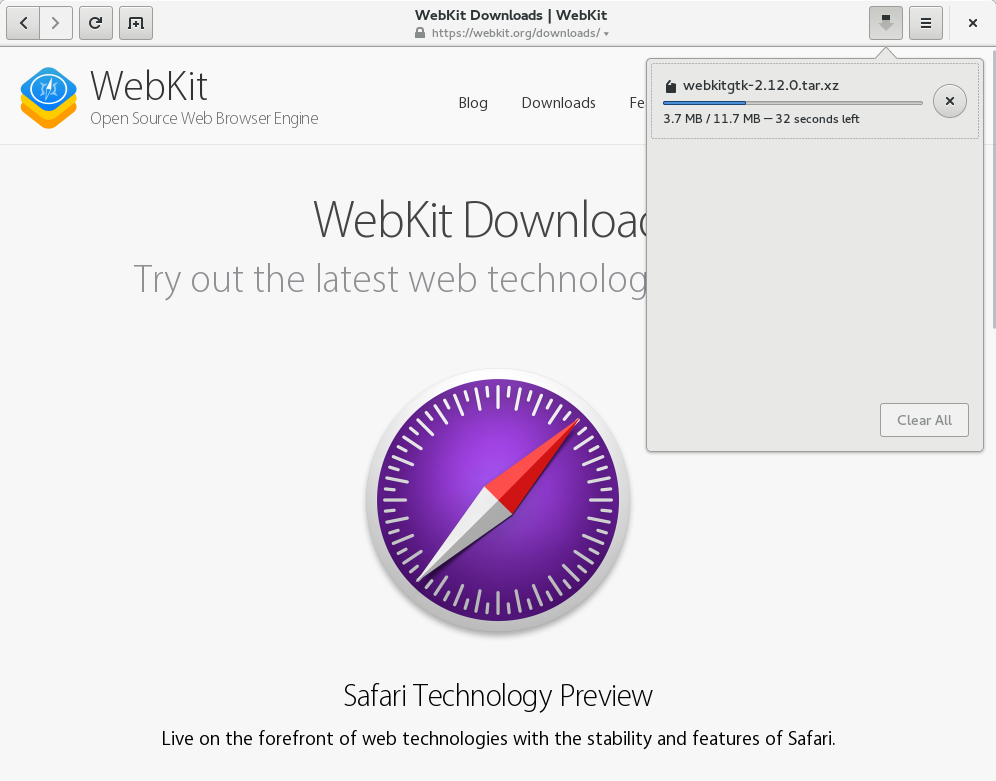

Next up, there is a new downloads manager, also by Carlos García Campos. This replaces the old downloads bar that used to appear at the bottom of the screen:

I flipped the switch in Epiphany to enable WebGL:

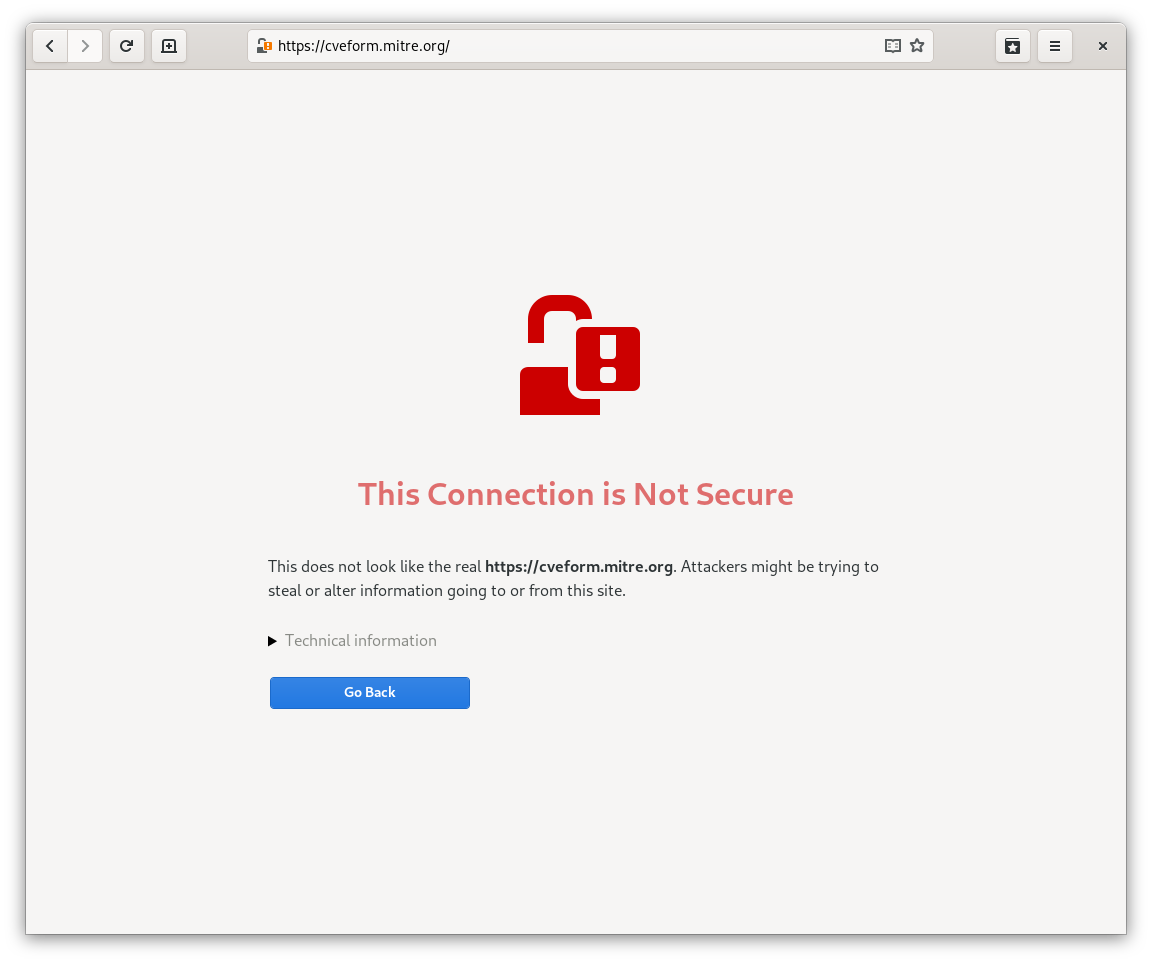

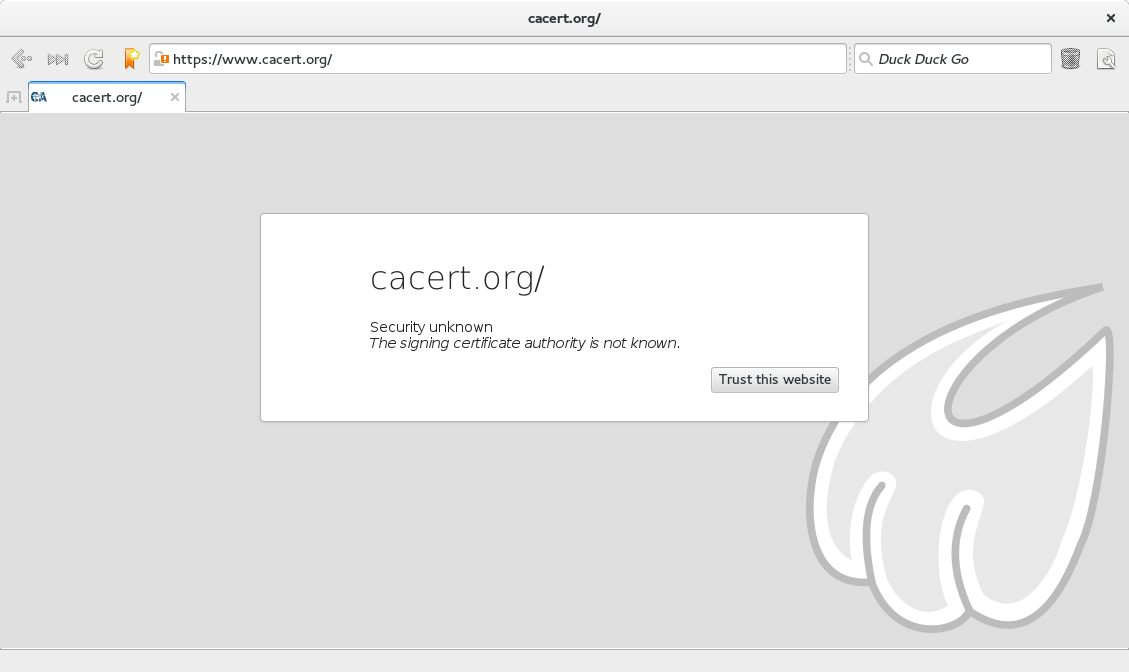

If you watched that video in fullscreen, you might have noticed that page is marked as insecure, even though it doesn’t use HTTPS. Like most browsers, we used to have several confusing security states. Pages with mixed content received a security warning that all users ignored, but pages with no security at all received no such warning. That’s pretty dumb, which is why Firefox and Chrome have been talking about changing this for a year or so now. I went ahead and implemented it. We now have exactly two security states: secure and insecure. If your page loads any content not over HTTPS, it will be marked as insecure. The vast majority of pages will be displayed as insecure, but it’s no less than such sites deserve. I’m not concerned at all about “warning fatigue,” because users are not generally expected to take any action on seeing these warnings. In the future, we will take this further, and use the insecure indicator for sites that use SHA-1 certificates.

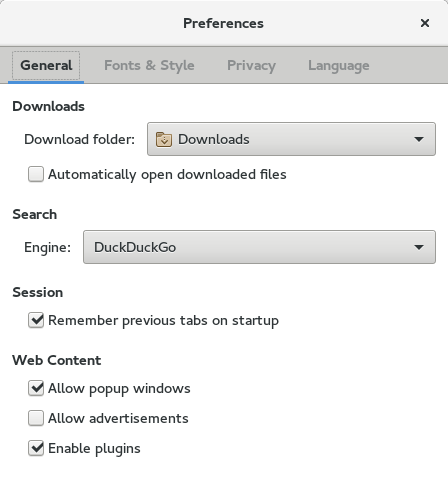

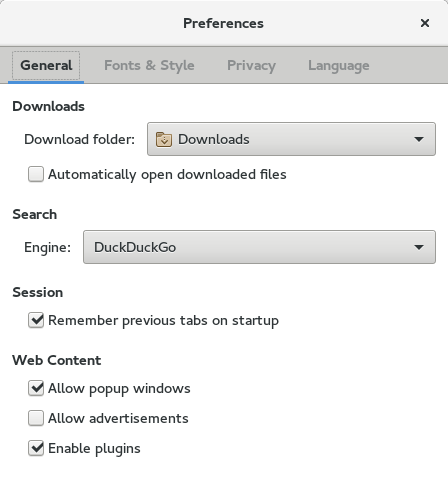

Moving on. By popular request, I exposed the previously-hidden setting to disable session restore in the preferences dialog, as “Remember previous tabs on startup:”

Meanwhile, Carlos worked in both WebKit and Epiphany to greatly improve session restoration. Previously, Epiphany would save the URLs of the pages loaded in each tab, and when started it would load each URL in a new tab, but you wouldn’t have any history for those tabs, for example, and the state of the tab would otherwise be lost. Carlos worked on serializing the WebKit session state and exposing it in the WebKitGTK+ API, allowing us to restore full back/forward history for each tab, plus details like your scroll position on each tab. Thanks to Carlos, we also now make use of this functionality when reopening closed tabs, so your reopened tab will have a full back/forward list of history, and also when opening new tabs, so the new tab will inherit the history of the tab it was opened from (a feature that we had in the past, but lost when we switched to WebKit2).

Interestingly, we found the session restoration was at first too good: it would restore the page really exactly as you last viewed it, without refreshing the content at all. This means that if, for example, you were viewing a page in Bugzilla, then when starting the browser, you would miss any new comments from the last time you loaded the page until you refresh the page manually. This is actually the current behavior in Safari; it’s desirable on iOS to make the browser launch instantly, but questionable for desktop Safari. Carlos decided to always refresh the page content when restoring the session for WebKitGTK+.

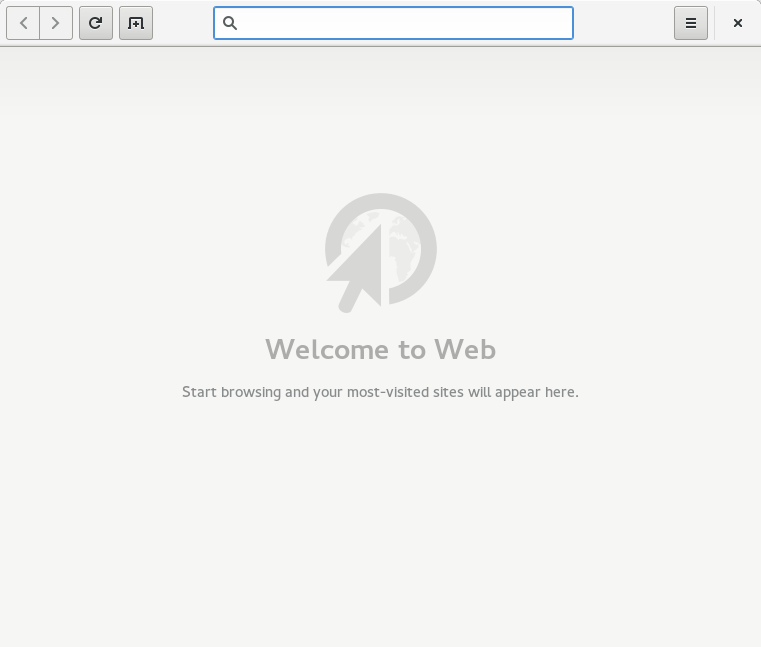

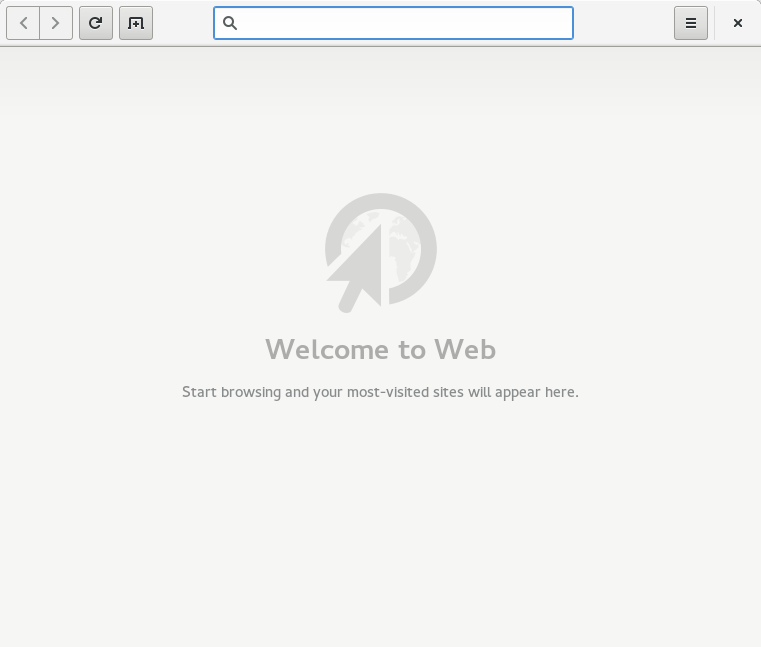

Last, and perhaps least, there’s a new empty state displayed for new users, developed by Lorenzo Tilve and polished up by me, so that we don’t greet new users with a completely empty overview (where your most-visited sites are normally displayed):

That, plus a bundle of the usual bugfixes, significant code cleanups, and internal architectual improvements (e.g. I converted the communication between the UI process and the web process extension to use private D-Bus connections instead of the session bus). The best things have not changed: it still starts up about 5-20 times faster than Firefox in my unscientific testing; I expect you’ll find similar results.

Enjoy!