PipeWire has already made great strides in improving the audio handling situation on Linux, but one of the original goals was to also bring along the video side of the house. In fact, in the first few releases of Fedora Workstation where we shipped PipeWire, we enabled it solely as a tool to handle screen sharing for Wayland and Flatpaks. With PipeWire having stabilized a lot for audio, we feel the time has come to go back to the video side of PipeWire and work to improve the state of the art for video capture handling under Linux. Wim Taymans did a presentation to our team inside Red Hat on the 30th of September talking about the current state of the world and where we need to go to move forward. I thought the information and ideas in his presentation deserved wider distribution, so this blog post builds on that presentation to share it more widely and hopefully rally the community to support us in this endeavour.

Video capture on Linux, which usually means webcams, is currently handled through the v4l2 kernel API. It has served us well for many years, but we believe that just as you don’t write audio applications directly against the ALSA API anymore, you should no longer write video applications directly against the v4l2 kernel API either. With PipeWire we can offer a lot more flexibility, security and power for video handling, just like it does for audio. The v4l2 API is an open/ioctl/mmap/read/write/close based API, meant for a single application to access at a time. There is a library called libv4l2, but nobody uses it because it causes more problems than it solves (no mmap, slow conversions, quirks). But there is no need to rely on the kernel API anymore, as there are GStreamer and PipeWire plugins for v4l2 allowing you to access it using the GStreamer or PipeWire API instead. So our goal is not to replace v4l2, just as it is not our goal to replace ALSA; v4l2 and ALSA are still the kernel driver layer for video and audio.
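To give a feel for how low-level direct v4l2 access is, here is a minimal Python sketch of just the first step: opening a device node and issuing the `VIDIOC_QUERYCAP` ioctl. It assumes the 104-byte `struct v4l2_capability` layout from `linux/videodev2.h` and the generic Linux ioctl number encoding used on x86 and ARM. The helper names and the default `/dev/video0` path are made up for illustration.

```python
import fcntl
import os
import struct

# Assumed layout of struct v4l2_capability (linux/videodev2.h):
# driver[16], card[32], bus_info[32], version, capabilities,
# device_caps, reserved[3]  -> 104 bytes in total.
V4L2_CAPABILITY = struct.Struct("=16s32s32sIII3I")


def ioc_read(type_char, nr, size):
    """Build a read-direction ioctl number (generic Linux _IOR encoding)."""
    ioc_read_dir = 2  # assumption: generic encoding, as on x86 and ARM
    return (ioc_read_dir << 30) | (size << 16) | (ord(type_char) << 8) | nr


# VIDIOC_QUERYCAP is _IOR('V', 0, struct v4l2_capability) in the kernel header.
VIDIOC_QUERYCAP = ioc_read("V", 0, V4L2_CAPABILITY.size)


def query_capabilities(path="/dev/video0"):
    """Open a v4l2 device node and return a few of its capability fields."""
    fd = os.open(path, os.O_RDWR)
    try:
        buf = bytearray(V4L2_CAPABILITY.size)
        fcntl.ioctl(fd, VIDIOC_QUERYCAP, buf)  # the kernel fills buf in place
        fields = V4L2_CAPABILITY.unpack(buf)
    finally:
        os.close(fd)
    return {
        "driver": fields[0].split(b"\0", 1)[0].decode(),
        "card": fields[1].split(b"\0", 1)[0].decode(),
        "capabilities": fields[4],
    }
```

Calling `query_capabilities()` on a machine with a webcam would return the driver and card names. Format negotiation, buffer allocation, mmap and streaming would each need more ioctls on top of this, which is the kind of detail the GStreamer and PipeWire plugins take care of.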

It is also worth considering that new cameras are getting more and more complicated, and thus configuring them is getting more complicated. Driving this change is a new set of cameras on the way, often called MIPI cameras, as they adhere to the API standards set by the MIPI Alliance. Partly driven by this, v4l2 is in active development, with a Codec API addition (stateful/stateless), DMABUF, the request API, and a Media Controller (MC) graph with nodes, ports and links of processing blocks. This means that the threshold for an application developer to use these APIs directly is getting very high, in addition to the aforementioned issues of single application access, the security issues of direct kernel access and so on.

Of course we are not the only ones seeing the growing complexity of cameras as a challenge for developers, and thus libcamera has been developed to make interacting with these cameras easier. Libcamera provides a unified API for setup and capture for cameras, hides the complexity of modern camera devices, and is supported on ChromeOS, Android and Linux.

One way to describe libcamera is as the Mesa of cameras. Libcamera provides hooks to run (out-of-process) vendor extensions, for instance for image processing or enhancement. Using libcamera is considered pretty much a requirement for embedded systems these days, but newer Intel chips will also have IPUs configurable with media controllers.

Libcamera is still under heavy development upstream and does not yet have a stable ABI, but they did add a .so version very recently, which will make packaging in Fedora and elsewhere a lot simpler. In fact we have builds in Fedora ready now. Libcamera also ships with a set of GStreamer plugins, which means you should in theory be able to get, for instance, Cheese working through libcamera (although, as we will go into, we think this is the wrong approach).

Before I go further, an important thing to be aware of is that unlike with ALSA, where PipeWire can provide a virtual ALSA device for backwards compatibility with older applications using the ALSA API directly, there is no such option possible for v4l2. So application developers will need to port to something new here, be that libcamera or PipeWire. So what do we feel is the right way forward?

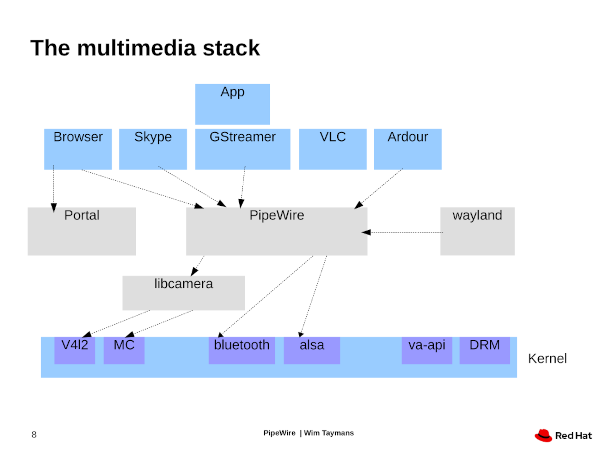

How we envision the Linux multimedia stack going forward

Above you see an illustration of how we believe the stack should look going forward. If you made this drawing of the current state, then thanks to our backwards compatibility with ALSA, PulseAudio and Jack, all the applications would be pointing at PipeWire for their audio handling like they are in the illustration above, but the video handling from most applications would be pointing directly at v4l2. At the same time we don’t want applications to port to libcamera either, as it doesn’t offer a lot of the flexibility that using PipeWire will; instead, what we propose is that all applications target PipeWire in combination with the video camera portal API. Be aware that the video portal is not an alternative to or an abstraction of the PipeWire API; it is just a way to set up the connection to PipeWire that has the added bonus of working if your application is shipped as a Flatpak or another type of desktop container. PipeWire would then be in charge of talking to libcamera or v4l2 for video, just like PipeWire is in charge of talking with ALSA on the audio side. Having PipeWire be the central hub means we get a lot of the same advantages for video that we get for audio. For instance, as the application developer you interact with PipeWire regardless of whether what you want is a screen capture, a camera feed or a video being played back. Multiple applications can share the same camera, and at the same time there is security in place to prevent the camera from being used without your knowledge to spy on you. We can also have patchbay applications that support video pipelines and not just audio, like Carla provides for Jack applications. To be clear, this feature will not come for ‘free’ from Jack patchbays since Jack only does audio, but hopefully new PipeWire patchbays like Helvum can add video support.

So what about GStreamer, you might ask. GStreamer is a great way to write multimedia applications and we strongly recommend it, but we do not recommend that your GStreamer application use the v4l2 or libcamera plugins; instead we recommend that you use the PipeWire plugins. This is of course a little different from the audio side, where PipeWire supports the PulseAudio and Jack APIs and thus you don’t need to port, but by targeting the PipeWire plugins in GStreamer your GStreamer application will get the full PipeWire feature set.

So what is our plan of action?

We will start putting the pieces in place for this step by step in Fedora Workstation. We have already started on this by working on the libcamera support in PipeWire and packaging libcamera for Fedora. We will set it up so that PipeWire has an option to switch between v4l2 and libcamera, so that most users can keep using v4l2 through PipeWire for the time being, while we work with upstream and the community to mature libcamera and its PipeWire backend. We will also enable device discovery for PipeWire.

We are also working on maturing the GStreamer elements for PipeWire for the video capture use case, as we expect a lot of application developers will just be using GStreamer as opposed to targeting PipeWire directly. We will start with Cheese as our initial testbed for this work, as it is a fairly simple application, using it as a proof of concept for camera access through PipeWire. We are still trying to decide whether to make Cheese speak directly with PipeWire or have it talk to PipeWire through the pipewiresrc GStreamer plugin; both have their pros and cons in the context of testing and verifying this.

We will also start working with the Chromium and Firefox projects to have them use the Camera portal and PipeWire for camera support, just like we worked with them through WebRTC on the screen sharing support using PipeWire.

There are a few major items we are still trying to decide upon in terms of the interaction between PipeWire and the Camera portal API. It would be tempting to see if we can hide the Camera portal API behind the PipeWire API, or failing that at least hide it for people using the GStreamer plugin. That way all applications would get the portal support for free when porting to GStreamer, instead of having to adopt the Camera portal API as a second step. On the other hand, you need to set up the screen sharing portal yourself, so it would probably make things more consistent if we left it to application developers to do the same for camera access.

What do we want from the community here?

The first step is simply to help us with testing as we roll this out in Fedora Workstation and Cheese. While libcamera was written with MIPI cameras as the motivation, all webcams are meant to work through it, and thus all webcams are meant to work with PipeWire using the libcamera backend. At the moment that is not the case, and thus community testing and feedback is critical for helping us and the libcamera community to mature libcamera. We hope that by allowing you to easily configure PipeWire to use the libcamera backend (and switch back after you are done testing) we can get a lot of you to test and let us know what cameras are not working well yet.

A little further down the road, please start planning to move any application you maintain or contribute to away from the v4l2 API and towards PipeWire. If your application is a GStreamer application, the transition from the v4l2 plugins to the PipeWire plugins should be fairly simple, but beyond that you should familiarize yourself with the Camera portal API and the PipeWire API for accessing cameras.
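For a GStreamer application, the change can be as small as swapping the source element. The sketch below builds `gst-launch-1.0` style pipeline descriptions for a simple camera preview. `v4l2src`, `pipewiresrc`, `videoconvert` and `autovideosink` are existing GStreamer elements, while the `capture_pipeline` helper and the choice of sink are assumptions made for illustration.

```python
def capture_pipeline(source="pipewiresrc", sink="autovideosink"):
    """Return a gst-launch style description for a simple camera preview."""
    # videoconvert sits between source and sink to negotiate pixel formats.
    return " ! ".join([source, "videoconvert", sink])


# Before: the application talks to the kernel device through v4l2src.
old_pipeline = capture_pipeline("v4l2src")

# After: the same pipeline, with PipeWire providing the camera stream.
new_pipeline = capture_pipeline("pipewiresrc")

print(old_pipeline)
print(new_pipeline)
```

Either string can be passed to `gst-launch-1.0` on the command line or to `Gst.parse_launch()` inside an application. A sandboxed application would additionally need to request camera access through the Camera portal before the PipeWire source can deliver frames.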

For further news and information on PipeWire, follow our @PipeWireP Twitter account, and for general news and information about what we are doing in Fedora Workstation, make sure to follow me on Twitter @cfkschaller.

This is extremely well timed, as I had read some questions on sandboxing etc. for this and asked others recently.

Will there be ways to prevent video device access outside the use of portals?

It may not be suitable for workstation yet, but that could be a key feature on something like silverblue – knowledge that the only access to the device is through the portal and anything not using it is blocked.

I’m suffering a lot with screencasting on Wayland with a Radeon GPU and Fedora 34 Workstation.

I hope to see DMA-BUF supported for Radeon GPUs soon.

Enabling DMA-BUF on all the major GPU architectures is definitely on our priority list, working with relevant parties to make it happen.

The reason why it was disabled for AMD in Gnome was that many applications did not handle DMA-BUF correctly but still advertised support for it. This should be fixed by now for all major applications, notably WebRTC based ones like Firefox and Chromium (very recently, not in stable yet). Thus it’s pretty safe to say we can re-enable DMA-BUF for all hardware in Gnome 42.

I don’t quite understand how dmabuf can help you here. The dmabuf is located on the graphics card, but you need to download the screen data to RAM, encode it and send it over the network (WebRTC).

The dmabuf option here just moves the GPU -> CPU transfer step from the compositor to the client.

It may help when HW encoding is used (screen frames are encoded on GPU) but I’m not aware of any WebRTC client which does it.

hello,

I’m currently using OpenCV (itself using v4l2 or maybe GStreamer) for video, and SDL + ALSA (in the background) for audio and video recording.

Would you mind adding a few words about portability with PipeWire?

FYI, my problem is that I write code on Linux and also provide a Windows version. That’s the reason why I’m using SDL2. (see: https://framagit.org/ericb/miniDart).

Last, thanks a lot for speaking about video + audio on Linux, because it was a total nightmare (e.g. have a look at https://github.com/ebachard/Linux_Alsa_Audio_Record )

Best regards,

Eric Bachar

If you want to improve the stack, then start with pipewire itself. It has been a net negative for me and many others, and I’d gladly switch back to pulseaudio given the choice. It has brought zero improvement to sound quality or stability, but is a bloated resource hog. It’s currently using over 1GB of RAM and around 15% of my CPU cycles. There is literally no excuse for a sound server to be doing that. I despise everything about it. It’s a backwards step for Linux. Either fix its problems or can the project and go back to something that works properly.

You’ve checked that bugs have been logged (or started your own) for your issues on the freedesktop issue tracker right? https://gitlab.freedesktop.org/pipewire/pipewire/-/issues

Sad to hear things are not working out for you, but if you want to switch back to PulseAudio you are aware that you can just run this command right?

“sudo dnf swap --allowerasing pipewire-pulseaudio pulseaudio”

@Tet

What is the point of a comment like this? Unless you reported any of your claimed problems, which sound extreme to say the least, it’s completely pointless. No action from Fedora, Red Hat or any of the PipeWire developers will come out of it. Please create and point to an actual bug report for these issues in PipeWire.

Yup… it does not work for me either when used under VirtualBox on macOS or Windows 10. It got better, but not even a YouTube video plays without noise and delays. :D… but moving in the right direction.

Plot twist; Carla can do video https://nextcloud.falktx.com/s/LFDBEgBPZw6rxGs though that’s older and has more a basis in JACK https://github.com/falkTX/Carla/commit/25f16982b36ff13e9d94c5e6bbfaf72b29b3d0e8

What is the plan for supporting non-v4l2 MIPI devices? For instance, many SoC vendors (e.g. Ambarella, HiSilicon, etc.) commonly used in embedded camera products do not provide v4l2 drivers but rather their own driver with a custom set of ioctl calls and DMA resources.

While libcamera doesn’t require V4L2 (there’s a hard dependency on the media controller API for device enumeration, but even that could be lifted if needed), we require kernel drivers to be merged in the mainline kernel. As V4L2 is today the only upstream Linux camera kernel API, it’s today a hard requirement. The way forward is to get Ambarella and other vendors to be better Linux kernel community citizens and contribute code upstream.

With PipeWire, you can write your own plugin to “insert” a camera into the system. So you can certainly write it over any proprietary API. For example, I intend to write one to be able to add RTSP cameras as if they were native cameras to applications.

Great to hear this! Concerning Firefox and Chromium (WebRTC): Just wanted to let you know that I did some initial work on that in https://bugzilla.mozilla.org/show_bug.cgi?id=1724900 which, once ready, could be upstreamed (https://bugs.chromium.org/p/webrtc/issues/detail?id=13177). Please ping me there if you also start something so we don’t duplicate any work :)