Back a couple of months ago, I was given the opportunity to talk at LAS about search in GNOME, and the ideas floating around to improve it. Part of the talk was dedicated to touting the benefits of TinySPARQL as the base for filesystem search, and how in solving the crazy LocalSearch usecases we ended up with a very versatile tool for managing application data, either application-private or shared with other peers.

It was no one else than our (then) future ED in a trench coat (I figure!) who forced my hand in the question round into teasing an application I had been playing with, to showcase how TinySPARQL should be used in modern applications. Now, after finally having spent some more time on it, I feel it’s up to a decent enough level of polish to introduce it more formally.

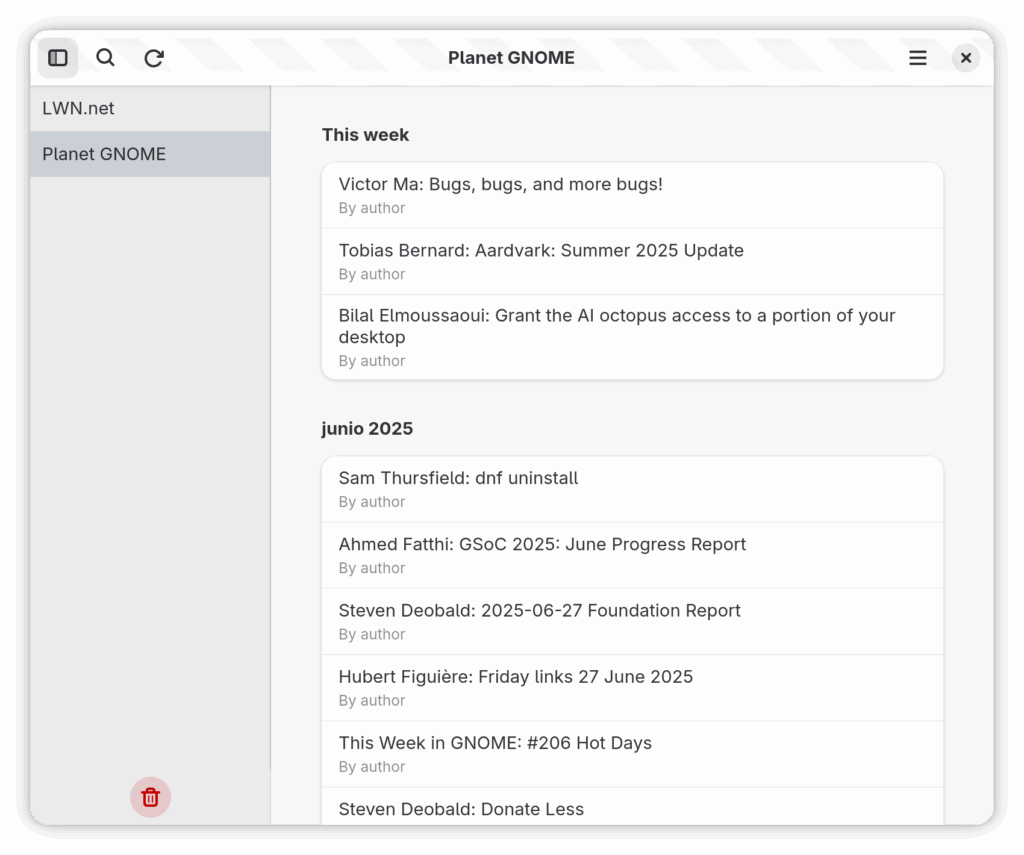

Behold Rissole

Rissole is a simple RSS feed reader, intended let you read articles in a distraction free way, and to keep them all for posterity. It also sports a extremely responsive full-text search over all those articles, even on huge data sets. It is built as a flatpak, you can ATM download it from CI to try it, meanwhile it reaches flathub and GNOME Circle (?). Your contributions are welcome!

So, let’s break down how it works, and what does TinySPARQL bring to the table.

Structuring the data

The first thing a database needs is a definition about how the data is structured. TinySPARQL is strongly based on RDF principles, and depends on RDF Schema for these data definitions. You have the internet at your fingertips to read more about these, but the gist is that it allows the declaration of data in a object-oriented manner, with classes and inheritance:

mfo:FeedMessage a rdfs:Class ;

rdfs:subClassOf mfo:FeedElement .

mfo:Enclosure a rdfs:Class ;

rdfs:subClassOf mfo:FeedElement .

One can declare properties on these classes:

mfo:downloadedTime a rdf:Property ;

nrl:maxCardinality 1 ;

rdfs:domain mfo:FeedMessage ;

rdfs:range xsd:dateTime .

And make some of these properties point to other entities of specific (sub)types, this is the key that makes TinySPARQL a graph database:

mfo:enclosureList a rdf:Property ;

rdfs:domain mfo:FeedMessage ;

rdfs:range mfo:Enclosure .

In practical terms, a database needs some guidance on what data access patterns are most expected. Being a RSS reader, sorting things by date will be prominent, and we want full-text search on content. So we declare it on these properties:

nie:plainTextContent a rdf:Property ;

nrl:maxCardinality 1 ;

rdfs:domain nie:InformationElement ;

rdfs:range xsd:string ;

nrl:fulltextIndexed true .

nie:contentLastModified a rdf:Property ;

nrl:maxCardinality 1 ;

nrl:indexed true ;

rdfs:subPropertyOf nie:informationElementDate ;

rdfs:domain nie:InformationElement ;

rdfs:range xsd:dateTime .

The full set of definitions will declare what is permitted for the database to contain, the class hierarchy and their properties, how do resources of a specific class interrelate with other classes… In essence, how the information graph is allowed to grow. This is its ontology (semi-literally, its view of the world, whoooah duude). You can read more in detail how these declarations work at the TinySPARQL documentation.

This information is kept in files separated from code, built in as a GResource in the application binary, and used during initialization to create a database at a location in control of the application:

let mut store_path = glib::user_data_dir();

store_path.push("rissole");

store_path.push("db");

obj.imp()

.connection

.set(tsparql::SparqlConnection::new(

tsparql::SparqlConnectionFlags::NONE,

Some(&gio::File::for_path(store_path)),

Some(&gio::File::for_uri(

"resource:///com/github/garnacho/Rissole/ontology",

)),

gio::Cancellable::NONE,

)?)

.unwrap();

So there’s a first advantage right here, compared to other libraries and approaches: The application only has to declare this ontology without much (or any) further code to support supporting code, compare to going through the design/normalization steps for your database design, and. having to CREATE TABLE your way to it with SQLite.

Handling structure updates

If you are developing an application that needs to store a non-trivial amount of data. It often comes as a second thought how to deal with new data being necessary, stored data being no longer necessary, and other post-deployment data/schema migrations. Rarely things come up exactly right at the first try.

With few documented exceptions, TinySPARQL is able to handle these changes to the database structure by itself, applying the necessary changes to convert a pre-existing database into the new format declared by the application. This also happens at initialization time, from the application-provided ontology.

But of course, besides the data structure, there might also be data content that might some kind of conversion or migration, this is where an application might still need some supporting code. Even then, SPARQL offers the necessary syntax to convert data, from small to big, from minor to radical changes. With the CONSTRUCT query form, you can generate any RDF graph from any other RDF graph.

For Rissole, I’ve gone with a subset of the Nepomuk ontology, which does contain much embedded knowledge about the best ways to lay data in a graph database. As such I don’t expect major changes or gotchas in the data, but this remains a possibility for the future, e.g. if we were to move to another emerging ontology, or any other less radical data migrations that might crop up.

So here’s the second advantage, compare to having to ALTER TABLE your way to new database schemas, or handle data migration for each individual table, and ensuring you will not paint yourself into a corner in the future.

Querying data

Now we have a database! We can write the queries that will feed the application UI. Of course, the language to write these in is SPARQL, there are plenty of resources over it on the internet, and TinySPARQL has its own tutorial in the documentation.

One feature that sets TinySPARQL apart from other SPARQL engines in terms of developer experience is the support for parameterized values in SPARQL queries, through a little bit of non-standard syntax and the TrackerSparqlStatement API, you can compile SPARQL queries into reusable statements, which can be executed with different arguments, and will compile to an intermediate representation, resulting in faster execution when reused. Statements are also the way to go in terms of security, in order to avoid query injection situations. This is e.g. Rissole (simplified) search query:

SELECT

?urn

?title

{

?urn a mfo:FeedMessage ;

nie:title ?title ;

fts:match ~match .

}

Which allows me to funnel a GtkEntry content right away in the ~match without caring about character escaping or other validation. These queries may also be stored in GResource, and live as separate files in the project tree, and be loaded/compiled early during application startup once, so they are reusable during the rest of the application lifetime:

fn load_statement(&self, query: &str) -> tsparql::SparqlStatement {

let base_path = "/com/github/garnacho/Rissole/queries/";

let stmt = self

.imp()

.connection

.get()

.unwrap()

.load_statement_from_gresource(&(base_path.to_owned() + query), gio::Cancellable::NONE)

.unwrap()

.expect(&format!("Failed to load {}", query));

stmt

}

...

// Pre-loading an statement

obj.imp()

.search_entries

.set(obj.load_statement("search_entries.rq"))

.unwrap();

...

// Running a search

pub fn search(&self, search_terms: &str) -> tsparql::SparqlCursor {

let stmt = self.imp().search_entries.get().unwrap();

stmt.bind_string("match", search_terms);

stmt.execute(gio::Cancellable::NONE).unwrap()

}

This data is of course all introspectable with the gresource CLI tool, and I can run these queries from a file using the tinysparql query CLI command, either on the application database itself, or on a separate in-memory testing database created through e.g.tinysparql endpoint --ontology-path ./src/ontology --dbus-service=a.b.c.

Here’s the third advantage for application development. Queries are 100% separate from code, introspectable, and able to be run standalone for testing, while the code remains highly semantic.

Inserting and updating data

When inserting data, we have two major pieces of API to help with the task, each with their own strengths:

These can be either executed standalone, or combined/accumulated in a TrackerBatch for a transactional behavior. Batches do improve performance by clustering writes to the database, and database stability by making these changes either succeed or fail atomically (TinySPARQL is fully ACID).

This interaction is the most application dependent (concretely, retrieving the data to insert to the database), but here is some links to Rissole code for reference, using TrackerResource to store RSS feed data, and using TrackerSparqlStatement to delete RSS feeds.

And here is the fourth advantage for your application, an async friendly mechanism to efficiently manage large amounts of data, ready for use.

Full-text search

For some reason, there tends to be some magical thinking revolving databases and how these make things fast. And the most damned pattern of all can be typically at the heart of search UIs: substring matching. What feels wonderful during initial development in small datasets soon slows to a crawl in larger ones. See, an index is little more than a tree, you can look up exact items on relatively low big O, lookup by prefix with slightly higher one, and for anything else (substring, suffix) there will be nothing to do but a linear search. Sure, the database engine will comply, however painstakingly.

What makes full-text search fundamentally different? This is a specialized index that performs an effort to pre-tokenize the text, so that each parsed word and term is represented individually, and can be looked up independently (either prefix or exact matches). At the expense of a slightly higher insertion cost (i.e. the usually scarce operation), this provides response times measured in milliseconds when searching for terms (i.e. the usual operation) regardless of their position in the text, even on really large data sets. Of course this is a gross simplification (SQLite has extensive documentation about the details), but I hopefully shined enough light into why full-text search can make things fast in a way a traditional index can not.

I am largely parroting a SQLite feature here, and yes, this might also be available for you if using SQLite, but the fact that I’ve already taught you in this post how to use it in TinySPARQL (declaring nrl:fulltextIndexed on the searchable properties, using fts:match in queries to match on them) does again have quite some contrast with rolling your own database creation code. So here’s another advantage.

Backups and other bulk operations

After you got your data stored, is it enshrined? Are there forward plans to get the data back again out of there? Is the backup strategy cp?

TinySPARQL (and the SPARQL/RDF combo at its core) boldly says no. Data is fully introspectable, and the query language is powerful enough to extract even full data dumps at a single query, if you wished so. This is for example available through the command line with tinysparql export and tinysparql import, the full database content can be serialized into any of the supported RDF formats, and can be either post-processed or snapshot into other SPARQL databases from there.

A “small” detail I have not mentioned so far is the (optional) major network transparency of TinySPARQL, since for the most part it is irrelevant on usecases like Rissole. Coming from web standards, of course network awareness is a big component of SPARQL. In TinySPARQL, creating an endpoint to publicly access a database is an explicit choice of made through API, and so it is possible to access other endpoints either from a dedicated connection or by extending your local queries. Why do I bring this up here? I talked at Guadec 2023 about Emergence, a local-first oriented data synchronization mechanism between devices owned by the same user. Network transparency sits at the heart of this mechanism, which could make Rissole able to synchronize data between devices, or any other application that made use of it.

And this is the last advantage I’ll bring up today, a solid standards-based forward plan to the stored data.

Closing note

If you develop an application that does need to store data, future you might appreciate some forward thinking on how to handle a lifetime’s worth of it. More artisan solutions like SQLite or file-based storage might set you up quickly for other funnier development and thus be a temptation, but will likely decrease rapidly in performance unless you know very well what you are doing, and will certainly increase your project’s technical debt over time.

TinySPARQL wraps all major advantages of SQLite with a versatile data model and query language strongly based on open standards. The degree of separation between the data model and the code makes both neater and more easily testable. And it’s got forward plans in terms of future data changes, backups, and migrations.

As everything is always subject to improvement, there’s some things that could do for a better developer experience:

- Query and schema definition files could be linted/validated as a preprocess step when embedding in a GResource, just as we validate GtkBuilder files

- TinySPARQL’s builtin web IDE started during last year’s GSoC should move forward, so we have an alternative to the CLI

- There could be graphical ways to visualize and edit these schemas

- Similar thing, but to visually browse a database content

- I would not dislike if some of these were implemented in/tied to GNOME Builder

- It would be nice to have a more direct way to funnel the results of a SPARQL query into UI. Sadly, the GListModel interface API mandates random access and does not play nice with cursor-alike APIs as it is common with databases. This at least excludes making TrackerSparqlCursor just implement GListModel.

- A more streamlined website to teach and showcase these benefits, currently tinysparql.org points to the developer documentation (extensive otoh, but does not make a great landing page).

Even though the developer experience would be more buttered up, there’s a solid core that is already a leap compared to other more artisan solutions, in a few areas. I would also like to point out that Rissole is not the first instance here, there are also Polari and Health using TinySPARQL databases this way, and mostly up-to-date in these best practices. Rissole is just my shiny new excuse to talk about this in detail, other application developers might appreciate the resource, and I’d wish it became one of many, so Emergence finally has a worthwhile purpose.

Last but not least, I would like to thank Kristi, Anisa, and all organizers at LAS for a great conference.