A month ago, GNOME was hit by a patent troll. We’re fighting, but need some money to fund the legal defense and counterclaim. I just donated, and if you use or develop free software you should too.

Synaptics CX Audio Support

A couple of weeks ago, Synaptics (who now own Conexant) sent me 22,000+ lines of LGPLv2+ licensed C++ that was capable of updating the firmware of all the CXxxxx audio devices that exist in various laptops and peripherals. Most of last week was spent reading the code and refactoring it into a CX audio plugin for fwupd. There were a few things I could do to reduce the code size considerably:

- Use the abstractions shared with all the other plugins, e.g. SREC file format processing, data chunking and low-level USB HID

- Drop support for hardware families which are no longer supported and not likely to receive updates

- Remove the layers of abstraction and the macros-of-macros-of-macros so common in a codebase whose age is measured in decades

- Use helper objects in GLib and GObject rather than having to create everything from scratch
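To give a flavour of the shared helpers mentioned in the first bullet, here is a minimal Python sketch of SREC parsing and data chunking. It is not fwupd’s implementation (which is C), and `parse_srec_line`, `load_image` and `chunk` are hypothetical names. It follows the standard Motorola S-record layout: a type digit, a count byte, a 2-, 3- or 4-byte address, the data, and a ones’-complement checksum.

```python
from dataclasses import dataclass
from typing import List, Tuple

# Address field width (in bytes) for each S-record type.
ADDR_BYTES = {"0": 2, "1": 2, "2": 3, "3": 4, "5": 2, "6": 3, "7": 4, "8": 3, "9": 2}


@dataclass
class Record:
    kind: str  # record type digit, e.g. "1" for an S1 data record
    addr: int
    data: bytes


def parse_srec_line(line: str) -> Record:
    """Parse one Motorola S-record and verify its checksum."""
    line = line.strip()
    if len(line) < 4 or line[0] != "S" or line[1] not in ADDR_BYTES:
        raise ValueError(f"not an S-record: {line!r}")
    raw = bytes.fromhex(line[2:])
    width = ADDR_BYTES[line[1]]
    # The count byte covers the address, data and checksum bytes.
    if raw[0] != len(raw) - 1 or len(raw) < width + 2:
        raise ValueError("byte count mismatch")
    # Checksum: ones' complement of the low byte of the sum of every
    # byte from the count up to (but not including) the checksum.
    if ((~sum(raw[:-1])) & 0xFF) != raw[-1]:
        raise ValueError("bad checksum")
    addr = int.from_bytes(raw[1 : 1 + width], "big")
    return Record(line[1], addr, raw[1 + width : -1])


def load_image(text: str) -> Tuple[int, bytes]:
    """Merge S1/S2/S3 data records into one image, padding gaps with 0xFF."""
    records = [parse_srec_line(ln) for ln in text.splitlines() if ln.strip()]
    data = [r for r in records if r.kind in ("1", "2", "3")]
    if not data:
        raise ValueError("no data records")
    base = min(r.addr for r in data)
    end = max(r.addr + len(r.data) for r in data)
    image = bytearray(b"\xff" * (end - base))
    for r in data:
        off = r.addr - base
        image[off : off + len(r.data)] = r.data
    return base, bytes(image)


def chunk(base: int, image: bytes, size: int) -> List[Tuple[int, bytes]]:
    """Split an image into (address, payload) packets of at most `size` bytes."""
    return [(base + off, image[off : off + size]) for off in range(0, len(image), size)]
```

The point of sharing helpers like these is that each plugin only has to supply the device-specific routine that writes each `(address, payload)` packet.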

So, after all that we got down to a 1,377-line fwupd plugin, which is a 16x code reduction. It’s broadly comparable in functionality to the 22,000-line code drop, but only works in fwupd as a plugin rather than as a standalone updater. To add support for new hardware to the plugin, all we have to do is add an entry to the quirk file, which tells us which CX family a specific USB VID/PID is using. The rest is auto-detected.
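The quirk-file approach can be sketched like this. The section names, the `Plugin` and `CxFamily` keys and the VID/PID values below are all hypothetical, chosen only to show the idea of mapping a USB VID/PID to a chip family; fwupd’s real quirk keys may differ. The data is parsed as an INI-style keyfile with Python’s `configparser`.

```python
import configparser
from typing import Optional

# Hypothetical quirk data: section names, keys and IDs are made up for illustration.
QUIRKS = r"""
[USB\VID_AAAA&PID_0001]
Plugin = cxaudio
CxFamily = CX2070x

[USB\VID_AAAA&PID_0002]
Plugin = cxaudio
CxFamily = CX2077x
"""


def instance_id(vid: int, pid: int) -> str:
    """Build a USB instance ID string such as USB\\VID_AAAA&PID_0001."""
    return f"USB\\VID_{vid:04X}&PID_{pid:04X}"


def lookup_family(quirks_text: str, vid: int, pid: int) -> Optional[str]:
    """Return the CX family for a VID/PID, or None if no quirk matches."""
    parser = configparser.ConfigParser()
    parser.read_string(quirks_text)
    section = instance_id(vid, pid)
    if not parser.has_section(section):
        return None
    if parser.get(section, "Plugin", fallback=None) != "cxaudio":
        return None
    return parser.get(section, "CxFamily", fallback=None)
```

Everything else (chip revision, memory layout and so on) would then be probed from the device itself rather than listed per model.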

I can’t tell you the OEM or the hardware all this work is being driven by, but eagle-eyed readers will work it out :) In some cases you might see an extra device appear in fwupdmgr get-devices if you’re running the soon-to-be-released fwupd 1.3.2, and hopefully we can get firmware updates for this new device onto the LVFS some time this year.

GNOME Firmware 3.34.0 Release

This morning I tagged the newest fwupd release, 1.3.1. There are a lot of new things in this release and a whole lot of polishing, so I encourage you to read the release notes if this kind of thing interests you.

Anyway, to the point of this post. With the new fwupd 1.3.1 you can now build just the libfwupd library, which makes it easy to build GNOME Firmware (old name: gnome-firmware-updater) in Flathub. I tagged the first official release, 3.34.0, to celebrate the recent GNOME release and to indicate that it’s ready for use by end users. I guess it’s important to note that this is just a random app hacked together by 3 engineers and not something lovingly designed by the official design team. All UX mistakes are my own :)

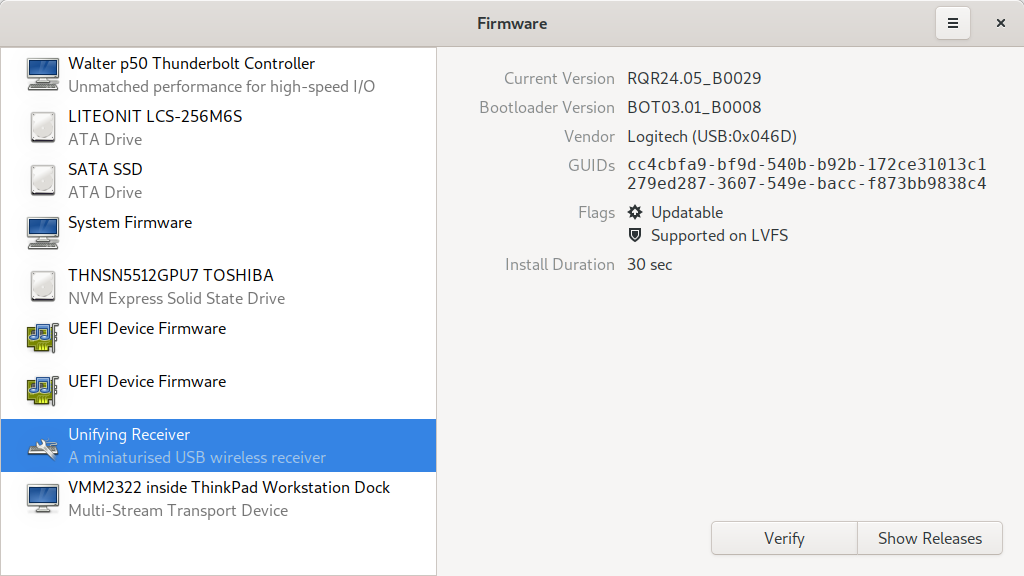

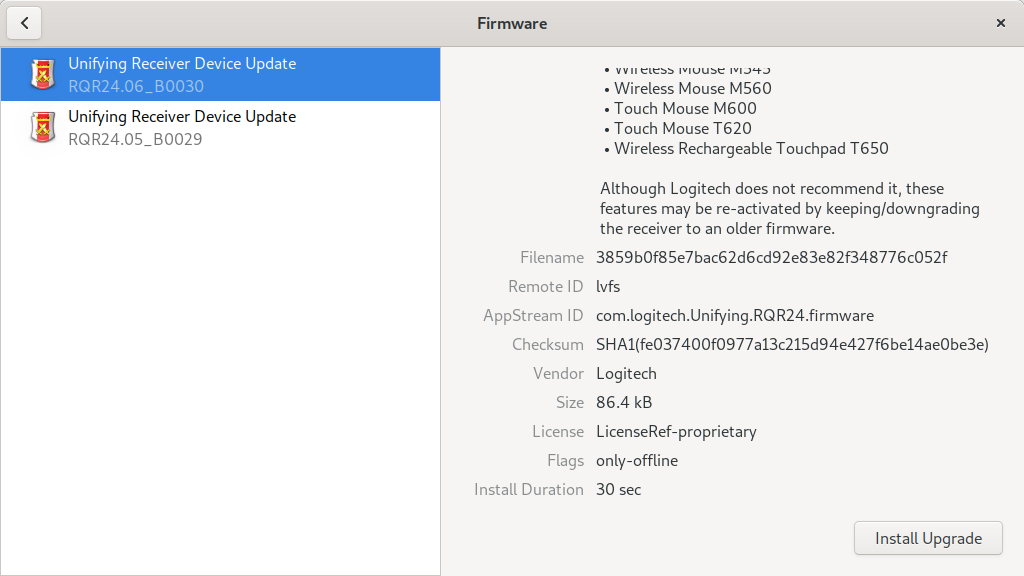

GNOME Firmware is designed to be a not-installed-by-default power-user tool to investigate, upgrade, downgrade and re-install firmware.

GNOME Software will continue to be used for updates as before. Vendor helpdesks can ask users to install GNOME Firmware rather than getting them to look at command line output.

We need to polish up GNOME Firmware going forwards, and add the last few features we need. If this interests you, please send email and I’ll explain what needs doing. We also need translations, although that can perhaps wait until GNOME Firmware moves to GNOME proper, rather than just being a repo in my personal GitLab. If anyone does want to translate it before then, please open merge requests, and be sure to file issues if any of the strings are difficult to translate or ambiguous. Please also file issues (or even better, merge requests!) if it doesn’t build or work for you.

If you just want to try out a new application, it takes 10 seconds to install it from Flathub.

Please welcome Acer to the LVFS

Acer has now officially joined the LVFS, promoting the Aspire A315 firmware to stable.

Acer has been testing the LVFS for some time and now all the legal and technical checks have been completed. Other models will follow soon!

Realizing that I’m not Super Human: Part 1

Most of the content on this blog is technical in nature, as is my Twitter feed. I wanted to step to one side and talk a bit about one of the little things I’ve learned about my body: I’m not super human any more.

I’m one of those people who have been really lucky with my general physical and mental health over the years. I used to play a lot of rugby and got the odd injury, but nothing a long hot bath couldn’t fix. Modulo catching the flu a few years ago, I don’t really get ill very much.

About this time last year I began to get a small amount of back pain when sitting for a long time, or when walking around for over an hour or so. This was the first warning sign. Over the next few months this got worse, to the point where I had an electrical tingling all down one leg whenever I did “too much” walking or playing with the kids. I self-diagnosed this as some kind of sciatica and didn’t pay too much attention to it. This was the second warning sign. After my back “went pop” a couple of times in one week, leaving me unable to walk properly at all, I finally went to a private physiotherapist and asked for some advice. Luckily for me this was all covered as part of my Red Hat compensation package and I didn’t have to pay a thing, which I know really isn’t the case if you’re paying for healthcare yourself.

The physio did quite a lot of tests and then announced that my posture was, put bluntly, total crap. There was no magic pill or special sports massage to make it better, but everything could be fixed with a little bit of hard work. I had to make some immediate changes: my comfy armchair was out, and a standing desk was in. Sitting in a chair coding for 10 hours was bad, so hourly breaks were enforced. I was given some exercises to do every day (which I did), and after about 6 weeks of visits I was discharged, as the tingling had gone and the back pain was much less. The physio suggested I do a weekly Pilates class to further improve my posture and to keep everything where it should be.

This was waaaay outside my comfort zone, as I’d never done any kind of exercise or group class before. I went to a group class and immediately realized I was at least two orders of magnitude less capable than everyone else. I could barely touch my knees when they could all touch the floor. The instructor was really kind and showed me all the positions and what to do and not do, but I still felt a bit weird in a class of mostly middle-aged women, dragging them all down to my level. I asked the instructor if he did 1:1 classes and he said yes; since then I’ve been doing a 1 hour Pilates class every other week and, against all odds, I’m actually quite enjoying it now. My posture is much better; when I run I feel less like I’m flopping about and now have a stable “core” of muscle holding me all together. I can throw my children around in the park and not worry about discs in my back bulging to the point of rupture. My breathing and concentration have improved, and if anything I guess I’m slightly more productive with hourly breaks.

Talking to other men, it seems quite a few people also do Pilates, but for some reason are a bit embarrassed to admit it. I suppose I was initially too, but not now. My wife does Yoga, and to me Pilates feels like a more physical Yoga without all the spiritual stuff mixed in. I’m not quite a card-carrying evangelist, but I really would recommend you try Pilates if you sit at a desk all day hunched over an editor, like I used to. Doing 1:1 classes is expensive (about £80/month) but it is 100% worth it with the results I’ve had so far.

So, the conclusion: I’m not Super Human any more, but that’s okay. If you’ve read this far – shoulders back, chin up, and get back to coding. If you’re interested, want an awesome instructor and you live in West London, give Ash a call.

GNOME Firmware Updater

A few months ago, Dell asked if I’d like to co-mentor an intern over the summer. The task was to create a GTK “power user” application for managing firmware. The idea was that someone like Dell support could ask the user to run a little application and then read back firmware versions or downgrade to an older firmware version, rather than getting them to use the command line. The GNOME and KDE software centers deliberately show a “simple” view of firmware, only showing devices when updates are pending.

In June I was introduced to Andrew Schwenn, who was our intern for the summer. This blog post isn’t about Andrew, but I will say he did amazingly well and was soon up to speed, filing excellent pull requests even with a grumpy anally-retentive maintainer like me. Andrew has finished his internship now, but I wouldn’t be surprised if we work with him again in the future. Most of the work so far is from Andrew, so I can’t claim too much credit here.

GNOME Firmware Updater was designed in the style of a GNOME Control Center panel, and all the code is written in a way that makes a port very simple indeed if that’s what we actually want. At the moment it’s a separate project and binary, as we’re still prototyping the UI and working out what kind of UX we want from a power user tool. It’s mostly complete and a few weeks away from its first release. When it does get an official release, I’ll be sure to upload it to Flathub to make it easy for the world to install. If this sounds interesting to you the code is here. I don’t have a huge amount of time to dedicate to this power user tool, but please open pull requests or issues if there’s something you’d like to see fixed.

Musings on the Microsoft Component Firmware Update (CFU) Protocol

CFU is a new specification from Microsoft designed to be the one true protocol for updating hardware. No vendor seems to be shipping hardware supporting CFU (yet?), although I’ve had two peripheral vendors ask my opinion which is why I’m posting here.

CFU has a bizarre pre-download phase before sending the firmware to the microcontroller, so the uC can check if the firmware is required and compatible. CFU also requires devices to be able to transfer the entire new firmware in runtime mode. The pre-download “offer” allows the uC to check any sub-components attached (e.g. other devices attached to the SoC) and forces it to do dependency resolution in case sub-components have to be updated in a specific order.

Pushing the dependency resolution down to the uC means the uC has to do all the version comparisons and also know all the logic with regard to protocol incompatibilities. You could be in a position where the uC firmware itself needs to be updated so that it “knows” about new protocol restrictions, and only then can a subsequent update install the uC and the things attached to it in the right order. If we always update the uC straight to the latest version, the running version (probably the factory default) doesn’t know about the new restrictions.
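A toy model makes the ordering problem concrete. This hypothetical Python sketch is not the CFU wire protocol; it only assumes that the uC judges each offer against rules compiled into its own firmware. `Offer`, `Microcontroller` and the `sensor` component are invented for illustration.

```python
from dataclasses import dataclass, field
from typing import Dict, Tuple

Version = Tuple[int, ...]


@dataclass
class Offer:
    component: str
    version: Version


@dataclass
class Microcontroller:
    """Hypothetical uC that accepts or rejects offers using rules in its own firmware."""
    versions: Dict[str, Version]
    # component -> minimum uC version needed before that component may be updated.
    # These rules ship inside the uC firmware, so old firmware only knows old rules.
    rules: Dict[str, Version] = field(default_factory=dict)

    def accept(self, offer: Offer) -> bool:
        current = self.versions.get(offer.component)
        if current is None or offer.version <= current:
            return False  # unknown component, or not an upgrade
        needed = self.rules.get(offer.component)
        if needed is not None and self.versions["uc"] < needed:
            return False  # the uC must be updated first
        return True


# Factory firmware: no rule about sensor 2.0, so it happily accepts it,
# even if sensor 2.0 really needs a uC of at least 1.5.
factory = Microcontroller({"uc": (1, 0), "sensor": (1, 0)})
print(factory.accept(Offer("sensor", (2, 0))))
```

Only firmware that already contains the `sensor` rule can turn the offer down, which is the chicken-and-egg situation described above.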

The other issue with pushing resolution down to the uC is that the peripheral is unaware of the other devices in the system, so it couldn’t, for example, install a new firmware version only on new builds of Windows. Something that we support in fwupd is being able to restrict the peripheral device firmware to a specific SMBIOS CHID or a system firmware vendor, which lets vendors solve the “same hardware in different chassis, with custom firmware” problem. I don’t see how that could be possible using CFU unless I misunderstand the new .inf features. All the dependency resolution should be in the metadata layer (e.g. in the .inf file) rather than being pushed down to the hardware running the old firmware.
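By contrast, when the requirements live in metadata, the host can decide everything before a single byte is sent to the device. The sketch below is hypothetical: `requires_chid` and `after` are illustrative field names rather than fwupd’s metadata schema, and the CHID strings are placeholders for real GUIDs. It filters releases by the host’s CHIDs, drops anything whose prerequisites were filtered out, and orders the rest with `graphlib.TopologicalSorter` (Python 3.9+).

```python
from graphlib import TopologicalSorter  # Python 3.9+
from typing import Dict, List, Set

# Hypothetical release metadata; field names and CHID values are placeholders.
RELEASES: Dict[str, dict] = {
    "dock-uc": {"requires_chid": {"chid-a"}, "after": set()},
    "dock-sensor": {"requires_chid": set(), "after": {"dock-uc"}},
}


def plan(releases: Dict[str, dict], host_chids: Set[str]) -> List[str]:
    """Pick the releases allowed on this host and order them by their dependencies."""
    # An empty requires_chid means "any system"; otherwise one CHID must match.
    ok = {name for name, rel in releases.items()
          if not rel["requires_chid"] or rel["requires_chid"] & host_chids}
    # Drop anything whose prerequisites were filtered out, until nothing changes.
    changed = True
    while changed:
        changed = False
        for name in list(ok):
            if not releases[name]["after"] <= ok:
                ok.discard(name)
                changed = True
    # Map each release to its predecessors; static_order() yields predecessors first.
    graph = {name: releases[name]["after"] for name in ok}
    return list(TopologicalSorter(graph).static_order())
```

On a matching system the plan is the uC first and the sensor second; on any other system nothing is offered, and the device never has to make that judgement itself.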

What is possibly the biggest failure I see is the doubling of flash storage required to do a runtime transfer, the extra power budget of being woken up to process the “offer”, and enough bulk power to stay alive if “unplugged” during an A/B swap. Realistically it’s an extra few dollars for an ARM uC to act as a CFU “bridge” for legacy silicon and IP, which I can’t see as appealing to an ODM given they make other strange choices just to save a few cents on a BOM. I suppose the CFU “bridge” could also do firmware signing/encryption, but then you still have a physical trace on the PCB with easy-to-read/write unsigned firmware. CFU could have defined a standardized way to encrypt and sign firmware, but they kinda handwave it away, letting the vendors do what they think is best, and we all know how that plays out.

CFU downloads in runtime mode, but from experience, most devices can transfer a few hundred kB in less than ~200ms. Erasing flash is usually the slowest step, typically taking less than 2s, with writing next at ~1s; both are done in the bootloader phase. I’ve not seen a single device that can do a flash-addr-swap to be able to do the A/B solution they’ve optimized for, with the exception of enterprise UEFI firmware, which CFU can’t update anyway.

By far the longest process in the whole update is the USB re-enumeration (up to twice), which we have to allow 5s (!!!) for in fwupd due to slow hubs and other buggy hardware. So, CFU doubles the flash size requirement for millions of devices to save ~5 seconds for a procedure which might be done once or twice in the device’s lifetime. The transfer isn’t the limitation either, even over Bluetooth: if the dependency resolution is “higher up”, you only need to send the firmware to the device when it needs an update, rather than every time you scan the device.
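Putting the figures above into a rough worst-case budget shows where the time goes. This assumes a conventional bootloader-mode update where the transfer, erase and write each happen once and the device re-enumerates twice (into and out of the bootloader); the numbers are the upper bounds quoted in the text, not measurements of any particular device.

```python
# Rough worst-case figures from the text above, in seconds.
TRANSFER_S = 0.2     # a few hundred kB over USB
ERASE_S = 2.0        # flash erase, usually the slowest flash operation
WRITE_S = 1.0        # flash write
REENUMERATE_S = 5.0  # what fwupd allows for each USB re-enumeration


def bootloader_update_s(reenumerations: int = 2) -> float:
    """Worst-case wall time for a detach/flash/attach update in bootloader mode."""
    return TRANSFER_S + ERASE_S + WRITE_S + reenumerations * REENUMERATE_S


total = bootloader_update_s()
share = 2 * REENUMERATE_S / total
print(f"total {total:.1f}s, re-enumeration {share:.0%} of it")
# prints: total 13.2s, re-enumeration 76% of it
```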

I’m similarly unimpressed with the no-user-interaction idea where firmware updates just happen in the background, as the user really needs to know when the device is going to disappear and re-appear for 5 seconds (even CFU has to re-enumerate…). Imagine it happening during a presentation, or as the machine is about to have the lid shut to go into S3.

EDIT: See the new fwupd plugin.

GNOME Software in Fedora will no longer support snapd

In my slightly infamous email to fedora-devel I stated that I would turn off the snapd support in the gnome-software package for Fedora 31. A lot of people agreed with the technical reasons, but failed to understand the bigger picture and asked me to explain myself.

I wanted to tell a little, fictional, story:

In 2012 the ISO institute started working on a cross-vendor petrol reference vehicle to reduce the amount of R&D different companies had to do to build and sell a modern, and safe, saloon car.

Almost immediately, Mercedes joins ISO and starts selling the ISO car. Fiat joins in 2013, Peugeot in 2014, and General Motors finally joins in 2015 and adds support for Diesel engines. BMW, who had been trying to maintain the previous chassis they designed on their own (sold as “BMW Kar Koncept”), finally adopts the ISO car also in 2015. BMW versions of the ISO car use BMW-specific transmission oil, as BMW doesn’t trust oil from the ISO consortium.

Mercedes looks to the future, and adds high-voltage battery support to the ISO reference car also in 2015, adding the required additional wiring and regenerative braking support. All the other members of the consortium can use their own high voltage batteries, or use the reference battery. The battery can be charged with electricity from any provider.

In 2016 BMW stops marketing the “ISO Car” like all the other vendors, and instead starts calling it “BMW Car”. At about the same time BMW adds support for hydrogen engines to the reference vehicle. All the other vendors can ship the ISO car with a hydrogen engine, but all the hydrogen must be purchased from a BMW-certified dealer. If any vendor other than BMW uses the hydrogen engines, they can’t use the BMW-specific heat shield which protects the fuel tank from exploding in the event of a collision.

In 2017 Mercedes adds traction control and power steering to the ISO reference car. It is enabled almost immediately and used by nearly all the vendors with no royalties and many customer lives are saved.

In 2018 BMW decides that actually producing vendor-specific oil for its cars is quite a lot of extra work, and tells all customers that their existing transmission oil has to be thrown away, but that they can now get free oil from the ISO consortium. The ISO consortium distributes a lot more oil, but also has to deal with a lot more customer queries about transmission failures.

In 2019 BMW builds a special cut-down ISO car, but physically removes all the petrol and electric functionality from the frame. It is rebranded as “Kar by BMW”. It then sends a private note to the chair of the ISO consortium that it’s not going to be using ISO car in 2020, and that it’s designing a completely new “Kar” that only supports hydrogen engines and does not have traction control or seatbelts. The explanation given was that BMW wanted a vehicle that was tailored specifically for hydrogen engines. Any BMW customers using petrol or electricity in their car must switch to hydrogen by 2020.

The BMW engineers that used to work on ISO Car have been shifted to work on Kar, although they have committed to also work on ISO Car if it’s not too much extra work. BMW still wants to be officially part of the consortium and to be able to sell the ISO Car as an extra vehicle to the customer that provides all the engine types (as some customers don’t like hydrogen engines), but doesn’t want to be seen to support anything other than a hydrogen-based future. It’s also unclear whether the extra vehicle sold to customers would be the “ISO Car” or the “BMW Car”.

One ISO consortium member asks whether they should remove hydrogen engine support from the ISO car, as they feel BMW is not playing fair. Another consortium member thinks that the extra functionality could just be disabled by default, and that any unused functionality should certainly be removed. All members of the consortium feel like BMW has pushed them too far. Mercedes stops selling the hydrogen ISO Car model, stating it’s not safe without the heat shield, and because BMW isn’t going to be supporting the ISO Car in 2020.

Fun with the ODRS, part 2

For the last few days I’ve been working on the ODRS, the review server used by GNOME Software and other open source software centers. I had to do a lot of work initially to get the codebase up to modern standards, but now it has unit tests (86% coverage!), full CI and is using the latest versions of everything. All this refactoring allowed me to add some extra new features we’ve needed for a while.

The first feature changes how we do moderation. The way the ODRS works means that any unauthenticated user can mark a review for moderation, for any reason, in just one click. Once marked, the review is no longer shown to any other user and requires a moderator to perform one of three actions:

- Decide it’s okay, and clear the reported counter back to zero

- Decide it’s not very good, and either modify it or delete it

- Decide it’s spam or in any way hateful, and delete all the reviews from the submitter, adding them to the user blocklist
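The three moderator actions, plus the silent blocklist described next, could be modelled like this. It is a hypothetical in-memory sketch, not the ODRS code: `ModerationQueue`, `user_hash` and the method names are invented, and clearing the counter after an edit is an assumption.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional, Set


@dataclass
class Review:
    review_id: int
    user_hash: str  # hypothetical stand-in for however the submitter is identified
    text: str
    reported: int = 0


@dataclass
class ModerationQueue:
    reviews: Dict[int, Review] = field(default_factory=dict)
    blocklist: Set[str] = field(default_factory=set)

    def submit(self, review: Review) -> None:
        """Blocked users get no error; their reviews are silently dropped."""
        if review.user_hash not in self.blocklist:
            self.reviews[review.review_id] = review

    def mark_ok(self, review_id: int) -> None:
        """Action 1: the review is fine, so reset the reported counter to zero."""
        self.reviews[review_id].reported = 0

    def edit_or_delete(self, review_id: int, new_text: Optional[str] = None) -> None:
        """Action 2: fix up a poor review, or delete it when new_text is None."""
        if new_text is None:
            del self.reviews[review_id]
        else:
            self.reviews[review_id].text = new_text
            self.reviews[review_id].reported = 0  # assumption: edited reviews are cleared

    def block_submitter(self, review_id: int) -> None:
        """Action 3: spam or hate, so delete everything from the submitter and block them."""
        user = self.reviews[review_id].user_hash
        self.blocklist.add(user)
        self.reviews = {k: r for k, r in self.reviews.items() if r.user_hash != user}
```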

For the last few years it’s been mostly me deciding on the ~3k marked-for-moderation reviews with the help of Google Translate. Let me tell you, after all that my threshold for dealing with internet trolls is super low. There are already over 60 blocked users on the ODRS, although they’ll never really know they are shouting into /dev/null…

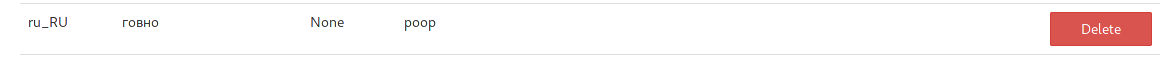

One change I’ve made here is that it now takes two “reports” of a review before it needs moderation; the logic being that a lot of reports seem accidental, and a really bad review is normally already reported by multiple people in the few days after it’s been posted. The other change is that we now have a locale-specific “bad word list” that submitted reviews are checked against at submission time. If a review is flagged, the moderator has to decide on the action before it’s ever shown to other users. This has already correctly flagged 5 reviews in the couple of days since it was deployed. If you contributed to the spreadsheet with “bad words” for your country, I’m very grateful. That bad word list will be available as a JSON dump on the ODRS on Monday in case it’s useful to other people. I fully expect it’ll grow and change over time.
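The new visibility rule is simple enough to state as code. In this hypothetical sketch `REPORTS_BEFORE_MODERATION` and `flagged_at_submission` are invented names; the only facts taken from the text are that two reports are now needed, and that a review flagged by the bad-word check waits for a moderator before anyone sees it.

```python
from dataclasses import dataclass

REPORTS_BEFORE_MODERATION = 2  # one accidental report no longer hides a review


@dataclass
class Review:
    text: str
    reported: int = 0
    flagged_at_submission: bool = False  # set when the locale bad-word check matched

    def report(self) -> None:
        self.reported += 1

    def needs_moderation(self) -> bool:
        return self.flagged_at_submission or self.reported >= REPORTS_BEFORE_MODERATION

    def visible(self) -> bool:
        return not self.needs_moderation()
```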

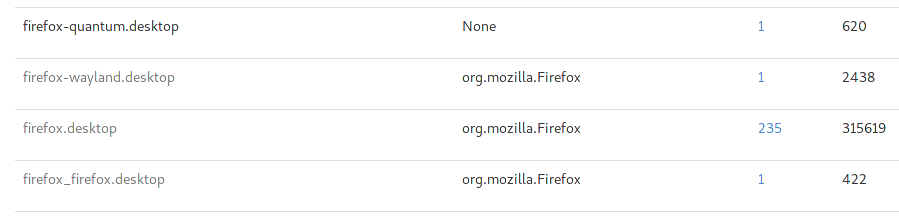

The other big change is dealing with different application IDs. Over the last decade some applications have moved from “launchable-style” inkscape.desktop IDs to AppStream-style IDs like org.inkscape.Inkscape.desktop, and are even reported in different forms, e.g. the Flathub-inspired org.inkscape.Inkscape and the Snappy io.snapcraft.inkscape-tIrcA87dMWthuDORCCRU0VpidK5SBVOc. Until today a review submitted against the old desktop ID wouldn’t match the Flatpak one, and now it does. The same happens when we get the star ratings, which means that apps that change ID no longer start with a clean slate but inherit all the positivity of the old version. Of course, the usual per-request ordering and filtering is done, so reviews of versions older than the one requested might be shown lower than reviews of newer versions anyway.
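One way to make those IDs compare equal is to normalise each one to a canonical key. This is a hypothetical sketch, not how the ODRS actually does it: it assumes the Snap form is `io.snapcraft.<name>-` followed by a 32-character alphanumeric suffix (as in the example above), and that an alias table from old names to new IDs is available, for instance from `<provides>` entries.

```python
import re
from typing import Dict

# io.snapcraft.<snap name>-<32-character suffix>, as in the Inkscape example above.
SNAP_ID = re.compile(r"^io\.snapcraft\.(?P<name>.+)-[A-Za-z0-9]{32}$")

# Hypothetical alias table, e.g. built from <provides><id> entries in metainfo files.
ALIASES: Dict[str, str] = {"inkscape": "org.inkscape.Inkscape"}


def canonical_app_id(app_id: str) -> str:
    """Map the different spellings of one application's ID onto a single key."""
    if app_id.endswith(".desktop"):
        app_id = app_id[: -len(".desktop")]
    match = SNAP_ID.match(app_id)
    if match:
        app_id = match.group("name")
    return ALIASES.get(app_id, app_id)
```

With this, all four Inkscape IDs quoted above map to `org.inkscape.Inkscape`, so reviews and star ratings can be pooled under one key.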

This is also your monthly reminder to use <provides><id>oldname.desktop</id></provides> in your metainfo.xml file if you change your desktop ID. That includes you, Flathub and Snapcraft maintainers. If you do that client side then you probably at least get the right reviews if the software center does the right thing, but doing it server side as well makes really sure you’re getting the reviews and ratings you want in all cases.
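Reading that hint back out of a metainfo file takes only a few lines with Python’s standard `xml.etree.ElementTree`. The sketch below is illustrative: the XML is a trimmed-down example rather than Inkscape’s real file, and `provided_aliases` is a hypothetical helper that maps each old ID to the component’s current `<id>`.

```python
import xml.etree.ElementTree as ET
from typing import Dict

# A trimmed-down metainfo.xml for an app that changed its desktop ID.
METAINFO = """
<component type="desktop-application">
  <id>org.inkscape.Inkscape</id>
  <provides>
    <id>inkscape.desktop</id>
  </provides>
</component>
"""


def provided_aliases(xml_text: str) -> Dict[str, str]:
    """Map each old ID listed under <provides> to the component's current <id>."""
    root = ET.fromstring(xml_text.strip())
    current = (root.findtext("id") or "").strip()
    return {el.text.strip(): current for el in root.findall("provides/id") if el.text}
```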

If all this sounds interesting, and you’d like to know more about the ODRS development, or would like to be a moderator for your language, please join the mailing list and I’ll post there next week when I’ve made the moderator experience nicer than it is now. It’ll also be the place to request help, guidance and also ask for new features.

Initial Fun with the Open Desktop Ratings Service: Swearing!

The ODRS is the service that produces ratings and reviews for gnome-software. I built the service a few years ago, and it’s been dutifully trucking on ever since. There are over 25,000 reviews, 50k votes, and over 4k different applications reviewed. Over half a million clients get application reviews every single day.

Recently it’s been showing signs of needing work, and so I’ve spent a few days converting it to Python 3, then to SQLAlchemy, and then fixing all the broken stuff that we’ve lived with for a while (e.g. no emoji support because we were not using utf8mb4…). Part of the new work will be making it easier to flag and then moderate reviews, and that needs your help. Although any unauthenticated user can report a review for any reason, some reviews should be automatically marked at submission if they contain known bad words. There is almost no reason to write a review in locale en_GB and use the word fuck and so I think marking that review as needing moderation before it’s shown to thousands of people is a sensible thing to do.
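The emoji problem comes down to byte widths: MySQL’s legacy `utf8` character set (also called `utf8mb3`) stores at most three bytes per character, while most emoji need four bytes in UTF-8, hence the move to `utf8mb4`. This small Python check illustrates the difference; `fits_mysql_utf8mb3` is a hypothetical helper, not part of any database driver.

```python
def max_utf8_bytes(text: str) -> int:
    """Largest UTF-8 encoded width of any single character in text."""
    return max((len(ch.encode("utf-8")) for ch in text), default=0)


def fits_mysql_utf8mb3(text: str) -> bool:
    """MySQL's legacy 'utf8' charset (utf8mb3) stores at most 3 bytes per character."""
    return max_utf8_bytes(text) <= 3


print(max_utf8_bytes("caf\u00e9"))     # 2: accented Latin letters fit in utf8mb3
print(max_utf8_bytes("\U0001F44D"))   # 4: a thumbs-up emoji needs utf8mb4
```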

For bad-word flagging to work, I can’t just use a single blacklist of words, as some words are only really vulgar in some regions, and some are perfectly valid words in other languages. For this reason I need the blacklist to be keyed to the submitted locale.
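A locale-keyed list could be checked roughly like this. The word lists are placeholders, and the fallback from a full locale such as `en_GB.UTF-8` to its language (`en`) is an assumption about how such a list might be used, not a description of the ODRS. Matching whole words rather than substrings avoids flagging innocent words that merely contain a listed one.

```python
import re
from typing import Dict, List, Set

# Placeholder lists keyed by locale; the real entries come from contributors.
BAD_WORDS: Dict[str, Set[str]] = {
    "en": {"badword"},
    "en_GB": {"gbonlyword"},
}


def locale_chain(locale: str) -> List[str]:
    """'en_GB.UTF-8' -> ['en_GB', 'en']: the full locale first, then the language."""
    base = locale.split(".")[0].split("@")[0]
    chain = [base]
    if "_" in base:
        chain.append(base.split("_")[0])
    return chain


def flag_review(text: str, locale: str) -> bool:
    """True if any whole word in the review is on a list for this locale."""
    words: Set[str] = set()
    for loc in locale_chain(locale):
        words |= BAD_WORDS.get(loc, set())
    return any(token in words for token in re.findall(r"\w+", text.lower()))
```

A word listed only under `en_GB` is flagged for British submissions but not for `en_US` ones, which is the regional behaviour described above.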

This is where I need your help. If you can spare 2 minutes, and know a lot of dirty words in your language can you please add them to this spreadsheet. Much appreciated.