Focus stealing prevention exists for two main reasons: One is security, since we need to prevent rogue apps from deceiving users into e.g. typing their password into another window. If apps can silently claim keyboard focus and open their own window over the currently focused one, this enables phishing and other similar attacks. The other is user experience: Even if an app isn’t maliciously taking over your focus, it can be annoying to have a new window popping up while you’re typing something and have half your sentence end up in the wrong app.

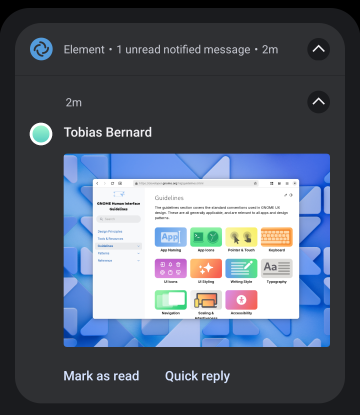

At the same time there are cases where you want apps to be able to request focus, for example when clicking a link in a chat app and wanting it to open in the browser. In this case you want the focus to move to the browser window.

This is why our compositor library mutter implements focus stealing prevention mechanisms, which allow the currently focused app to request that a specific other app be allowed to claim focus now.

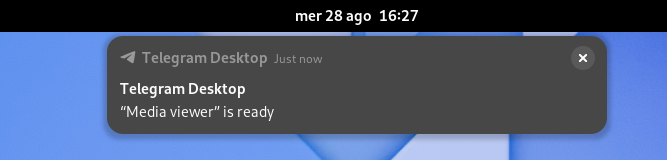

<App> is ready??

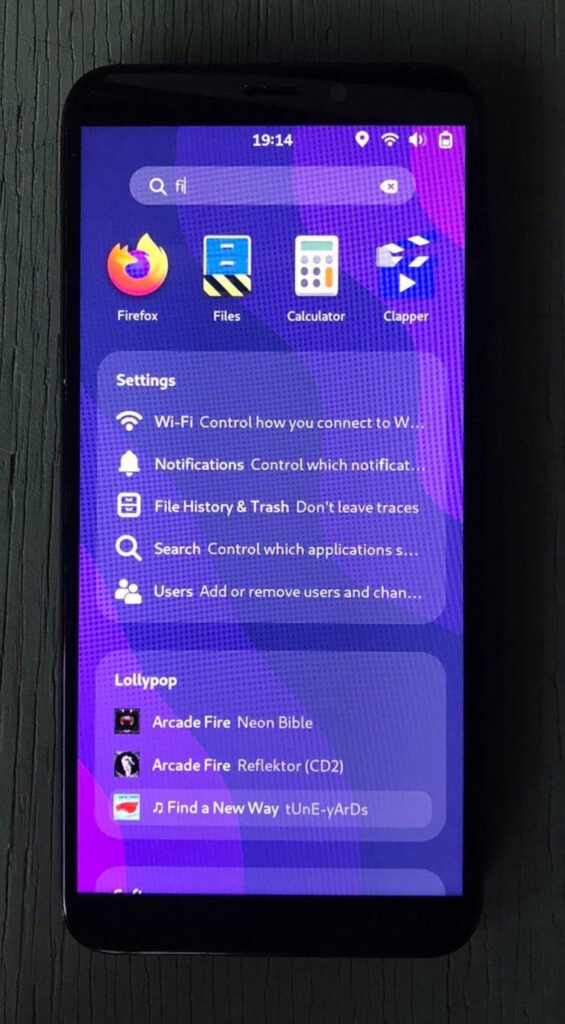

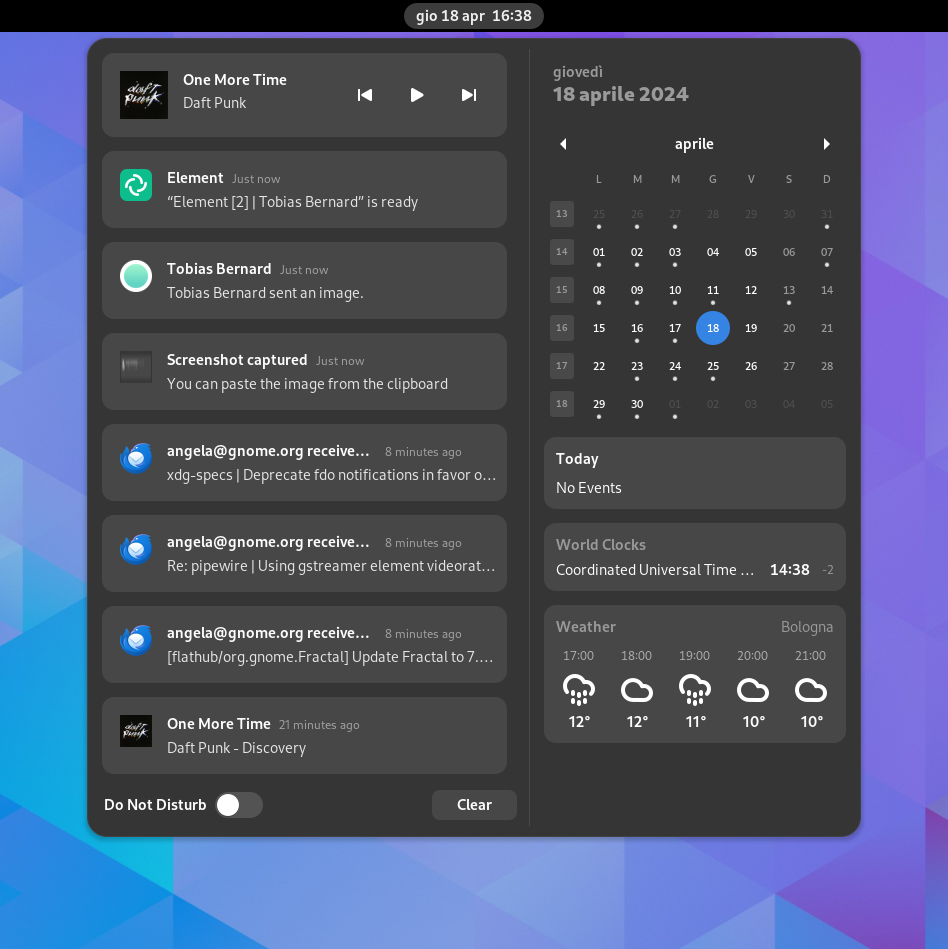

Most users have probably seen an “<App> is ready” notification in GNOME Shell at some point. Unfortunately this notification doesn’t really explain why it’s being shown and what’s happening, which may cause confusion.

Because of this there have been proposals to disable focus stealing prevention until it works better (mutter issue 673), and there are a number of GNOME Shell extensions that do so.

These are the main cases where the notification is shown:

- A new window is opened and either the launcher app or the launched app doesn’t implement the XDG Activation protocol or the startup notification specification

- An app requests focus for one of its windows, but was not activated in a valid way (e.g. because it wasn’t started by a user action)

- An app requests focus for a new window, but it’s slow to start and in the meantime there are additional user interactions. In this case we don’t want to interrupt, and show the notification so people can switch at their convenience.

- An app is launched from an environment that isn’t able to use the XDG Activation protocol (e.g. a terminal)

The protocol responsible for this, XDG Activation (the Wayland equivalent to the X11-specific startup notification spec), was introduced somewhat recently (2020) and needs to be adopted by UI toolkits. GNOME 46 and 47 saw a few fixes, and the feature was polished both on the client side (GTK and xdg-desktop-portal) and in the compositor implementation (mutter), but there are still cases where XDG activation isn’t hooked up properly.

How XDG activation works

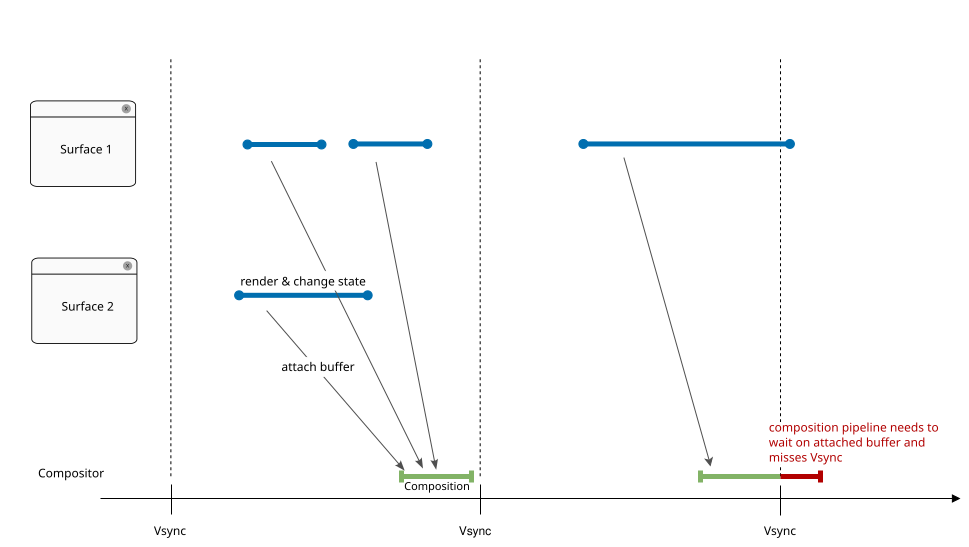

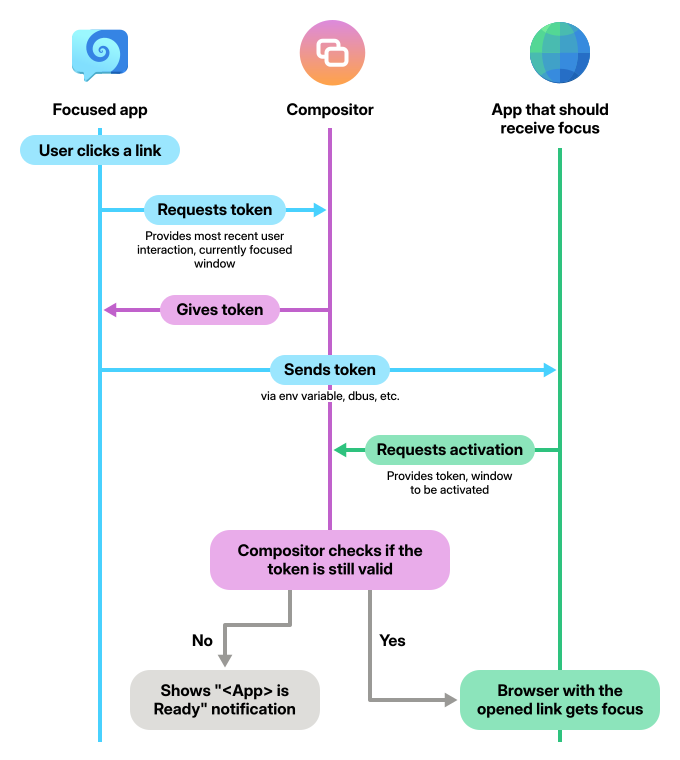

The way the protocol works is that the currently focused app asks the compositor to create a token linked to the focused window (Wayland surface) and the most recent user interaction (an input event serial associated with a seat).

This token is then used by the app that should receive focus when it requests to be activated. In GNOME Shell, activation means that the window receives focus and is placed on top of other windows. An activation token may still be rejected, for example if the window linked to the token doesn’t have focus or if the linked user interaction isn’t recent enough.
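The token flow described above can be modelled in a few lines of Python. This is only a toy sketch: `ToyCompositor`, `Token` and all method names are made up for illustration and have nothing to do with mutter’s actual code, and “recent enough” is simplified to “no newer user interaction has happened since the token was created”.

```python
from dataclasses import dataclass
import secrets


@dataclass(frozen=True)
class Token:
    value: str    # opaque string handed to the requesting app
    surface: str  # window (Wayland surface) that requested the token
    serial: int   # input event serial the token is linked to


class ToyCompositor:
    """Hypothetical model of token handling, not mutter's implementation."""

    def __init__(self):
        self.focused_surface = None
        self.latest_serial = 0  # serial of the most recent user interaction
        self._tokens = {}

    def user_input(self, surface):
        """Record a user interaction on `surface` and return its serial."""
        self.latest_serial += 1
        self.focused_surface = surface
        return self.latest_serial

    def create_token(self, surface, serial):
        """Create a token linked to a surface and a user interaction."""
        token = Token(secrets.token_hex(8), surface, serial)
        self._tokens[token.value] = token
        return token.value

    def activate(self, token_value, target_surface):
        """Return True if `target_surface` receives focus, False if rejected."""
        token = self._tokens.get(token_value)
        if token is None:
            return False  # unknown token
        if token.surface != self.focused_surface:
            return False  # the requesting window no longer has focus
        if token.serial != self.latest_serial:
            return False  # the user has interacted again in the meantime
        self.focused_surface = target_surface
        return True


compositor = ToyCompositor()
serial = compositor.user_input("chat-app")           # user clicks a link
token = compositor.create_token("chat-app", serial)  # chat app asks for a token
print(compositor.activate(token, "browser"))         # browser presents the token
```

In this model, any user interaction between creating the token and presenting it causes the activation to be rejected, which corresponds to the slow-startup case from the list of notification triggers.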

In addition to handling focus, GNOME Shell also tracks app launching. Until the new app window is actually shown, GNOME Shell uses a “loading spinner” mouse cursor to indicate to the user that the app is loading. If the app doesn’t implement the XDG Activation protocol, the loading indicator only disappears after a timeout because GNOME Shell doesn’t know that the application finished loading and has presented the target window.

The protocol doesn’t define how tokens are given to the target app. One reason for this is that it depends on how the app is started. The main options are:

- Setting the XDG_ACTIVATION_TOKEN environment variable

- D-Bus Activation using the platform-data field, which contains the activation token

- XDG portals that will launch an app (e.g. the OpenURI or OpenFile portals)

The target app then needs to collect the token and use it to have its window activated to receive focus and to signal to the compositor that it started successfully.
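For the environment variable route, the hand-over could look roughly like the sketch below. The helper names `env_for_child` and `take_activation_token` are hypothetical. Removing the variable after reading it is done here as a precaution so the token isn’t passed on to further child processes. Toolkits normally take care of all of this, and the final step of handing the token to the compositor is left out.

```python
import os

TOKEN_VAR = "XDG_ACTIVATION_TOKEN"


def env_for_child(token, base_env=None):
    """Launcher side: copy the environment and add the activation token.

    The result can be passed as `env=` to subprocess.Popen or similar.
    """
    env = dict(os.environ if base_env is None else base_env)
    env[TOKEN_VAR] = token
    return env


def take_activation_token(environ=None):
    """Target side: read the token and remove it from the environment.

    Returns None if the app was launched without a token.
    """
    environ = os.environ if environ is None else environ
    return environ.pop(TOKEN_VAR, None)


# Usage with a plain dict standing in for the child's environment;
# the token string is a made-up placeholder.
child_env = env_for_child("example-token", {"PATH": "/usr/bin"})
token = take_activation_token(child_env)
```

If `take_activation_token` returns `None`, the app has nothing to present to the compositor, which is one of the situations where the “<App> is ready” notification can show up instead of a focus change.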

Not smart enough

When I started looking into how our focus prevention mechanism works to investigate the issues mentioned above, I was initially pretty confused. There were a lot of cases where the focus window switch worked fine, but other times it wouldn’t. I realized quickly that with existing windows the “<App> is ready” notification is shown, but new windows would get focus immediately.

This struck me as odd: Why are new windows allowed to do whatever, but existing windows are restricted in the way they can take over focus?

I first thought this was some sort of bug, but then I discovered that the behavior was by design: Mutter has a gsettings property called focus-new-windows that controls the focus stealing prevention mechanism. This property can be strict or smart (the latter being the default).

- smart means that in most cases new windows get focus (even without asking for it) and are raised to the top of the window stack

- strict means they get focus (are “activated”, in technical terms) only when they are actually supposed to
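The difference between the two modes can be boiled down to a small decision function. This is a simplified sketch, not mutter’s logic: the function name and the `has_valid_activation` parameter are made up, and the “in most cases” of smart mode is reduced to “always”.

```python
def new_window_gets_focus(mode, has_valid_activation):
    """Decide whether a newly opened window is focused right away.

    `mode` mirrors the focus-new-windows setting; `has_valid_activation`
    stands for "the window presented an activation token that was accepted".
    """
    if mode == "strict":
        # Only properly activated windows may take focus.
        return has_valid_activation
    if mode == "smart":
        # Simplification: new windows get focus whether they asked or not.
        return True
    raise ValueError(f"unknown mode: {mode!r}")
```

With `"smart"` the second argument has no effect at all, which is why apps without proper activation support go unnoticed in the default configuration.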

The smart mode exists in part because there are some cases where our current focus prevention system does not work well. These issues include:

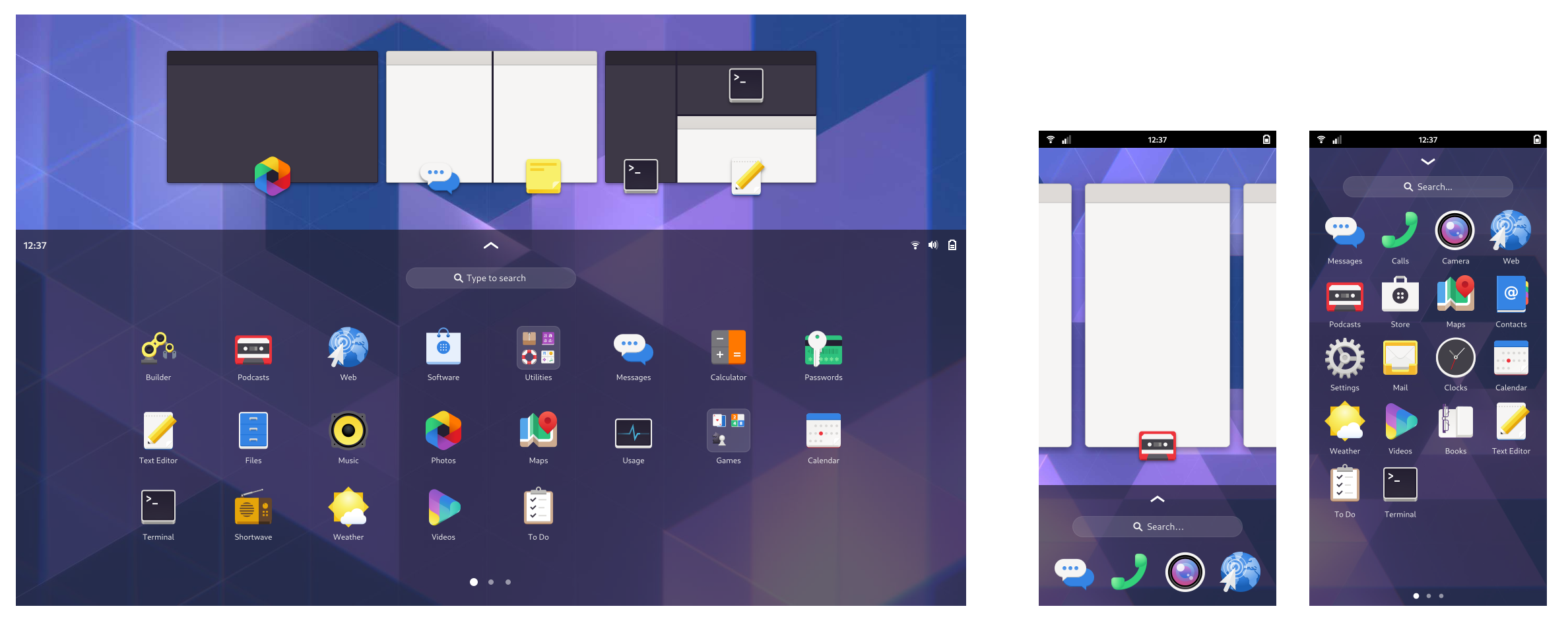

- Launching apps via terminal (vte issue #2788). The main issue is that the terminal executing a command does not know whether that process will present a window or not. For example, if you launch vim there’s no new window, but if you launch firefox there is.

- Launching apps via Run a Command in GNOME Shell (gnome-shell issue #7704) shares similar issues as running apps from the terminal

- Apps launched via custom keyboard shortcut (e.g. set up in Settings > Keyboard > Keyboard Shortcuts)

- The lack of implementation of the appropriate protocols in apps or toolkits

Because the cases where a new window is opened are a significant percentage of the overall cases where focus prevention is triggered, this smart mode is making it appear as though apps actually implement the XDG Activation protocol, even if they don’t. While it does somewhat reduce annoyance for users, it gives developers the false impression that they don’t have to do anything.

It also makes it harder to debug issues where something doesn’t work as expected or is missing the correct implementation. For example, even in GTK4 focus transferring is broken in some cases, and this took a long time to be discovered (gtk issue #6711).

Security implications

Unfortunately the current situation with smart as the default means that we’re not getting most of the benefits of focus stealing prevention. Apps are able to spawn a new window over your current one and grab keyboard focus, because the smart mode just gives the new window focus, circumventing the safety measures. This is trivial to exploit by malicious apps: All they need to do is open a new window, and focus stealing prevention doesn’t apply.

Next steps

While some people have asked for focus stealing prevention to be disabled completely until it’s implemented by most apps and toolkits, I’m not sure this is the best way forward. If we did that, nobody would notice which apps don’t implement it, so there’d be no reason for toolkits to do so.

On the other hand, there are some remaining issues around terminal applications and similar use cases that we don’t have a plan for yet, so just switching to strict to flush out app bugs isn’t ideal either at the moment.

There is currently no consensus in the team as to how to proceed. The two main directions we could take are:

- Switch to strict mode by default (mutter issue #3486) once a few remaining issues are resolved, perhaps with a “flag day” deadline so apps have time to implement it.

- Slowly make the smart mode stricter over time.

Either way we need to raise more awareness of the issue to get app and toolkit developers interested in improving things in this area, which this blogpost is a part of 🙂

It’d also be helpful if more people (especially developers) turn on strict mode on their system, so we get more testing for which apps work and which don’t. This is the relevant gsetting:

gsettings set org.gnome.desktop.wm.preferences focus-new-windows 'strict'

Thanks

Thanks to the Sovereign Tech Fund for allowing me to take the time to properly work through this as part of my broader effort around improving notifications. Thanks also to Sonny Piers and Tobias Bernard for organizing the STF project, Florian Müllner, Sebastian Wick, Carlos Garnacho, and the rest of the GNOME Shell team for reviewing my MRs, and Jonas Dreßler and Jonas Ådahl for reviewing the blogpost.

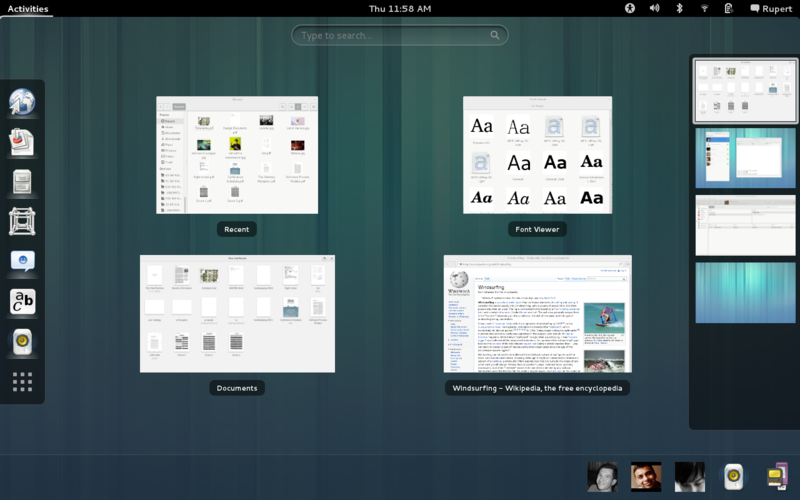

