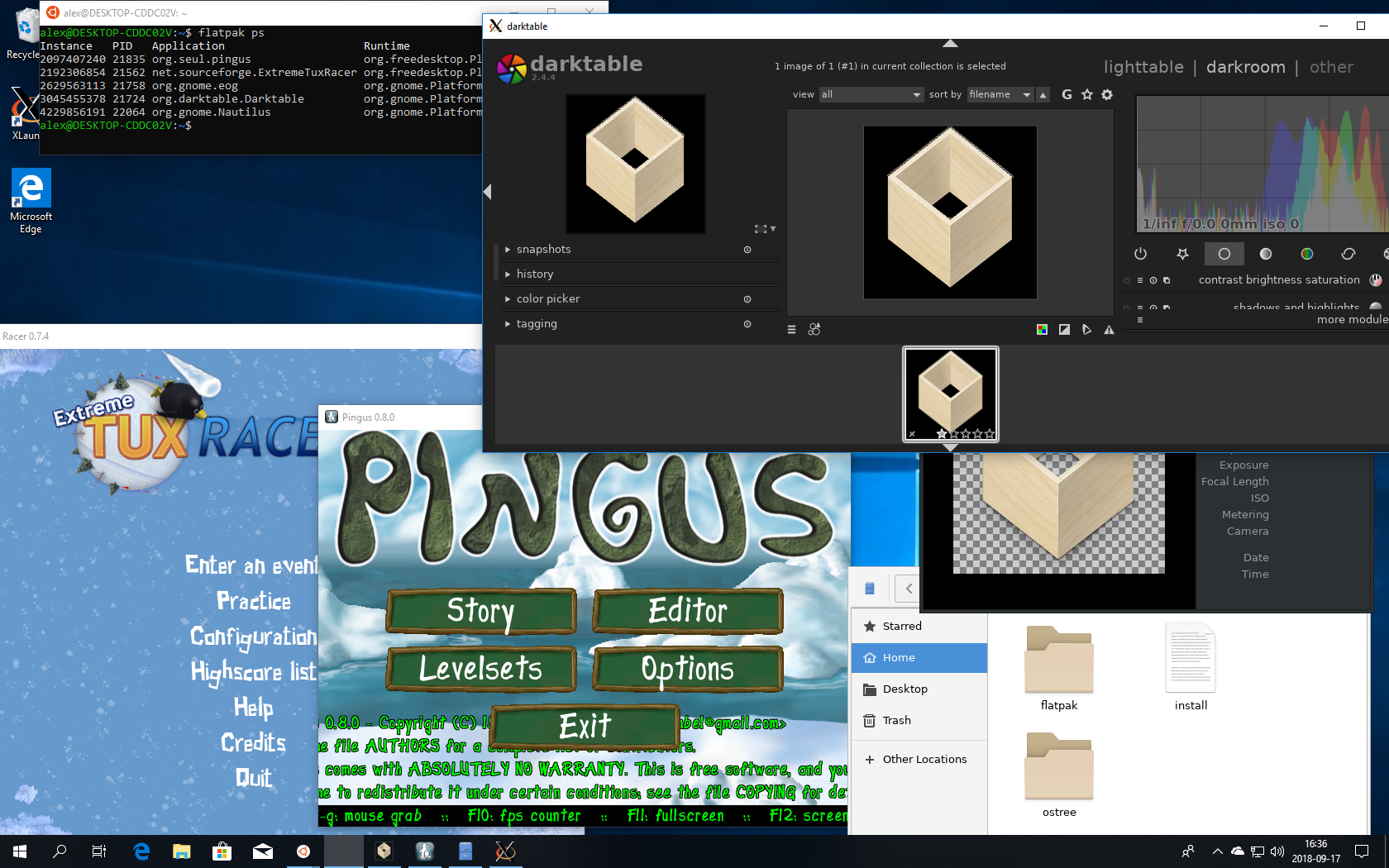

A long time ago I wrote a blog post about how to maintain a Flatpak repository.

It is still a nice, mostly up-to-date description of how Flatpak repositories work. However, it doesn’t really have a great answer to the issue the post calls “syncing updates”. In other words, it really is more about how to maintain a repository on one machine.

In practice, at least on a larger scale (e.g. Flathub), you don’t want to do all the work on a single machine like this. Instead you have an entire build-system where the repository is the last piece.

Enter flat-manager

To support this I’ve been working on a side project called flat-manager. It is a service written in Rust that manages Flatpak repositories. Recently we migrated Flathub to use it, and it seems to work quite well.

At its core, flat-manager serves and maintains a set of repos, and has an API that lets you push updates to it from your build-system. However, the way it is set up is a bit more complex, which allows some interesting features.

Core concept: a build

When updating an app, the first thing you do is create a new build, which just allocates an id that you use in later operations. Then you can upload one or more builds to this id.

This separation of the build creation and the upload is very powerful, because it allows you to upload the app in multiple operations, potentially from multiple sources. For example, in the Flathub build-system each architecture is built on a separate machine. Before flat-manager we had to collect all the separate builds on one machine before uploading to the repo. In the new system each build machine uploads directly to the repo with no middle-man.

Committing or purging

An important idea here is that the new build is not finished until it has been committed. The central build-system waits until all the builders report success before committing the build. If any of the builds fail, we purge the build instead, making it as if the build never happened. This means we never expose partially successful builds to users.
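The create, upload, then commit-or-purge flow can be modelled as a small state machine. The following is only a sketch under stated assumptions: `Build`, `State` and `finish` are hypothetical names for an in-memory model, not flat-manager's actual API or data structures.

```python
from dataclasses import dataclass, field
from enum import Enum


class State(Enum):
    UPLOADING = "uploading"
    COMMITTED = "committed"
    PURGED = "purged"


@dataclass
class Build:
    # Hypothetical in-memory stand-in for a build allocated on the server.
    build_id: int
    state: State = State.UPLOADING
    uploads: dict = field(default_factory=dict)  # arch -> list of uploaded refs

    def upload(self, arch, refs):
        # Uploads are only accepted while the build is still open.
        if self.state is not State.UPLOADING:
            raise RuntimeError(f"build {self.build_id} is {self.state.value}")
        self.uploads[arch] = list(refs)

    def commit(self):
        if self.state is not State.UPLOADING:
            raise RuntimeError(f"build {self.build_id} is {self.state.value}")
        self.state = State.COMMITTED

    def purge(self):
        # Drop everything that was uploaded, as if the build never happened.
        self.uploads.clear()
        self.state = State.PURGED


def finish(build, builder_results):
    """Commit only if every builder reported success, otherwise purge."""
    if all(builder_results.values()):
        build.commit()
    else:
        build.purge()
    return build.state
```

Each builder calls `upload` independently against the same build id, and only the central build-system calls `finish`, so a single failing architecture is enough to discard the whole build.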

Once a build is committed, flat-manager creates a separate repository containing only the new build. This allows you to use Flatpak to test the build before making it available to users.

This makes builds useful even when they were never meant to be generally available. Flathub uses this for test builds: if you make a pull request against an app, it will automatically build it and add a comment in the pull request with the build results and a link to the repo where you can test it.

Publishing

Once you are satisfied with the new build you can trigger a publish operation, which will import the build into the main repository and do all the required operations, like:

- Sign builds with GPG

- Generate static deltas for efficient updates

- Update the appstream data and screenshots for the repo

- Generate flatpakref files for easy installation of apps

- Update the summary file

- Call out to scripts that let you do local customization

The publish operation is actually split into two steps: first it imports the build result into the repo, and then it queues a separate job to do all the updates needed for the repo. This way, if multiple builds are published at the same time, the update can be shared. This saves time on the server, but it also means fewer updates to the metadata, which means less churn for users.
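To illustrate why splitting the publish helps, here is a rough sketch of the coalescing idea. It assumes a simple in-process queue; `Repo`, `publish` and `run_jobs` are made-up names for illustration and do not reflect how flat-manager's job system is actually implemented.

```python
from collections import deque


class Repo:
    # Hypothetical model of a repository with a pending-job queue.
    def __init__(self):
        self.imported = []          # build ids imported into the main repo
        self.jobs = deque()         # queued jobs, oldest first
        self.update_queued = False  # True while an update job is waiting
        self.update_runs = 0        # how many times the repo update ran

    def publish(self, build_id):
        # Step 1: import the build into the main repository.
        self.imported.append(build_id)
        # Step 2: queue a repo update, unless one is already waiting,
        # in which case this publish simply shares it.
        if not self.update_queued:
            self.jobs.append("update-repo")
            self.update_queued = True

    def run_jobs(self):
        while self.jobs:
            self.jobs.popleft()
            self.update_queued = False
            # One update covers every build imported so far.
            self.update_runs += 1
```

With this model, publishing three builds back to back and then running the queue triggers a single repo update rather than three.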

You can use whatever policy you want for how and when to publish builds. Flathub lets individual maintainers choose, but by default successful builds are published after 3 hours.

Delta generation

The traditional way to generate static deltas is to run flatpak build-update-repo --generate-static-deltas. However, this is a very computationally expensive operation that you might not want to do on your main repository server. It’s also not very flexible in which deltas it generates.

To minimize the server load flat-manager allows external workers that generate the deltas on different machines. You can run as many of these as you want and the deltas will be automatically distributed to them. This is optional, and if no workers connect the deltas will be generated locally.

flat-manager also has configuration options for which deltas should be generated. This allows you to avoid generating unnecessary deltas and to add extra levels of deltas where needed. For example, Flathub no longer generates deltas for sources and debug refs, but we have instead added multiple levels of deltas for runtimes, allowing you to go efficiently to the current version from either one or two versions ago.
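A policy like the Flathub one described above could be expressed roughly as follows. This is a sketch only: `DELTA_DEPTH`, `ref_kind` and `deltas_to_generate` are hypothetical names, not flat-manager configuration options, and the sketch only covers deltas from older versions to the newest one.

```python
# Hypothetical policy: how many previous versions get a delta to the newest.
DELTA_DEPTH = {"runtime": 2, "app": 1}


def ref_kind(ref):
    # Flatpak refs look like "<kind>/<id>/<arch>/<branch>".
    kind, name = ref.split("/")[:2]
    # Debug and sources refs get no deltas at all in this policy.
    if name.endswith(".Debug") or name.endswith(".Sources"):
        return "skip"
    return kind


def deltas_to_generate(ref, history):
    """Return (from_commit, to_commit) pairs for a ref.

    history lists the ref's commit ids, newest first.
    """
    depth = DELTA_DEPTH.get(ref_kind(ref), 0)
    if not history:
        return []
    newest = history[0]
    return [(old, newest) for old in history[1:depth + 1]]
```

For a runtime with history `["c3", "c2", "c1", "c0"]` this yields deltas from `c2` and `c1` to `c3`, while an app only gets the delta from its previous commit.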

Subsetting tokens

flat-manager uses JSON Web Tokens to authenticate API clients. This means you can assign different permissions to different clients. Flathub uses this to give minimal permissions to the build machines. The tokens they get only allow uploads to the specific build they are currently handling.

This also allows you to hand out access to parts of the repository namespace. For instance, the GNOME project has a custom token that allows them to upload anything in the org.gnome.Platform namespace in Flathub. This way GNOME can control the build of their runtime and upload a new version whenever they want, but they can’t (accidentally or deliberately) modify any other apps.
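The idea of a token that only covers part of the namespace can be sketched with a hand-rolled HS256 JSON Web Token. The claim names `scope` and `prefixes`, and the helpers `make_token` and `may_upload`, are assumptions made for this illustration, not a description of flat-manager's real token format. A real service should use a proper JWT library and also check things like the algorithm and expiry, which this sketch omits.

```python
import base64
import hashlib
import hmac
import json


def _b64(data: bytes) -> str:
    # JWTs use URL-safe base64 without padding.
    return base64.urlsafe_b64encode(data).rstrip(b"=").decode()


def _unb64(text: str) -> bytes:
    return base64.urlsafe_b64decode(text + "=" * (-len(text) % 4))


def make_token(secret: bytes, claims: dict) -> str:
    header = _b64(json.dumps({"alg": "HS256", "typ": "JWT"}).encode())
    payload = _b64(json.dumps(claims).encode())
    signature = hmac.new(secret, f"{header}.{payload}".encode(), hashlib.sha256).digest()
    return f"{header}.{payload}.{_b64(signature)}"


def may_upload(secret: bytes, token: str, app_id: str) -> bool:
    """Check the signature, then the (hypothetical) scope and prefix claims."""
    try:
        header, payload, signature = token.split(".")
        expected = hmac.new(secret, f"{header}.{payload}".encode(), hashlib.sha256).digest()
        if not hmac.compare_digest(expected, _unb64(signature)):
            return False
        claims = json.loads(_unb64(payload))
    except ValueError:
        # Malformed token: wrong number of parts, bad base64 or bad JSON.
        return False
    if "upload" not in claims.get("scope", []):
        return False
    # Allow the prefix itself and anything below it in the namespace.
    return any(app_id == p or app_id.startswith(p + ".")
               for p in claims.get("prefixes", []))
```

A token created with `{"scope": ["upload"], "prefixes": ["org.gnome.Platform"]}` would pass the check for `org.gnome.Platform` and `org.gnome.Platform.Locale`, but not for `org.gnome.Builder`.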

Rust

I need to mention Rust here too. This is my first real experience with using Rust, and I’m very impressed by it, in particular by the sense of trust I have in the code once I get it past the compiler. The compiler caught a lot of issues, and once things built I saw very few bugs at runtime.

It can sometimes be a lot of work to express the code in a way that Rust accepts, which makes it not an ideal language for sketching out ideas. But for production code it really excels, and I can heartily recommend it!

Future work

Most of the features on the initial list for flat-manager are now in place, so I don’t expect it to see a lot of work in the near future.

However, there is one more feature that I want to see: the ability to (automatically) create subset versions of the repository. In particular, we want to produce a version of Flathub containing only free software.

I have initial plans for how this will work, but it is currently blocked on some work inside OSTree itself. I hope this will happen soon though.