Caching stuff

Generally, 5 minutes per job does not seem like a terribly long time to wait, but it adds up really quickly once you add a couple of jobs to the pipeline. First, let’s take a look at where most of the time is spent. Jobs are currently spawned in a clean environment, which means that every time we build the Rust part of librsvg, we download the whole cargo registry and all of the cargo dependencies again. That’s our first low-hanging fruit! Another side effect of the clean environment is that we build librsvg from scratch each time, so we never benefit from the incremental compilation that modern compilers offer. So let’s get started.

Cargo Registry

According to the cargo docs, a cache of the registry is stored in $CARGO_HOME (by default $HOME/.cargo). GitLab CI, though, only allows you to cache things that exist inside your project’s root (they do not need to be tracked by git). So we have to relocate $CARGO_HOME to somewhere we can extract it from. Thankfully, that’s as easy as setting $CARGO_HOME to our desired path.

    .test_template: &distro_test
      before_script:
        - mkdir -p .cargo_cache
        # Only stuff inside the repo directory can be cached
        # Override the CARGO_HOME variable to force its location
        - export CARGO_HOME="${PWD}/.cargo_cache"
      script:
        - echo foo
      cache:
        paths:
          - .cargo_cache/

What’s new in our template above, compared to part 1, are the before_script: and cache: blocks. In before_script:, we first create the .cargo_cache folder if it does not exist (cargo is probably smart enough not to need this, but ccache isn’t, so better safe than sorry). Then we export the new $CARGO_HOME location. Finally, in the cache: block we list the folder we want to cache. That’s it; now our cargo registry and downloaded crates should persist across builds!
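To check that the relocated registry is actually being restored, one option is to print what lives under $CARGO_HOME at the start of a job. The following is a minimal sketch and not part of librsvg’s config: it repeats the two setup commands from the template and assumes cargo’s usual layout, where the index and downloaded crates sit in a registry/ subfolder. The CACHE_STATE variable is a name made up for this illustration.

```shell
# Same setup as in the before_script: block of the template
mkdir -p .cargo_cache
export CARGO_HOME="${PWD}/.cargo_cache"

# Report whether a previous job left a registry behind.
# CACHE_STATE is a hypothetical helper variable, used only for this check.
if [ -d "${CARGO_HOME}/registry" ]; then
    CACHE_STATE="warm"
    du -sh "${CARGO_HOME}/registry"
else
    CACHE_STATE="cold"
fi
echo "cargo cache is ${CACHE_STATE}"
```

On the very first run of a job the cache should be reported as cold, and on later runs as warm if the cache was restored.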

Caching Rust Artifacts

The only thing needed to cache the rustc build artifacts is to add target/ to the cache: block. That’s it, I am serious.

    cache:
      paths:
        - target/

Caching C Artifacts with ccache

C and ccache, on the other hand, are sadly a completely different story. It did not help that my knowledge of C and build systems approaches zero. Thankfully, while searching I found another post from Ted Gould where he describes how ccache was set up for Inkscape. The following config ended up working for librsvg’s current autotools setup.

    .test_template: &distro_test
      before_script:
        # ccache Config
        - mkdir -p ccache
        - export CCACHE_BASEDIR=${PWD}
        - export CCACHE_DIR=${PWD}/ccache
        - export CC="ccache gcc"
      script:
        - echo foo
      cache:
        paths:
          - ccache/

I got stuck on how to actually call gcc through ccache since it depends on the build system you use (see export CC). Shout out to Christian Hergert for showing me how to do it!
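If you want to confirm that the compiler really goes through ccache, its statistics counters can help. The snippet below is a rough sketch rather than what librsvg runs: it reuses the exports from the template, zeroes the counters with ccache -z, and prints them with ccache -s after the build. The fallback to plain gcc and the placeholder build comment are my own assumptions for illustration.

```shell
# Same ccache setup as in the template
mkdir -p ccache
export CCACHE_BASEDIR="${PWD}"
export CCACHE_DIR="${PWD}/ccache"

if command -v ccache >/dev/null 2>&1; then
    # Autotools picks up the compiler from $CC
    export CC="ccache gcc"
    ccache -z    # zero the statistics counters
    # ... run the real build here (placeholder, depends on your build system) ...
    ccache -s    # show hit/miss statistics for this run
else
    # Assumption for this sketch: fall back to plain gcc if ccache is missing
    export CC="gcc"
    echo "ccache not found, building without it"
fi
```

A second run of the same job should show a growing number of cache hits if the ccache/ folder was restored.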

Cache behavior

One last thing: we want each of our jobs to have an independent cache, as opposed to one shared across the pipeline. This can be achieved with the key: directive. I am not sure exactly how it works, and I wish the docs would elaborate a bit more. In practice, the following line makes sure that each job on each branch has its own cache. For more complex configurations, I suggest looking at the GitLab docs.

    cache:
      # JOB_NAME - Each job will have its own cache
      # COMMIT_REF_SLUG - Lowercase name of the branch
      # ^ Keep different caches for each branch
      key: "$CI_JOB_NAME-$CI_COMMIT_REF_SLUG"
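To get a feel for what such a key expands to, here is a small shell sketch. The job name and branch name are made up, and slugify is a hypothetical helper that only approximates how GitLab derives CI_COMMIT_REF_SLUG as I understand the docs (lowercase, anything other than a-z and 0-9 replaced with -, cut to 63 characters, no leading or trailing -). In a real pipeline GitLab sets both variables for you.

```shell
# Hypothetical helper: approximates GitLab's slug rules for a branch name
slugify() {
    printf '%s' "$1" \
        | tr '[:upper:]' '[:lower:]' \
        | sed -e 's/[^a-z0-9]/-/g' \
        | cut -c1-63 \
        | sed -e 's/^-*//' -e 's/-*$//'
}

# Made-up values; GitLab provides the real ones inside a job
CI_JOB_NAME="fedora:test"
CI_COMMIT_REF_SLUG="$(slugify "Feature/Cache_Fix")"

CACHE_KEY="${CI_JOB_NAME}-${CI_COMMIT_REF_SLUG}"
echo "${CACHE_KEY}"    # prints: fedora:test-feature-cache-fix
```

Two jobs on the same branch, or the same job on two branches, end up with different keys and therefore different caches.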

Final config and results

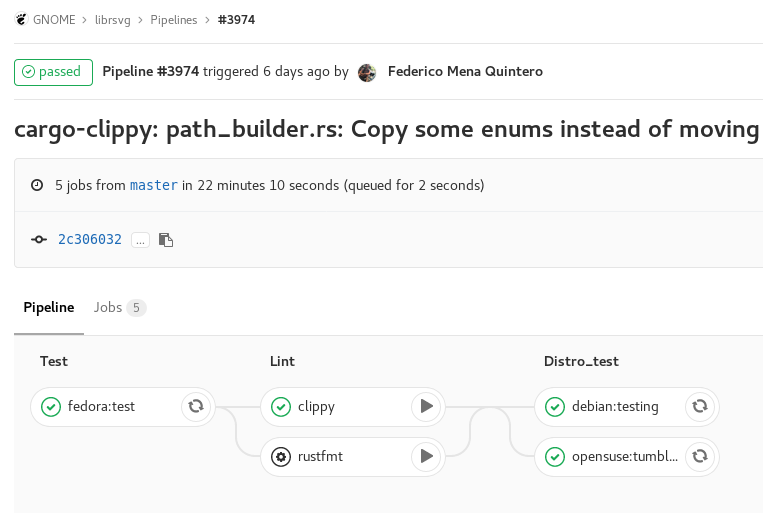

So here is the cache config as it exists today on librsvg’s master branch. This brought the build time of each job from ~4-5min, where we left it in part 2, down to ~1-1:30min. Pretty damn fast! But what if you want to do a clean build, or rule out the possibility that the cache is causing bugs and failed runs? Well, if you happen to use GitLab 10.4 or later (and GNOME does), you can clear the cache from the Web GUI. If not, you probably have to contact a GitLab administrator.

    .test_template: &distro_test
      before_script:
        # CCache Config
        - mkdir -p ccache
        - mkdir -p .cargo_cache
        - export CCACHE_BASEDIR=${PWD}
        - export CCACHE_DIR=${PWD}/ccache
        - export CC="ccache gcc"
        # Only stuff inside the repo directory can be cached
        # Override the CARGO_HOME variable to force its location
        - export CARGO_HOME="${PWD}/.cargo_cache"
      script:
        - echo foo
      cache:
        # JOB_NAME - Each job will have its own cache
        # COMMIT_REF_SLUG - Lowercase name of the branch
        # ^ Keep different caches for each branch
        key: "$CI_JOB_NAME-$CI_COMMIT_REF_SLUG"
        paths:
          - target/
          - .cargo_cache/
          - ccache/