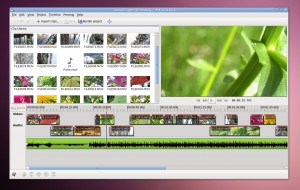

As some people noticed in the PiTiVi community, I haven’t been working that much on PiTiVi over the past few months. I did mention the work I was doing was somewhat related to PiTiVi and that hopefully I’d be able to talk about it openly.

That day has now arrived.

GStreamer Editing Services

The “GStreamer Editing Services” is a library to simplify the creation of multimedia editing applications. Based on the GStreamer multimedia framework and the GNonLin set of plugins, its goal is to suit all types of editing-related applications.

The GStreamer Editing Services are cross-platform and work on most UNIX-like platforms as well as Windows. They are released under the GNU Library General Public License (GNU LGPL).

Why ?

Because writing audio/video-editors is a lot of work, and we should make it as easy as possible for people to write such applications while being able to leverage the power of GStreamer and not requiring a PhD in nuclear engineering.

The GStreamer Editing Services (GES) introduces 3 concepts:

- GESTimeline : This is your central container, corresponding to a timeline; you can add Tracks and Layers to it. It is also a GStreamer Element, so you can use it in any GStreamer pipeline.

- GESLayer : This corresponds to the user-visible part of the Timeline. This is where the user lays out the various LayerObjects (files, transitions) they wish to use. The LayerObjects can be as simple or advanced as required (e.g. a FileSource can have a mute property, an ‘overlay’ property, a rotate video property, …) and those objects will take care of properly filling up the Tracks. Applications can create their own subclasses of LayerObjects for their custom usage, implementing the logic of what TrackObjects to create in the background, without having to worry about anything else.

- GESTrack : This corresponds to the media part of the Timeline. An audio editor will only require one audio Track, a video editor will require one Audio and one Video Track, etc … These parts don’t have to be visible to the user… nor the application developer 🙂
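To make the relationship between these three concepts more concrete, here is a minimal sketch in plain Python. It is not the GES API: every class, field and method name below (`TrackObject`, `fill_tracks`, `add_object`, …) is a hypothetical stand-in chosen for illustration, and only the idea comes from the description above, namely that a LayerObject placed in a Layer takes care of creating the right TrackObjects in each Track.

```python
from dataclasses import dataclass, field
from typing import List

SECOND = 1_000_000_000  # times are expressed in nanoseconds in this sketch


@dataclass
class TrackObject:
    """One piece of media placed in a single Track (hypothetical model)."""
    uri: str
    start: int      # position in the timeline
    duration: int   # length in the timeline


@dataclass
class Track:
    """Media side of the timeline: one Track per media type."""
    media_type: str  # "audio" or "video"
    objects: List[TrackObject] = field(default_factory=list)


@dataclass
class FileSource:
    """A LayerObject: the thing the user actually manipulates."""
    uri: str
    start: int
    duration: int
    mute: bool = False

    def fill_tracks(self, tracks: List[Track]) -> None:
        # Create one TrackObject per Track, skipping audio when muted.
        for track in tracks:
            if self.mute and track.media_type == "audio":
                continue
            track.objects.append(
                TrackObject(self.uri, self.start, self.duration))


@dataclass
class Layer:
    """User-visible side of the timeline."""
    objects: List[FileSource] = field(default_factory=list)


@dataclass
class Timeline:
    """Central container holding Tracks and Layers."""
    tracks: List[Track] = field(default_factory=list)
    layers: List[Layer] = field(default_factory=list)

    def add_object(self, layer: Layer, obj: FileSource) -> None:
        # The application only touches the Layer; Tracks get filled for it.
        layer.objects.append(obj)
        obj.fill_tracks(self.tracks)
```

With this model, an application would create a `Timeline` with one audio and one video `Track`, add a `Layer`, and then only ever call `add_object()`; a muted `FileSource` ends up in the video Track but not in the audio one.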

Why another library in addition to GNonLin?

The answer to that is that GNonLin will remain a media-agnostic set of elements whose goals are to be able to easily use parts of streams (i.e. from GStreamer elements) and arrange them through time. While this makes GNonLin very flexible… it also means there is quite a bit of extra code to write before getting to the ‘video-editing’ concepts.
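As a rough illustration of what “using parts of streams and arranging them through time” means, the sketch below maps a position in the arrangement back to a position inside one source stream. It is a plain Python model, not GNonLin code: the field names are loosely modelled on the idea of a start, a duration, an offset into the media and a priority, and the rule that the lowest priority value wins on overlap is an assumption of this sketch.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple

SECOND = 1_000_000_000  # nanoseconds


@dataclass
class Source:
    """A part of a stream placed in time (hypothetical model)."""
    name: str
    start: int         # where it begins in the arrangement
    duration: int      # how long it lasts in the arrangement
    media_start: int   # offset inside the underlying stream
    priority: int = 0  # assumption: lower value wins when sources overlap


def resolve(sources: List[Source], position: int) -> Optional[Tuple[str, int]]:
    """Return (source name, position inside that source) or None for a gap."""
    active = [s for s in sources
              if s.start <= position < s.start + s.duration]
    if not active:
        return None
    top = min(active, key=lambda s: s.priority)
    return top.name, top.media_start + (position - top.start)
```

For example, a source placed at 0 s for 4 s with a `media_start` of 10 s resolves position 2 s to second 12 of its stream. Nothing here knows about files, transitions or muting, which is the kind of extra ‘video-editing’ logic that has to be written on top.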

Can I write a slideshow/audio/video/cutter/<crack-editor-idea> with it ?

Short answer : yes. Longer version : yes, but you might have to write your own LayerObject subclasses if you have some really specific use-cases in mind.

Where can I find it ?

The git repository is located here. Documentation can be generated in docs/libs/ if you have gtk-doc, and you can find some minimalistic examples in tests/examples/. I will be gradually adding more documentation and examples.

GstDiscoverer, Profile System and EncodeBin

GES alone isn’t enough to end up with a functional editor. There are a couple of peripheral multimedia-related tasks that need to be done, and to solve that I’ve also been working on some other items. All of the following can be found in the gst-convenience repository.

GstDiscoverer

Those of you familiar with PiTiVi/Jokosher/Transmageddon/gst-python development might already be using the Python variant of this code. The goal is to be able to get as much information (what’s the duration, what tags does it have, how many streams, of what type, using what codecs, …) from a given URI (file, network stream, …) as fast as possible. While the code already existed, it was only Python-based. So I rewrote it in C, with several improvements over the original. It can be used synchronously or asynchronously, and comes with a command-line test application in tests/examples/.

EncodeBin

Creating encoding pipelines, despite what many people might think, is not a trivial business and requires constantly thinking about a lot of little details. In order to make this as smooth as possible, I have written a convenience element for encoding : encodebin. It only has one required property : a GstEncodingProfile. Once you have set that property, you can then add that element to your pipeline, connect the various streams you wish to encode, connect to your sink element… and set the pipeline to PLAYING.

Encoding profiles are not a new idea, and there have been many discussions in the past on how to properly solve this problem. Instead of concentrating on how to best store the various profiles for encoding… I decided to tackle the beast the other way round and, after having sent an RFC to the GStreamer mailing-list and collected as many use-cases as possible, came up with a proposal for a C-based encoding profile description (see gst-libs/gst/profile/gstprofile.h). I still have some more use-cases to test and a few extra things to implement in encodebin, but so far the current profile system seems to fit all scenarios.
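To give a feel for what such a profile description carries, here is a small Python model of one. It is only a sketch and does not mirror gstprofile.h: the class names, the `presence` field and the `accepts()` helper are assumptions made for illustration. The idea it shows is that a profile names a container format plus one entry per stream to encode, each described by a caps-like format string.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class StreamProfile:
    """Description of one encoded stream (hypothetical model)."""
    kind: str          # "audio" or "video"
    caps: str          # target encoded format
    presence: int = 1  # assumption: exact stream count, 0 = any number


@dataclass
class EncodingProfile:
    """Container format plus the streams that go into it."""
    name: str
    container_caps: str
    streams: List[StreamProfile] = field(default_factory=list)

    def accepts(self, kinds: List[str]) -> bool:
        """Check whether a set of incoming stream kinds fits the profile."""
        for sp in self.streams:
            if sp.presence and kinds.count(sp.kind) != sp.presence:
                return False
        known = {sp.kind for sp in self.streams}
        return all(kind in known for kind in kinds)


# Example profile: Theora video and Vorbis audio in an Ogg container.
OGG_PROFILE = EncodingProfile(
    name="ogg-theora-vorbis",
    container_caps="application/ogg",
    streams=[
        StreamProfile("video", "video/x-theora"),
        StreamProfile("audio", "audio/x-vorbis"),
    ],
)
```

An application would hand such a description to the encoding element instead of picking and wiring encoders and muxers by hand; how the description is stored on disk is a separate question.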

The remaining problem to solve… is to figure out how to store those encoding profiles in a persistent way for all applications to benefit from them.

Next steps

Using all of the above in PiTiVi 🙂 But also looking forward to seeing comments/feedback/requests from people who wish to use any of the above in their applications.

In addition to that, I will be at the Maemo Barcelona Long Weekend starting from Friday, where we will try to pin down the UI and code requirements for creating a video editor for Maemo. All of the above should make it much easier to do than anticipated 🙂