Today I woke up to a link to an interview with the current Fedora Project Leader, Matthew Miller. Brodie, who conducted the interview, mentioned that Miller was the one who reached out to him. The background of this video is the currently ongoing issue regarding OBS, Bottles and the Fedora project, which Niccolò made an excellent video explaining and summarizing. You can also find the article over at thelibre.news. “Impressive” as this story is, it’s for another time.

What I want to talk about in this post is the outrageous, smearing and straight up slanderous statements about Flathub that the Fedora Project Leader made during the interview.

I am not directly involved with the Flathub project (a lot of my friends are), however I am a maintainer of the GNOME Flatpak Runtime, and a contributor to the Freedesktop-sdk and ElementaryOS Runtimes. I also maintain applications that get published on Flathub directly. So you can say I am someone who is invested in the project and has put a lot of time into it. It was extremely frustrating to hear what would only qualify as reddit-level, completely made up arguments with no basis in reality coming directly from Matthew Miller.

Below is a transcript, slightly edited for brevity, of all the times Flathub and Flatpak were mentioned. You can refer to the original video as well, as there were many more interesting things Miller talked about.

It starts off with an introduction and some history and around the 10-minute mark, the conversation starts to involve Flathub.

Miller: [..] long way of saying I think for something like OBS we’re not really providing anything by packaging that.

Miller: I think there is an overall place for the Fedora Flatpaks, because Flathub part of the reason its so popular (there’s a double edged sword), (its) because the rules are fairly lax about what can go into Flathub and the idea is we want to make it as easy for developers to get their things to users, but there is not really much of a review

This is not the main reason why Flathub is popular; it’s a lot more involved and interesting in practice. I will go into this in a separate post, hopefully soon.

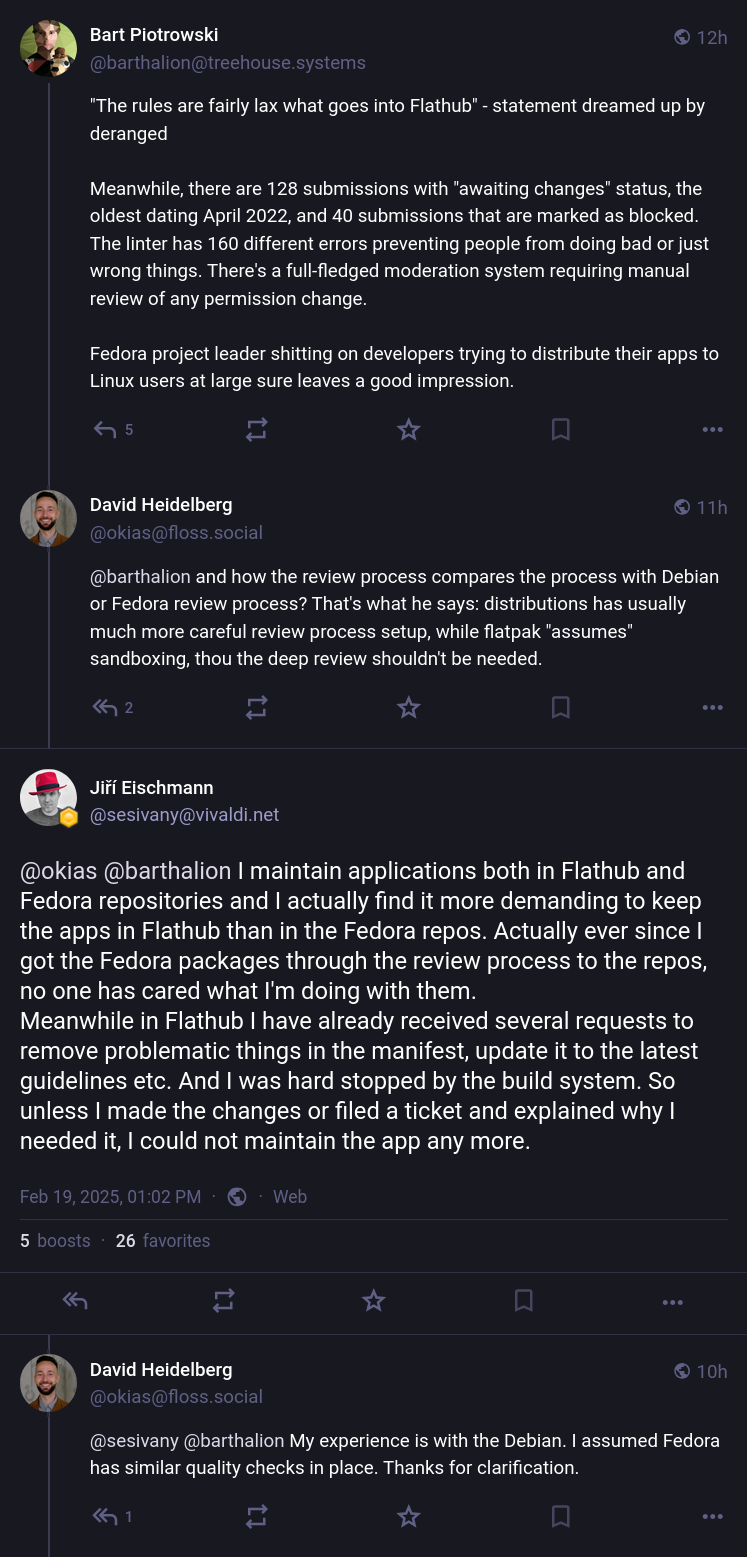

Claiming that Flathub does not have any review process or inclusion policies is straight up wrong and incredibly damaging. It’s the kind of thing we’ve heard ad nauseam from Flathub haters, but never from a person in charge of one of the most popular distributions and that should have really really known better.

You can find the Requirements in the Flathub documentation if you spend 30 seconds to google for them, along with the submission guidelines for developers. If those documents qualify as a wild west and free for all, I can’t possibly take you seriously.

I haven’t maintained a Linux distribution package myself, so I won’t go into comparisons between Flathub and other distros. However, you can find people, with red hats even, who do so and have talked about it. Of course these are one-off examples and social bias on my part, but they prove how laughable the claim that things are not reviewed is. Additionally, the most popular story I hear from developers is how Flathub requirements are often stricter and sometimes cause annoyances.

Additionally, Flathub has been the driving force behind encouraging applications to update their metadata, completely reworking the user experience and handling of permissions, and making them prominent to the user (to the point where even network access is marked as potentially unsafe).

Miller: [..] the thing that says verified just says that it’s verified from the developer themselves.

No, verified does not mean that the developer signed off on it. Let’s take another 30 seconds to look into the Flathub documentation page about exactly this.

A verified app on Flathub is one whose developer has confirmed their ownership of the app ID […]. This usually also may mean that either the app is maintained directly by the developer or a party authorized or approved by them.

It still went through the review process and all the rest of requirements and policies apply. The verified program is basically a badge to tell users this is a supported application by the upstream developers, rather than the free for all that exists currently where you may or may not get an application released from years ago depending on how stable your distribution is.

Sidenote, did you know that 1483/3003 applications on Flathub are verified as of the writing of this post? As opposed to maybe a dozen of them at best in the distributions. You can check for yourself.

Miller: .. and it doesn’t necessarily verify that it was build with good practices, maybe it was built in a coffee shop on some laptop or whatever which could be infected with malware or whatever could happen

Again, if Miller had made the bare minimum effort, he would have come across the Requirements page which describes exactly how an application in Flathub is built, instead of further spreading made up takes about the infrastructure. I can’t stress enough how damaging it has been throughout the years to claim that “Flathub may be potential Malware”. Why is it malware? Because I don’t like its vibes and I just assume so.

I am sure that if I did the same about Fedora in a very, very public medium with thousands of listeners, I would probably end up with a lawyer’s letter from Red Hat.

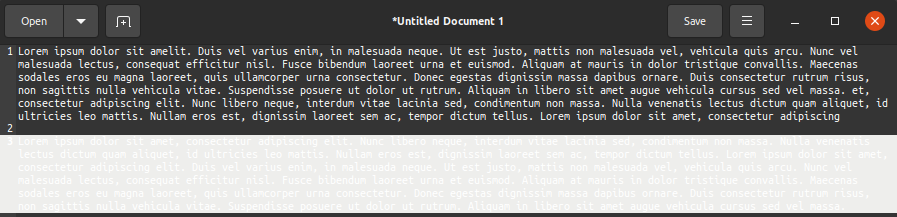

Now, applications in Flathub are all built without network access, on Flathub’s build servers, using flatpak-builder and Flatpak manifests. Manifests are a declarative format, which means all the sources required to build the application are known and validated/checksummed, and the build is reproducible to the extent possible. You can easily inspect the resulting binaries, and the manifest used to build the application ends up in /app/manifest.json, which you can inspect with the following command and use to rebuild the application yourself exactly like it’s done in Flathub.

    $ flatpak run --command=cat org.gnome.TextEditor /app/manifest.json
    {
      "id" : "org.gnome.TextEditor",
      "runtime" : "org.gnome.Platform",
      "runtime-version" : "47",
      "runtime-commit" : "d93ca42ee0c4ca3a84836e3ba7d34d8aba062cfaeb7d8488afbf7841c9d2646b",
      "sdk" : "org.gnome.Sdk",
      "sdk-commit" : "3d5777bdd18dfdb8ed171f5a845291b2c504d03443a5d019cad3a41c6c5d3acd",
      "command" : "gnome-text-editor",
      "modules" : [
        {
    ...

The exception to this is, naturally, proprietary applications, along with a handful of applications (under an OSI-approved license) where Flathub developers helped the upstream projects integrate a direct publishing workflow into their deployment pipelines. I am aware of Firefox and OBS as the main examples, both of which publish to Flathub through their Continuous Deployment (CI/CD) pipeline the same way they generate their builds for the other platforms they support, and the code for how it happens is available in their repos.
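To make “known and checksummed” a little more concrete, here is a minimal Python sketch of the kind of check a declared `sha256` makes possible. It is only an illustration and not flatpak-builder’s actual code: the `source` entry, its URL, the fake tarball bytes and the helper name `sha256_matches` are all made up for the example.

```python
import hashlib


def sha256_matches(data: bytes, expected_hex: str) -> bool:
    """Return True if the SHA-256 digest of `data` equals `expected_hex`."""
    return hashlib.sha256(data).hexdigest() == expected_hex.lower()


# Stand-in for a downloaded release tarball (hypothetical content).
tarball = b"pretend this is a release tarball"

# Hypothetical source entry, shaped like an "archive" source in a manifest.
# The checksum is computed here only so the example is self-contained; in a
# real manifest it is a fixed string that reviewers can see and compare.
source = {
    "type": "archive",
    "url": "https://example.org/releases/demo-1.0.tar.xz",
    "sha256": hashlib.sha256(tarball).hexdigest(),
}

# An untouched download passes, a tampered one does not.
print(sha256_matches(tarball, source["sha256"]))
print(sha256_matches(tarball + b" plus something extra", source["sha256"]))
```

The point is simply that once the expected digest is written down in a public manifest, anyone can redo this comparison on their own machine.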

If you have issues trusting Mozilla’s infrastructure, then how are you trusting Firefox in the first place? Good luck auditing Gecko to make sure it does not start to ship malware. Surely distribution packagers audit every single change that happens from release to release for each package they maintain and can verify no malicious code ever gets merged. The xz backdoor was very recent, and it was identified by pure chance; none of this prevented it.

Then Miller proceeds to describe the Fedora build infrastructure and afterward we get into the following:

Miller: I will give an example of something I installed in Flathub, I was trying to get some nice gui thing that would show me like my system Hardware stats […] one of them ones I picked seemed to do nothing, and turns out what it was actually doing, there was no graphical application it was just a script, it was running that script in the background and that script uploaded my system stats to a server somewhere.

Firstly, we don’t really have many details to be able to identify which application it was; I would be very curious to know. Now, speculating on my part, the most popular application matching that description is Hardware Probe, and it absolutely has a GUI, no matter how minimal. It also asks you before uploading.

Maybe there is an org.upload.MySystem application that I don’t know about, and it ended up doing what was in the description. Again, I would love to know more and update the post if you could recall!

Miller: No one is checking for things like that and there’s no necessarily even agreement that that was was bad.

Second time! Again with the “There is no review and inclusion process in Flathub” narrative. There absolutely is, and these are the kinds of things that get brought up during it.

Miller: I am not trying to be down on Flathub because I think it is a great resource

Yes, I can see that. However, in your ignorance you were something much worse than “Down”. This is pure slander and defamation, coming from the current “Fedora Project Leader”, the “Technically Voice of Fedora” (direct quote from a couple of seconds later). All the statements made above are manufactured and inaccurate: myths that you’d hear from people who never asked, looked or cared about any of this, because the moment you do, it’s obvious how laughable all these claims are.

Miller: And in a lot of ways Flathub is a competing distribution to Fedora’s packaging of all applications.

Precisely, he is spot on here, and I believe this is what kept Miller willfully ignorant and caused him to happily pick the first anti-Flatpak/anti-Flathub arguments he came across on reddit and repeat them verbatim without putting any thought into it. I do not believe Miller is malicious on purpose; I do truly believe he means well and does not know better.

However, we can’t ignore the conflict that arises from his current job position as a big influence on why incidents like this happen. Nor can we ignore the influence and damage this causes when it comes from a person in Matthew Miller’s position.

Moving on:

Miller: One of the other things I wanted to talk about Flatpak, is the security and sandboxing around it.

Miller: Like I said the stuff in the Flathub are not really reviewed in detail and it can do a lot of things:

Third time with the no review theme. I was fuming when I first heard this, and I am still very, very angry about it, if you can’t tell. Not only is this an incredibly damaging lie, as covered above, it gets repeated over and over again.

Miller: With Flatpak basically the developer defines what the permissions are. So there is a sandbox, but the sandbox is what the person who put it there is, and one can imagine that if you were to put malware in there you might make your sandboxing pretty loose.

Brodie: One of the things you can say is “I want full file system access, and then you can do anything”

No. Again, as stated in the Flathub documentation, permissions are very carefully reviewed, and updates get blocked when permissions change until another review has happened.
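The idea of holding an update back when its permissions change can be sketched in a few lines of Python. This is a rough illustration of the concept only and says nothing about how Flathub’s real tooling is implemented: the function names `permission_changes` and `needs_review` are hypothetical, and the two permission lists are invented examples written in the style of a manifest’s `finish-args`.

```python
def permission_changes(old_args, new_args):
    """Compare two lists of static permissions and report what changed."""
    old, new = set(old_args), set(new_args)
    return {"added": sorted(new - old), "removed": sorted(old - new)}


def needs_review(old_args, new_args):
    """Any difference in permissions is treated as a reason to stop and look."""
    changes = permission_changes(old_args, new_args)
    return bool(changes["added"] or changes["removed"])


# Hypothetical permissions of the currently published version...
old_args = ["--share=network", "--socket=wayland"]
# ...and of a proposed update that suddenly wants the whole filesystem.
new_args = ["--share=network", "--socket=wayland", "--filesystem=host"]

print(permission_changes(old_args, new_args))
print(needs_review(old_args, new_args))
```

Because the permissions are declared as data rather than buried in code, a change like the extra `--filesystem=host` above is trivial to spot mechanically before a human ever looks at it.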

Miller: Android and Apple have pretty strong leverage against application developers to make applications work in their sandbox

Brodie: the model is the other way around where they request permissions and then the user grants them whereas Flatpak, they get the permission and then you could reject them later

This is partially correct. I will talk about the first part, about leverage, in a bit, but here’s a primer on how permissions work in Flatpak and how it compares to the sandboxing technologies in iOS and Android.

In all of them we have a separation between Static and Dynamic permissions. Static are the ones the application always has access to, for example the network, or the ability to send you notifications. These are always there and are usually mentioned at install time. Dynamic permissions are the ones where the application has to ask the user before being able to access a resource. For example, opening a file chooser dialog so the user can upload a file: the application then only gets access to the file the user consented to, or to nothing at all. Another example is using the camera on the device and capturing photos/video from it.

Brodie here gets a bit confused and only mentions static permissions. If I had to guess it would be cause we usually refer to the dynamic permissions system in the Flatpak world as “Portals”.
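Static permissions are the ones you can read straight out of an application’s Flatpak metadata, which is an INI-style key file with a `[Context]` section. Here is a minimal Python sketch that lists them. The sample metadata and the application ID `org.example.Demo` are made up, and the helper name `static_permissions` is hypothetical. Anything the application obtains dynamically through Portals does not show up in this section at all, since it is granted by the user at the moment it is needed.

```python
import configparser

# Hypothetical metadata for an imaginary application.
SAMPLE_METADATA = """\
[Application]
name=org.example.Demo
runtime=org.gnome.Platform/x86_64/47

[Context]
shared=network;ipc;
sockets=wayland;fallback-x11;
devices=dri;
"""


def static_permissions(metadata_text: str) -> dict:
    """Return the static permissions declared in the [Context] section."""
    parser = configparser.ConfigParser(interpolation=None, delimiters=("=",))
    parser.optionxform = str  # keep keys exactly as written
    parser.read_string(metadata_text)
    if not parser.has_section("Context"):
        return {}
    # Values are semicolon-separated lists, usually with a trailing ";".
    return {
        key: [item for item in value.split(";") if item]
        for key, value in parser["Context"].items()
    }


for kind, values in static_permissions(SAMPLE_METADATA).items():
    print(kind, values)
```

In this made-up example there is no `filesystems` entry, so the application would have to go through the file chooser portal to touch any of the user’s files.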

Miller: it didn’t used to be that way and and in fact um Android had much weaker sandboxing like you could know read the whole file system from one app and things like that […] they slowly tightened it and then app developers had to adjust

Miller: I think with the Linux ecosystem we don’t really have the way to tighten that kind of thing on app developers … Flatpak actually has that kind of functionality […] with portals […] but there’s no not really a strong incentive for developers to do that because, you know well, first of all of course my software is not going to be bad so why should I you know work on sandboxing it, it’s kind of extra work and I I don’t know I don’t know how to solve that. I would like to get to the utopian world where we have that same security for applications and it would be nice to be able to install things from completely untrusted places and know that they can’t do anything to harm your system and that’s not the case with it right now

As with any technology and its adoption, we don’t get to perfection from day 1. Static permissions are necessary to provide a migration path for existing applications, and to bridge the gap until the appropriate, much more complex dynamic permission mechanisms have been developed. For example, up until iOS 18 it wasn’t possible to give applications access to a subset of your contacts list. Think of it like having to give access to your entire filesystem instead of the specific files you want. Similarly, partial-only access to your photos library arrived a couple of years ago in iOS and Android.

In an ideal world all permissions are dynamic, but this takes time and resources and adaptation for the needs of applications and the platform as development progresses.

Now about the leverage part.

I do agree that “the Linux ecosystem” as a whole does not have any leverage on application developers. This is because Miller is looking in the wrong place for it. There is no Linux ecosystem, but rather platforms that developers target.

GNOME and KDE, as they distribute all their applications on Flathub, absolutely have leverage. Similarly, Flathub itself has leverage by changing the publishing requirements and inclusion guidelines (which I keep being told don’t exist). Every other application that wants to publish also has to adhere to the rules on Flathub. ElementaryOS and their AppCenter have leverage on developers. Canonical has the same pull as well with the Snap Store. Fedora, on the other hand, doesn’t have any leverage because the Fedora Flatpak repository is irrelevant, broken and nobody wants to use it.

[..] The xz backdoor gets brought up when discussing dependencies and how software gets composed together.

Miller: we try to keep all of those things up to date and make sure everything is patched across the dist even when it’s even when it’s difficult. I think that really is one of the best ways to keep your system secure and because the sandboxing isn’t very strong that can really be a problem, you know like the XZ thing that happened before. If XZ is just one place it’s not that hard of an update but if you’ve got a 100 Flatpaks from different places […] and no consistency to it it’s pretty hard to manage that

I am not going to get in depth about this problem domain and the arguments over it. In fact, I have been writing another blog post about it for a while, which I hope to publish shortly. Till then I cannot recommend highly enough Emmanuele’s and Lennart’s blog posts, as well as one of the very early posts from Alex, written when Flatpak was in its early design phase, on the shortcomings of the current distribution model.

Now about bundled dependencies. The concept of Runtimes has served us well so far, and we have been doing a pretty decent job providing most of the things applications need but would not want to bundle themselves. This makes the Runtimes a single place for most of the high profile dependencies (curl, openssl, webkitgtk and so on) that you’d frequently update for security vulnerabilities, and once that’s done they roll out to everyone without needing to do anything manual to update the applications or even rebuild them.

Applications only need to bundle their direct dependencies, and as mentioned above, the Flatpak manifest includes the exact definition of all of them. They are available for anyone to inspect, and there’s tooling that can scan them and hopefully in the future alert us.
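As a rough idea of what “scanning” a manifest can look like, here is a minimal Python sketch that walks the `modules` list of a manifest and prints every bundled module, including nested ones. It is an assumption-laden illustration rather than any existing tool: the sample manifest, the module names and the helper `bundled_modules` are invented for the example.

```python
import json

# Hypothetical manifest for an imaginary application.
SAMPLE_MANIFEST = json.loads("""
{
  "id": "org.example.Demo",
  "runtime": "org.gnome.Platform",
  "modules": [
    {"name": "libfoo", "sources": []},
    {
      "name": "demo",
      "modules": [
        {"name": "libbar", "sources": []}
      ],
      "sources": []
    }
  ]
}
""")


def bundled_modules(modules, depth=0):
    """Yield (depth, name) for every module, descending into nested ones."""
    for module in modules:
        if isinstance(module, str):
            # A plain string refers to a module defined in a separate file.
            yield depth, module
            continue
        yield depth, module.get("name", "<unnamed>")
        yield from bundled_modules(module.get("modules", []), depth + 1)


names = [name for _, name in bundled_modules(SAMPLE_MANIFEST["modules"])]
for depth, name in bundled_modules(SAMPLE_MANIFEST["modules"]):
    print("  " * depth + name)
```

A real scanner would go on to compare each module’s name and version against vulnerability data, but even this much shows that the list of bundled dependencies is plain data anyone can enumerate.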

If the Docker/OCI model, where you end up bundling the entire toolchain and runtime and then have to maintain them, keep up with updates and rebuild your containers, is good enough for all those enterprise distributions, then the Flatpak model, which is much more efficient, streamlined, thought out and much, much less maintenance intensive, is probably fine.

Miller: part of the idea of having a distro was to keep all those things consistent so that it’s easier for everyone, including the developers

As mentioned above, this is nothing that fundamentally differs from the leverage that Flathub and the platform developers have.

Brodie: took us 20 minutes to get to an explanation [..] but the tldr Fedora Flatpak is basically it is built off of the Fedora RPM build system and because that it is more well tested and sort of intended, even if not entirely for the Enterprise, designed in a way as if an Enterprise user was going to use it the idea is this is more well tested and more secure in a lot of cases not every case.

Miller: Yea that’s basically it

This is a question/conclusion that Brodie reaches after the previous statements, and by far the most enraging thing in this interview. This is also an excellent example of the damage Matthew Miller caused today, and if I were a Flathub developer I would stop at nothing short of a public apology from the Fedora project itself. Hell, I want this just as an application developer who publishes on it. The interview has been basically shitting on both the developers of Flathub and the people who choose to publish in it. And if that’s not enough, there should be an apology just out of decency. Dear god..

Brodie: how should Fedora handle upstreams that don’t want to be packaged like the OBS case here where they did not want there to be a package in Fedora Flatpak or another example is obviously bottles which has made a lot of noise about the packaging

Lastly I want to touch on this closing question in light of recent events.

Miller: I think we probably shouldn’t do it. We should respect people’s wishes there. At least when it is an open source project working in good faith there. There maybe some other cases where the software, say theoretically there’s somebody who has commercial interests in some thing and they only want to release it from their thing even though it’s open source. We might want to actually like, well it’s open source we can provide things, we in that case we might end up you having a different name or something but yeah I can imagine situations where it makes sense to have it packaged in Fedora still but in general especially and when it’s a you know friendly successful open source project we should be friendly yeah. The name thing is something people forget history like that’s happened before with Mozilla with Firefox and Debian.

This is an excellent idea! But it gets better:

Miller: so I understand why they strict about that but it was kind of frustrating um you know we in Fedora have basically the same rules if you want to take Fedora Linux and do something out of it, make your own thing out of it, put your own software on whatever, you can do that but we ask you not to call it Fedora if it’s a fedora remix brand you can use in some cases otherwise pick your own name it’s all open source but you know the name is ours. yeah and I the Upstream as well it make totally makes sense.

Brodie: yeah no the name is completely understandable especially if you do have a trademark to already even if you don’t like it’s it’s common courtesy to not name the thing the exact same thing

Miller: yeah I mean and depending on the legalities like you don’t necessarily have to register a trademark to have the trademark kind of protections under things so hopefully lawyers you can stay out of the whole thing because that always makes the situations a lot more complicated, and we can just get along talking like human beings who care about making good software and getting it to users.

And I completely agree with all of these, all of it. But let’s break it down a bit because no matter how nice the words and intentions it hasn’t been working out this way with the Fedora community so far.

First, Miller agrees the Fedora project should respect application developers’ wishes to not have their application distributed by Fedora, and that it should rather be a renamed version if Fedora wishes to keep distributing it.

However, every single time a developer has asked for this, they have been ridiculed, laughed at and straight up bullied by Fedora packagers and the rest of the Fedora community. It has been a similar response from other distribution projects and companies as well; it’s not just Fedora. You can look at the Bottles story for the most recent example. It is very nice to hear Miller’s intentions, but they mean nothing in practice.

Then Miller proceeds to assure us that he understands why naming and branding is such a big deal to those projects (unlike the rest of the Fedora community, again). He further informs us that Fedora has the exact same policies and asks the same from people who want to fork Fedora. Which makes the treatment that every single application developer has received when asking for the exact same thing even more outrageous.

What I didn’t know is that in certain cases you don’t even need to have a trademark yet to be covered by some of the protections, depending on jurisdiction and all.

And last we come into lawyers. Neither Fedora nor application developers would want it to ever come to this, and it was stated multiple times by Bottles developers that they don’t want to have to file for a trademark so they can be taken seriously. Similarly, OBS developers said how resorting to legal action would be the last thing they would want to do and would rather have the issue resolved before that. But it took until OBS, a project of a high enough profile, with the resources required to acquire a trademark and to threaten legal action before the Fedora Leadership cared to treat application developers like human beings and get the Fedora packagers and community members to comply. (Something which they had stated multiple times they simply couldn’t do).

I hate all of this. Fedora and all the other distributions need to do better. They all claim to care about their users but happily keep shipping broken and misconfigured software to them over the upstream version, just because it’s what aligns with their current interests. In this case it is the promotion of Fedora tooling and Fedora Flatpaks over the application in Flathub they have no control over. In previous incidents it was about branding applications like the rest of the system even though it was making them unusable. And I can find and list a bunch of examples from other distributions just as easily.

They don’t care about their users, they care about their bottom line first and foremost. Any civil attempts at fixing issues get ignored and laughed at, up until there is a threat of a legal action or a big enough PR damage, drama and shitshow that they can’t ignore it anymore and have to backtrack on them.

This is my two angry cents. Overall I am not exactly sure how Matthew Miller managed, in a rushed and desperate attempt at damage control for the OBS drama, not only to make it worse, but to piss off the entire Flathub community at the same time. But what’s done is done; let’s see what we can do to address the issues that have festered and persisted for years now.