Custom Images for everyone!

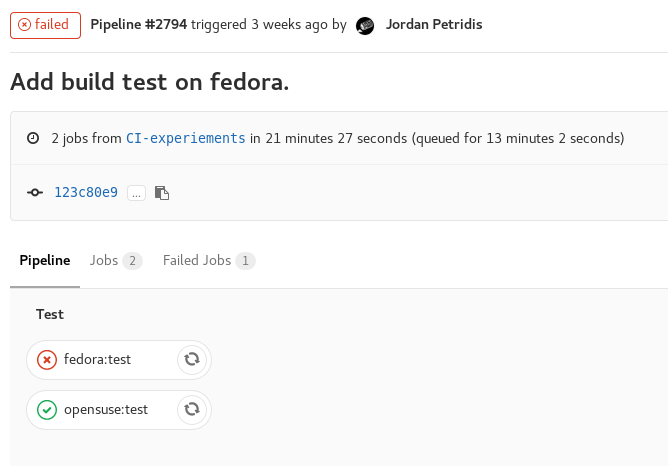

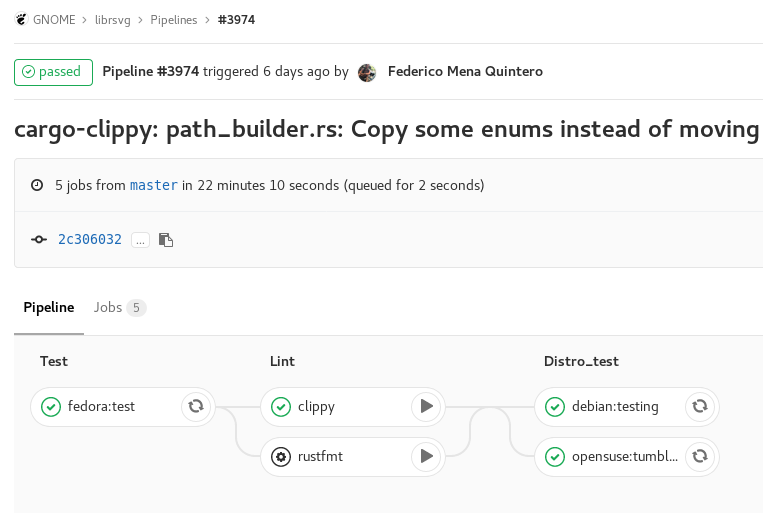

In the previous post we set up a small Pipeline that builds and runs the test suite across some distributions, checks the code formatting, and (Soon™) will deny clippy warnings.

In this part we will try to reduce the time it takes for the Pipeline to run by using custom container images bundled with the dependencies needed to build Librsvg, instead of downloading and installing them each time.

Creating an image

First we will need to find a base image of the distro we want to build upon. Most distributions have official images published on Docker Hub. I am gonna use the fedora image, but the same process works for any image.

We will create a new file named fedora_latest.yml, but the name doesn’t really matter. Then add the following content to it:

    FROM fedora:latest

    RUN dnf -y update && dnf -y upgrade && \
        dnf install -y gcc rust rust-std-static cargo make vala \
        automake autoconf libtool gettext itstool \
        gdk-pixbuf2-devel gobject-introspection-devel \
        gtk-doc git redhat-rpm-config gtk3-devel ccache \
        libxml2-devel libcroco-devel cairo-devel pango-devel && \
        dnf clean all

The first line, FROM fedora:latest, specifies the base image we will use. The part before the colon (fedora) names the repository it comes from, and the part after it (latest) is the tag. If no registry is given, docker looks in Docker Hub by default.
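To make that defaulting concrete, here is a small shell sketch of how a short image name gets filled in. It is a simplified illustration and not docker's actual code: the helper name expand_ref is made up for this post, and digest references (@sha256:...) are ignored.

```shell
# Hypothetical helper: print the fully qualified form of an image reference.
# Runs in a subshell so its variables do not leak into the caller.
expand_ref() (
    ref=$1
    # No tag on the final path component: docker assumes ":latest".
    case "${ref##*/}" in
        *:*) ;;
        *) ref="${ref}:latest" ;;
    esac
    case "$ref" in
        */*)
            # The first component only counts as a registry host if it
            # contains a dot or a port, or is "localhost".
            case "${ref%%/*}" in
                *.*|*:*|localhost) echo "$ref" ;;
                *) echo "docker.io/${ref}" ;;
            esac ;;
        *)
            # Bare name such as "fedora": an official image on Docker Hub.
            echo "docker.io/library/${ref}" ;;
    esac
)

expand_ref fedora:latest    # docker.io/library/fedora:latest
expand_ref librsvg/fedora   # docker.io/librsvg/fedora:latest
```

A reference that already names a registry, like the registry.gitlab.com ones used later in this post, is printed unchanged.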

Then in the rest of the file we are gonna use the standard dnf commands to install the dependencies we want.

Lastly, we will need to build the image with the following command:

docker build -f fedora_latest.yml -t librsvg/fedora:latest .

Notice the dot at the end. That cost me half an hour to debug the first time 🙁 (I would suggest looking at buildah for building OCI images instead of docker).
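The trailing dot is the build context: the directory whose contents docker makes available to the build. A tiny wrapper that defaults the context to the current directory makes it harder to forget. This is only a sketch, and the name build_image is hypothetical.

```shell
# Hypothetical wrapper around `docker build`.
# $1 = Dockerfile, $2 = image tag, $3 = build context (defaults to ".")
build_image() {
    docker build -f "$1" -t "$2" "${3:-.}"
}

# Example (needs a running docker daemon):
# build_image fedora_latest.yml librsvg/fedora:latest
```

Called with two arguments it runs the exact command shown above, dot included.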

After that is complete docker images should have an entry like the following:

    REPOSITORY       TAG      IMAGE ID       CREATED         SIZE
    librsvg/fedora   latest   3d92c1b0ea5a   6 minutes ago   992 MB

It’s now possible to emulate the environment of the Gitlab CI with the following command:

docker run -ti librsvg/fedora:latest bash

This will give us a bash shell inside the image we just built, from where we can test cloning the git repo and running the test suite. I plan on writing a separate post on how to use the containers we are going to build for compiling and testing librsvg in a bit more depth.

Pushing our image to a registry

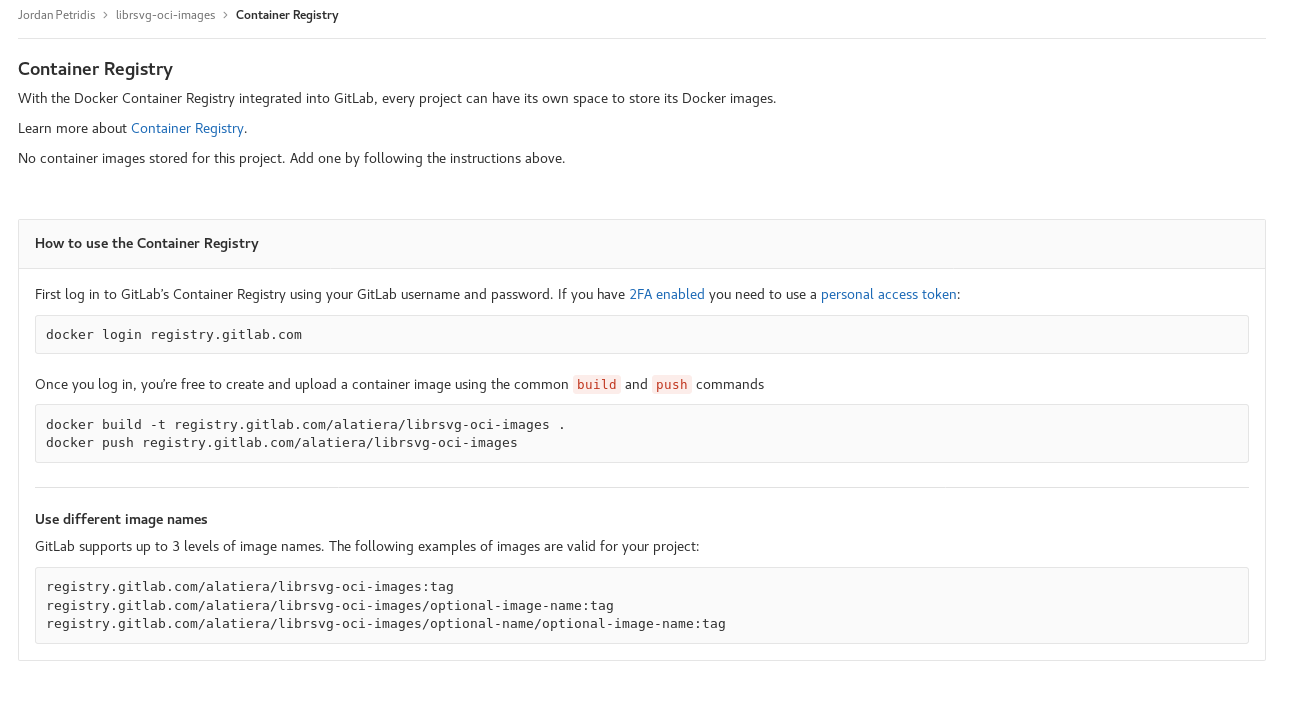

We built our image; now we need some way for the Gitlab CI to fetch it and use it. One way is to push it to an online registry like Docker Hub. Ideally though, we want to avoid depending on Docker Hub or any other (possibly proprietary) registry that we have no control of. Turns out gitlab can have an integrated container registry FOR EACH PROJECT.

As of the time of writing this post, the GNOME gitlab migration is still ongoing and the container registry is not yet enabled. I plan on migrating the librsvg-oci-registry from gitlab.com as soon as the feature becomes available. For now, and for the rest of the post, I will make use of the gitlab.com infrastructure, but migrating or replicating the setup in the gitlab deployment of your choice (e.g. gitlab.gnome.org or salsa.debian.org) should be identical apart from changing the base urls.

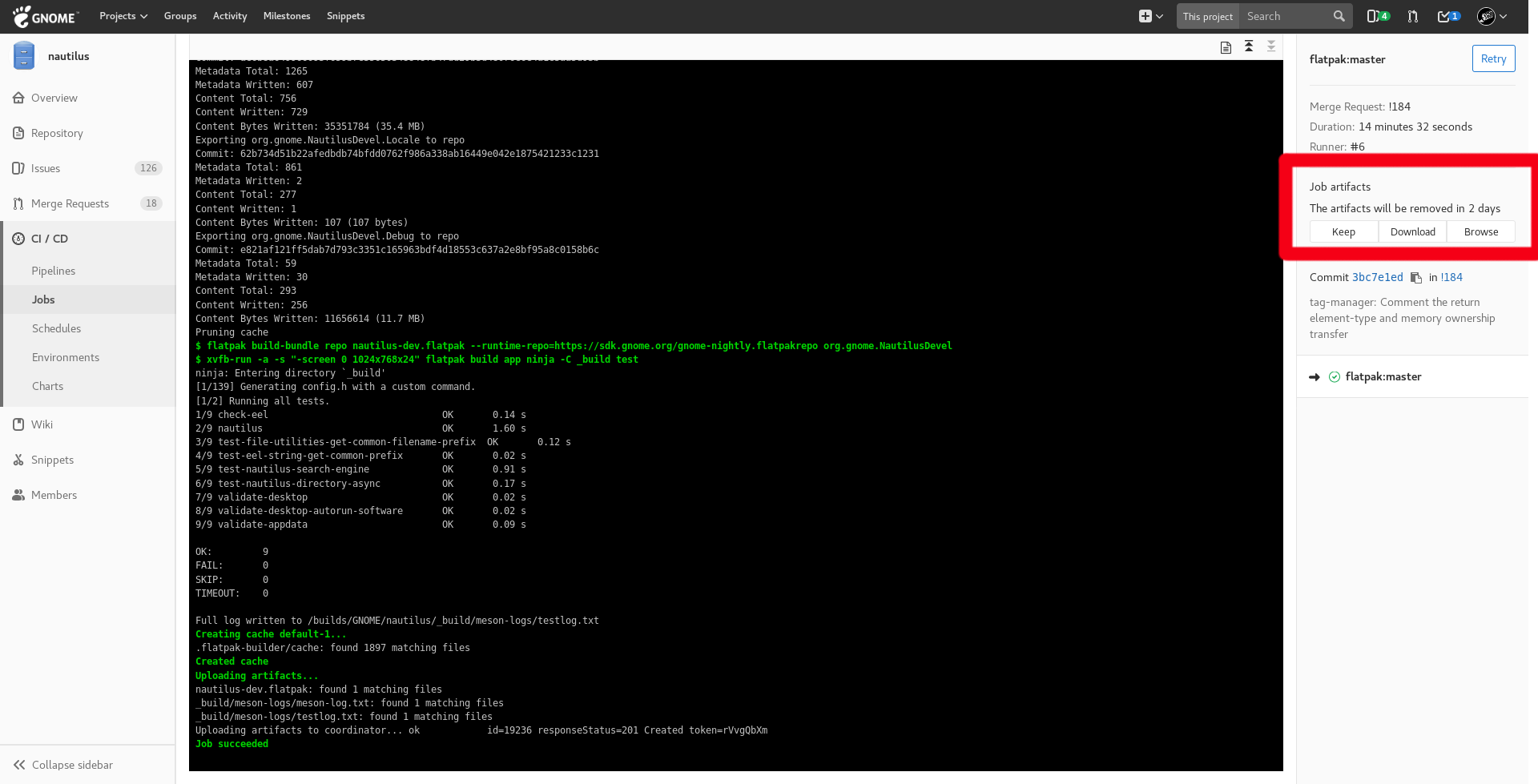

The only prerequisite for using the gitlab registry (apart from it being enabled) is to have a gitlab project (a repository in Github terms). I’ve created a new project under my gitlab.com account called librsvg-oci-images. When navigating to the Registry section (in the left bar in the gitlab 10.x series), the following instructions are shown.

docker login registry.gitlab.com

Once you log in, you’re free to create and upload a container image using the common build and push commands

docker build -t registry.gitlab.com/alatiera/librsvg-oci-images .

docker push registry.gitlab.com/alatiera/librsvg-oci-images

Assuming you rebuilt the image with a tag that matches the registry’s namespace, that’s all it takes to push an image to it.
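If the image already exists locally under another name, a rebuild is not strictly needed: docker tag gives the same image a second name that matches the registry. A minimal sketch, where the helper names registry_ref and push_local_image are made up and the target path is the one used in this post:

```shell
# Hypothetical helper: compose a registry-qualified image name.
# $1 = registry host, $2 = namespace/project, $3 = image name, $4 = tag
registry_ref() {
    echo "$1/$2/$3:$4"
}

# Hypothetical helper: re-tag a local image and push it.
# $1 = local image, $2 = target reference
push_local_image() {
    docker tag "$1" "$2"   # second name for the same image, no rebuild
    docker push "$2"
}

target=$(registry_ref registry.gitlab.com alatiera/librsvg-oci-images fedora latest)
echo "$target"

# To actually push (requires a prior `docker login registry.gitlab.com`):
# push_local_image librsvg/fedora:latest "$target"
```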

Automating Image builds

Building an image and pushing it manually can get tiresome and take a long time (especially on slow internet connections). What if we could use Gitlab-CI to build and push the images automatically on a regular basis?

It’s certainly possible; in fact there’s even a whole section about it in the gitlab docs. I’ve also found useful this post from .

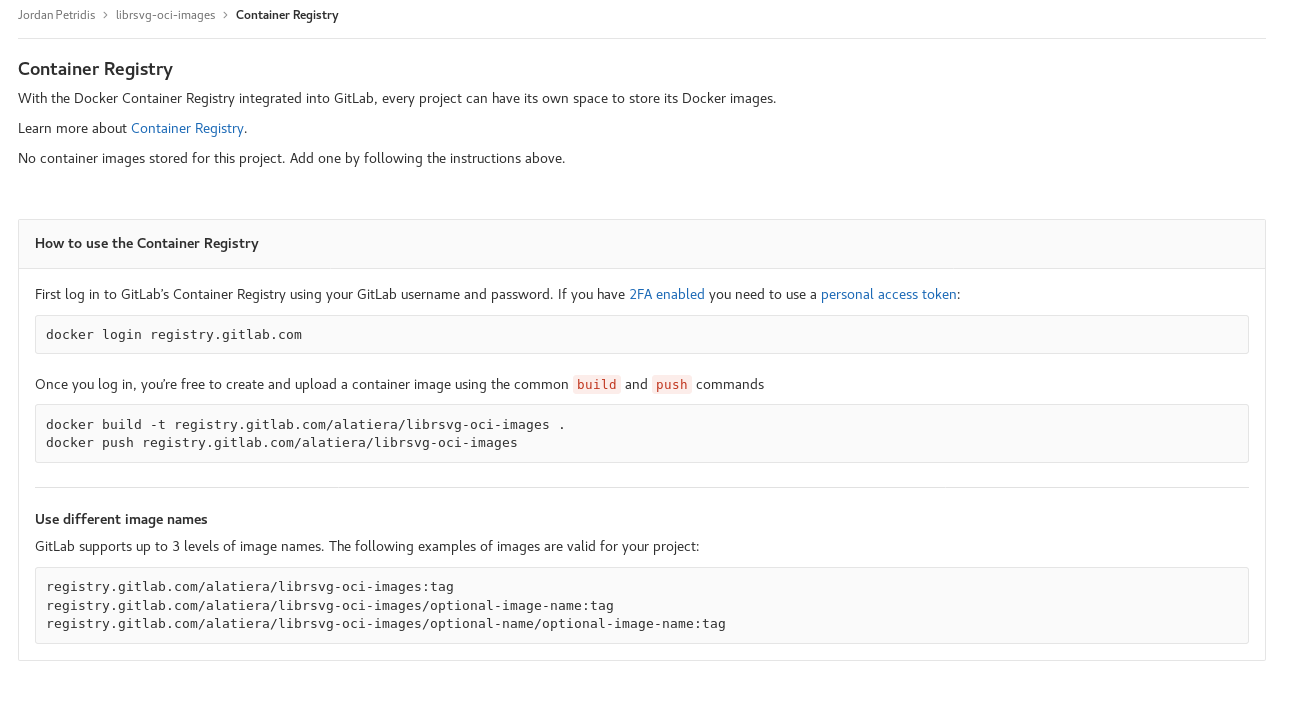

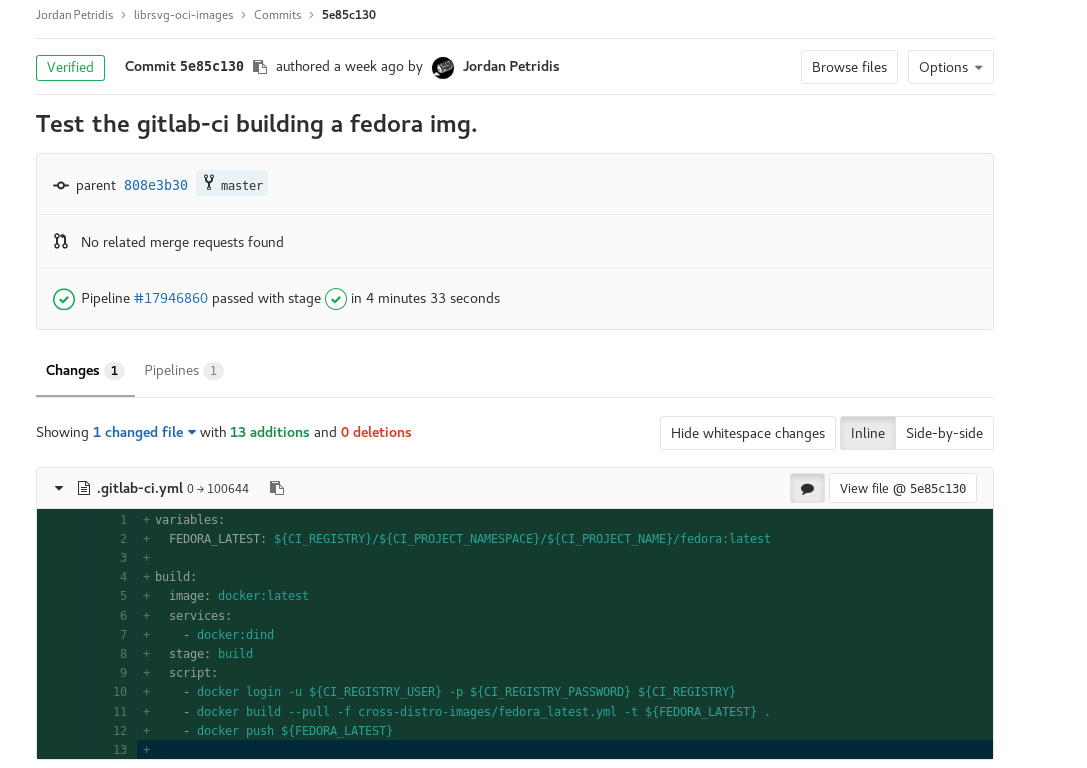

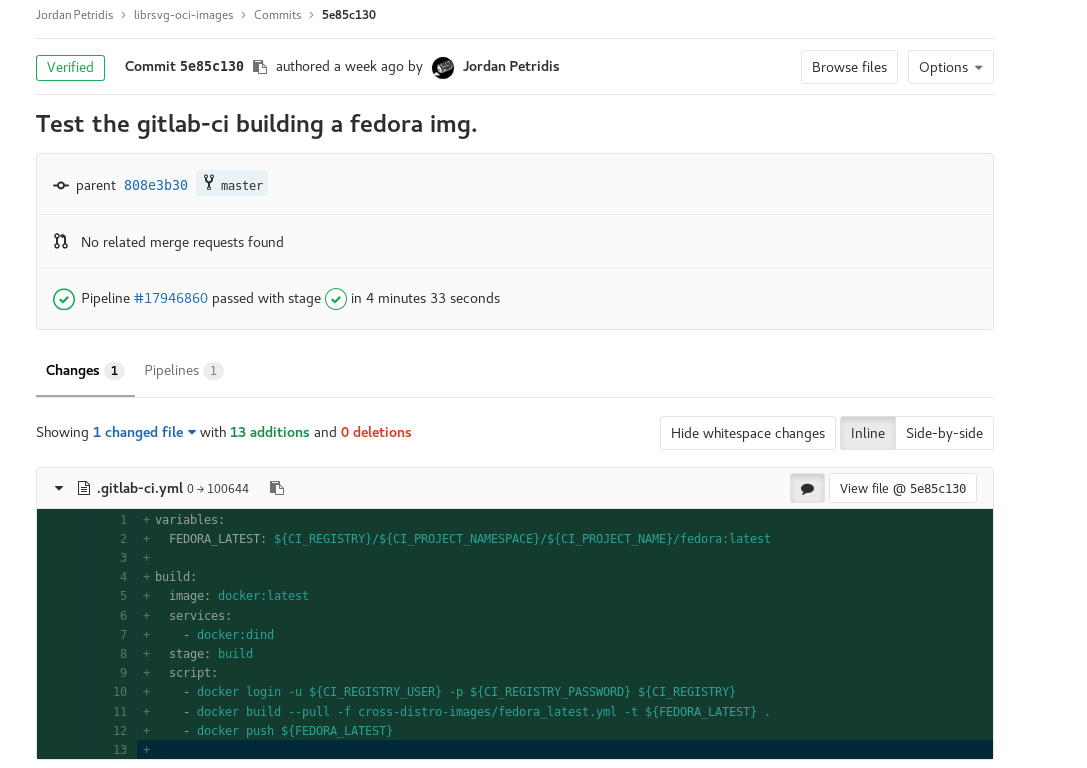

First we will commit the fedora_latest.yml file we created earlier to the repo. Then we will need to add a .gitlab-ci.yml file to the librsvg-oci-images repository. For now we will just put the following in it to check that it’s working.

    variables:
      FEDORA_LATEST: ${CI_REGISTRY}/${CI_PROJECT_NAMESPACE}/${CI_PROJECT_NAME}/fedora:latest

    build:
      image: docker:latest
      services:
        - docker:dind
      stage: build
      script:
        - docker login -u ${CI_REGISTRY_USER} -p ${CI_REGISTRY_PASSWORD} ${CI_REGISTRY}
        - docker build --pull -f cross-distro-images/fedora_latest.yml -t ${FEDORA_LATEST} .
        - docker push ${FEDORA_LATEST}

Gitlab-CI sets up some ENV variables on every run, which we can use to log in and push to the registry. It’s almost a carbon copy of the examples in the gitlab docs. For now, though, we’ve hardcoded the path to the fedora Dockerfile (currently under the cross-distro-images folder in the repo). If this works, the resulting image will be under registry.gitlab.com/alatiera/librsvg-oci-images/fedora:latest.
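To see how the image name is put together, the expansion can be dry-run in a plain shell. The three values below are assumptions filled in by hand to match this project; in a real job Gitlab-CI provides them. The registry_login function is an optional variant of the login step, not what the pipeline above uses.

```shell
# Assumed values for a dry run; Gitlab-CI sets these itself inside a job.
CI_REGISTRY="registry.gitlab.com"
CI_PROJECT_NAMESPACE="alatiera"
CI_PROJECT_NAME="librsvg-oci-images"

FEDORA_LATEST="${CI_REGISTRY}/${CI_PROJECT_NAMESPACE}/${CI_PROJECT_NAME}/fedora:latest"
echo "$FEDORA_LATEST"

# Optional variant: pass the password on stdin instead of with -p,
# so it does not appear in the process list. Not called here.
registry_login() {
    printf '%s' "$CI_REGISTRY_PASSWORD" |
        docker login -u "$CI_REGISTRY_USER" --password-stdin "$CI_REGISTRY"
}
```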

Indeed the pipeline was successful and we can pull our new image with the following command:

docker pull registry.gitlab.com/alatiera/librsvg-oci-images/fedora:latest

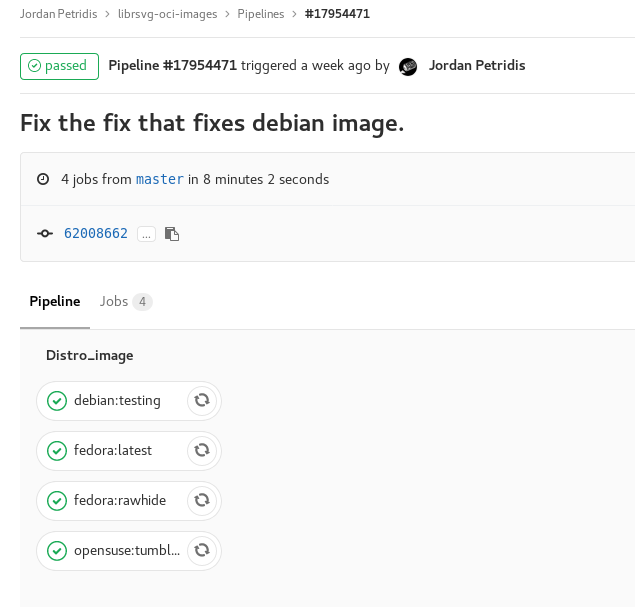

Alright, now that this works let’s add the rest of the images we want to build and refactor the .gitlab-ci.yml file a bit.

First we will add some more dockerfiles in the cross-distro-images folder in the repository root.

Then I will fast-forward a bunch of failed attempts to get this working. Eventually our .gitlab-ci.yml will look something like this:

    stages:
      - distro_image

    # Expects $DISTRO_NAME variable which should be the name of the distro image, ex. ubuntu
    # Expects $DISTRO_VER variable which should be the version of the distro image, ex. 18.04
    # Expects $OCI_YML variable which should be the path to the dockerfile
    .distro_template: &distro_build
      # Same docker-in-docker setup as the single-job example above
      image: docker:latest
      services:
        - docker:dind
      stage: distro_image
      before_script:
        - export IMAGE=${CI_REGISTRY}/${CI_PROJECT_NAMESPACE}/${CI_PROJECT_NAME}/${DISTRO_NAME}:${DISTRO_VER}
      script:
        - docker login -u ${CI_REGISTRY_USER} -p ${CI_REGISTRY_PASSWORD} ${CI_REGISTRY}
        - docker build --pull -f ${OCI_YML} -t ${IMAGE} .
        - docker push ${IMAGE}
      allow_failure: true

    fedora:latest:
      variables:
        DISTRO_NAME: "fedora"
        DISTRO_VER: "latest"
        OCI_YML: "cross-distro-images/fedora_latest.yml"
      <<: *distro_build

    fedora:rawhide:
      variables:
        DISTRO_NAME: "fedora"
        DISTRO_VER: "rawhide"
        OCI_YML: "cross-distro-images/fedora_rawhide.yml"
      <<: *distro_build

    debian:testing:
      variables:
        DISTRO_NAME: "debian"
        DISTRO_VER: "testing"
        OCI_YML: "cross-distro-images/debian_testing.yml"
      <<: *distro_build

    opensuse:tumbleweed:
      variables:
        DISTRO_NAME: "opensuse"
        DISTRO_VER: "tumbleweed"
        OCI_YML: "cross-distro-images/opensuse_tumbleweed.yml"
      <<: *distro_build

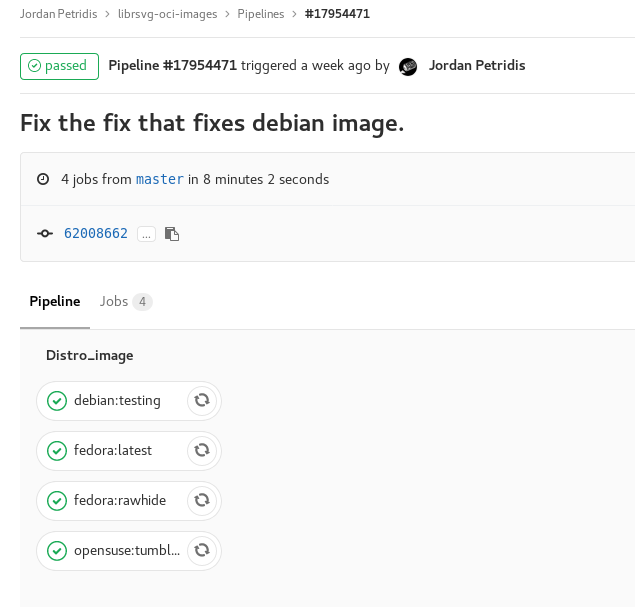

Basically what happened is that we made a generic template and used Environment Variables to pass it the arguments we want in each case. Here is the result after we push the file and the pipeline is run.
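The same idea can be tried locally before touching the CI. The sketch below is not part of the pipeline: it assumes the Dockerfiles follow the distro_version.yml naming used in this repo, and the function names image_tag_for and build_all are made up.

```shell
# Hypothetical helper: turn "cross-distro-images/fedora_latest.yml"
# into "fedora:latest". Runs in a subshell to keep variables local.
image_tag_for() (
    base=$(basename "$1" .yml)   # fedora_latest
    name=${base%%_*}             # fedora
    ver=${base#*_}               # latest
    echo "${name}:${ver}"
)

# Hypothetical helper: build every Dockerfile in the folder.
# $1 = image prefix, e.g. registry.gitlab.com/alatiera/librsvg-oci-images
build_all() {
    for file in cross-distro-images/*.yml; do
        # Keep going when one distro fails, like allow_failure: true does.
        docker build --pull -f "$file" -t "$1/$(image_tag_for "$file")" . ||
            echo "build failed: $file" >&2
    done
}

image_tag_for cross-distro-images/opensuse_tumbleweed.yml   # opensuse:tumbleweed
```

Deriving the name and version from the filename trades the explicit per-job variables for a naming convention, which is handy in a local loop but less visible than the template in the pipeline UI.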

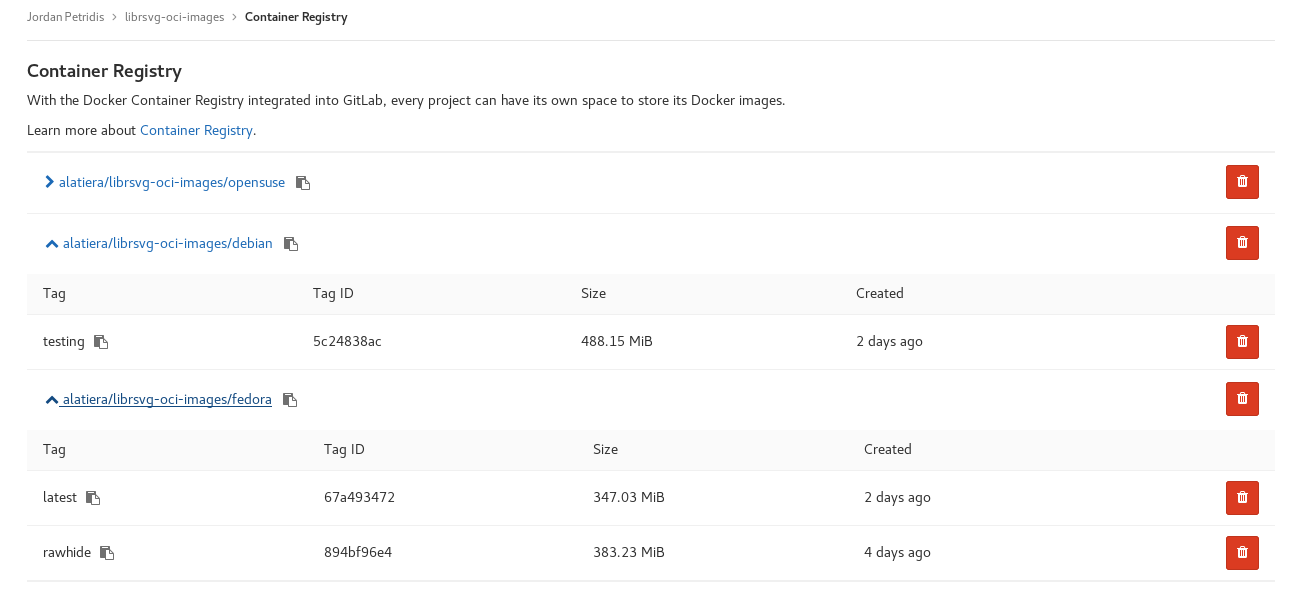

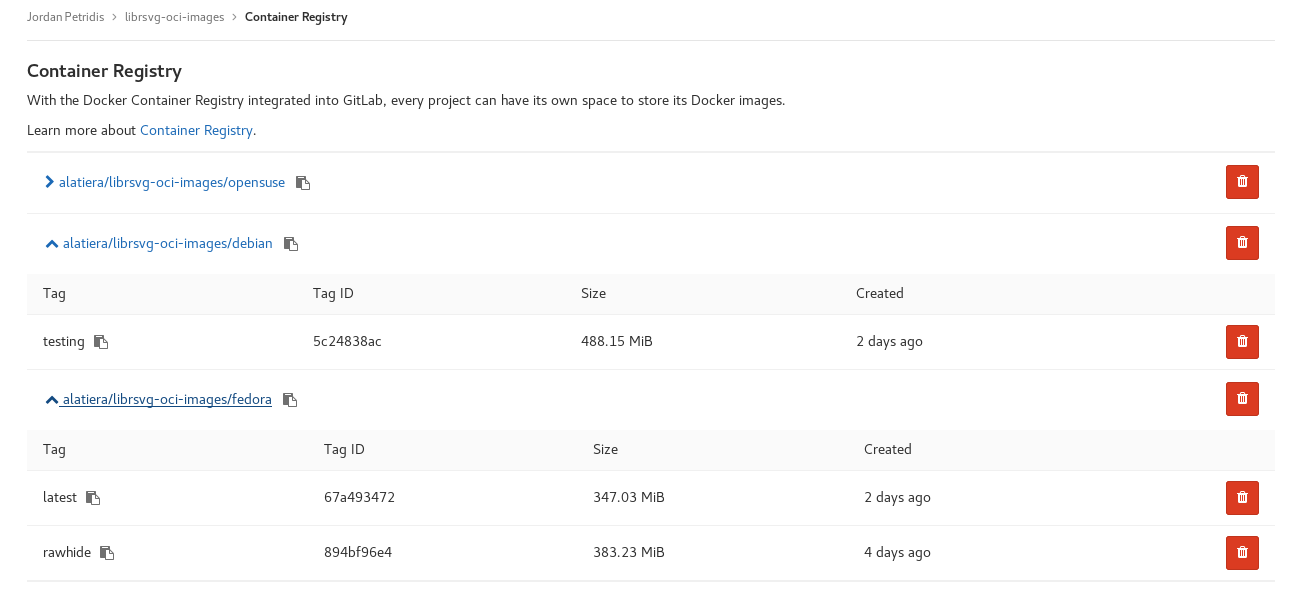

And here is what the registry looks like:

Now the only thing that’s left is to switch the librsvg pipeline to use the custom images instead. To do so we simply have to change the image: key and delete the before_script: that used to install the dependencies, since they are now included in the image.

So from this:

    fedora:latest:
      image: fedora:latest
      before_script:
        - dnf install -y gcc rust ... gtk3-devel
      <<: *distro_test

It will become like this:

    fedora:latest:
      image: registry.gitlab.com/alatiera/librsvg-oci-images/fedora:latest
      <<: *distro_test

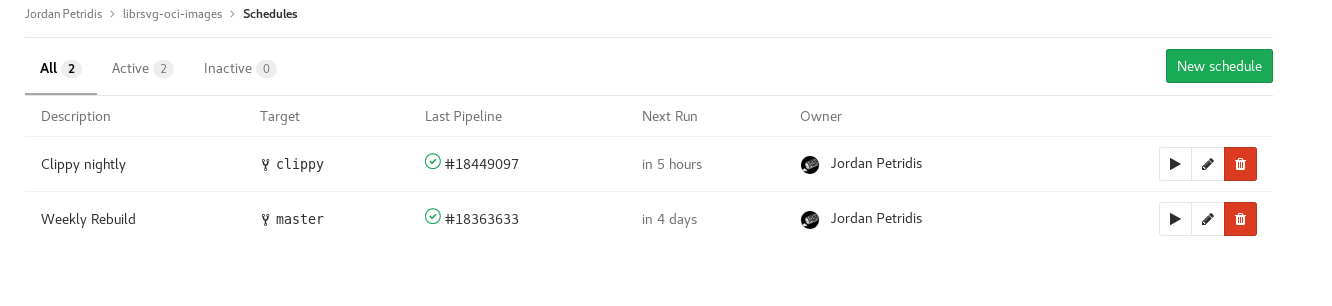

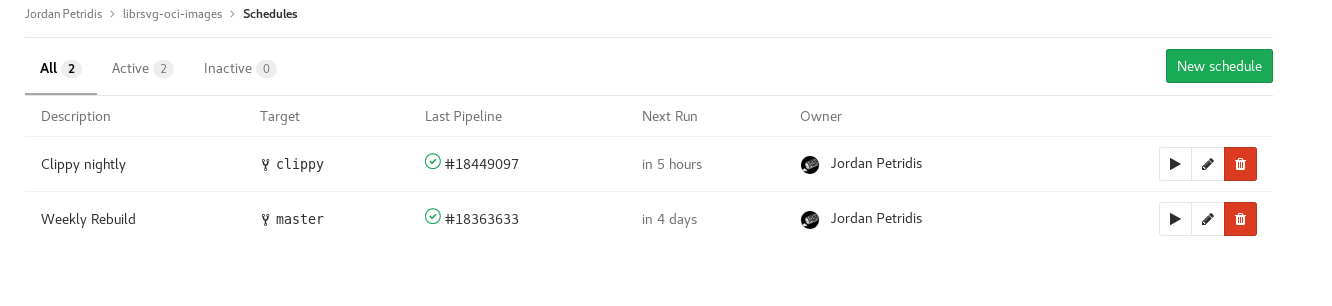

Automatically rebuilding and updating the Images

On each commit now, the Gitlab-CI will build and push new images to the registry. But Gitlab-CI also has a nice feature where you can schedule Pipelines to run at regular time intervals. So we can have, for example, weekly/monthly rebuilds of updated images without having to push new commits or trigger manual rebuilds. When a new image is pushed, the downstream CI in librsvg that uses the image should automatically pick it up. So that means as long as the image builds don’t fail we won’t ever need to touch the repo again.

Setting up a scheduled Pipeline is straightforward for the most part. (They thought cron-like syntax would make a good UX…). I won’t go into detail since there seems to be good documentation on how to do it in the Gitlab docs.

Conclusion and Results

Using custom images and avoiding downloading and installing package dependencies in each run brought down the opensuse Job from ~20 min to ~5 minutes, and the fedora/debian jobs from ~10 minutes to ~4 minutes.

Which means we can run all the cross distro jobs now in less time than it initially took us to just test an opensuse build and still have some spare time!
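As a quick sanity check of that claim, here is the arithmetic on the rough timings quoted above. It assumes the librsvg pipeline runs the same four distro jobs we built images for, and takes the pessimistic case where they run one after another.

```shell
# Rough timings from this post, in minutes.
opensuse_before=20; opensuse_after=5
other_before=10;    other_after=4

echo "opensuse saved: $(( (opensuse_before - opensuse_after) * 100 / opensuse_before ))%"   # 75%
echo "fedora/debian saved: $(( (other_before - other_after) * 100 / other_before ))%"      # 60%

# Assumption: one opensuse job plus three fedora/debian jobs, run back to back.
total_after=$(( opensuse_after + 3 * other_after ))
echo "all four jobs sequentially: ${total_after} min"   # 17 min
```

Even back to back, about 17 minutes is less than the ~20 minutes the opensuse job alone used to take.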

Though we still download the whole cargo registry and all the rust dependencies each time. We also build librsvg from scratch in each pipeline run. In the next part we will see how to cache C and Rust artifacts across builds and essentially do incremental builds.

Here is a link to the librsvg container registry, currently hosted at gitlab.com, if you want to take a closer look at the repo. Some stuff may be slightly different.