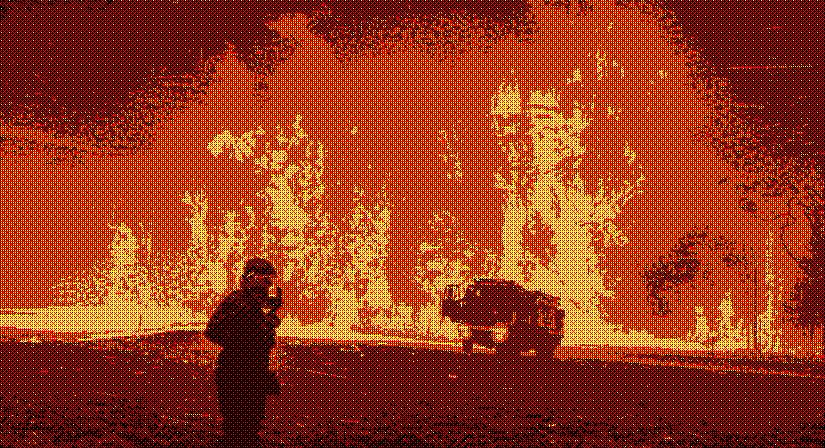

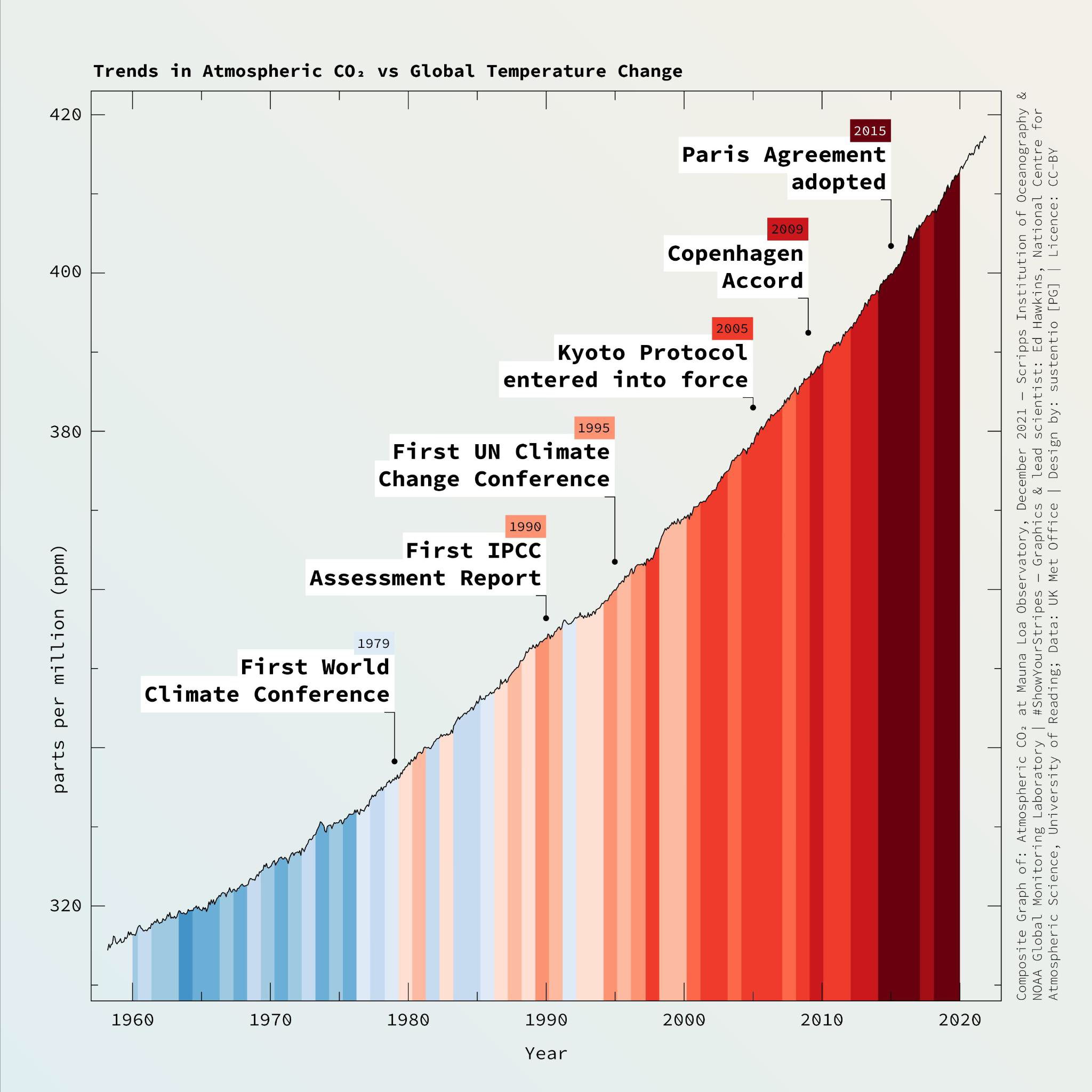

This is a lightly edited version of my GUADEC 2022 talk, given at c-base in Berlin on July 21, 2022. Part 1 briefly summarizes the horrors we’re likely to face as a result of the climate crisis, and why civil resistance is our best bet to still avoid some of the worst-case scenarios. Trigger Warning: Very depressing facts about climate and societal collapse.

While I think it’s critical to use the next few years to try and avert the worst effects of this crisis, I believe we also need to think ahead and consider potential failure scenarios.

What would it mean if we fail to force our governments to enact the necessary drastic climate action, both for society at large and, very concretely, for us as free software developers? In other words: What does collapse mean for GNOME?

In researching the subject I discovered that there’s actually a discipline studying questions like this, called “Collapsology”.

Collapsology studies the ways in which our current global industrial civilization is fragile and how it could collapse. It looks at these systemic risks in a transdisciplinary way, including ecology, economics, politics, sociology, etc. because all of these aspects of our society are interconnected in complex ways. I’m far from an expert on this topic, so I’m leaning heavily on the literature here, primarily Pablo Servigne and Raphaël Stevens’ book “How Everything Can Collapse” (translated from the French original).

So what does climate collapse actually look like? What resources, infrastructure, and organizations are most likely to become inaccessible, degrade, or collapse? In a nutshell: Complex, centralized, interdependent systems.

There are systems like that in every part of our lives of course, from agriculture, to pharma, to energy production, and of course electronics. Because this talk’s focus is specifically the impact on free software, I’ll dig deeper on a few areas that affect computing most directly: Supply chains, the power grid, the internet, and Big Tech.

Supply Chains

As we’ve seen repeatedly over the past few years, the supply chains that produce and transport goods across the globe are incredibly fragile. During the first COVID lockdowns it was toilet paper, then we got the chip shortage affecting everything from PlayStations to cars, and more recently a baby formula shortage in the US, among others. To make matters worse, many industries have moved to just-in-time manufacturing over the past decades, making them even less resilient.

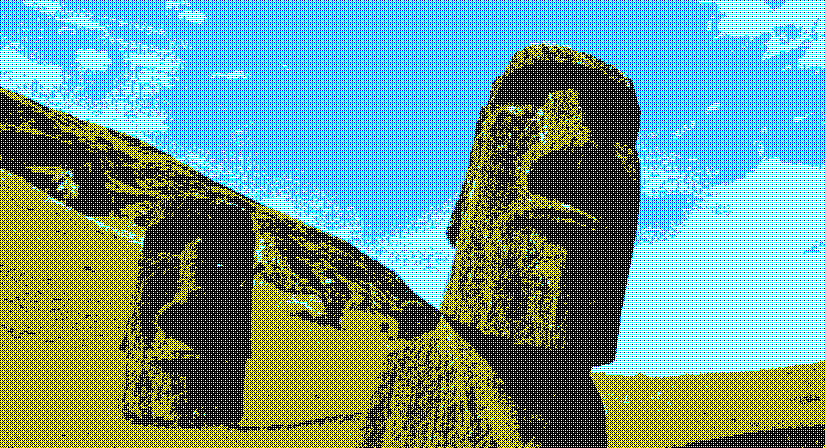

Now add to that more and more extreme natural disasters disrupting production and transport, wars and sanctions disrupting trade, and financial crises triggered or exacerbated by some of the above. It’s not hard to imagine goods that are highly dependent on global supply chains becoming prohibitively expensive or just impossible to get in parts of the world.

Computers are one of the most complex things manufactured today, and therefore especially vulnerable to supply chain disruption. Without a global system of resource extraction, manufacturing, and trade there’s no way we can produce chips anywhere near the current level of sophistication. On top of that, chip supply chains are incredibly centralized, with most of the world’s advanced chip production controlled by a single Taiwanese company, and the most advanced machines used for that production made by a single Dutch company.

Power Grid

Access to an unlimited amount of power, at any time, for very little money, is something we take for granted, but probably shouldn’t. In addition to disruptions by extreme weather events, one important factor here is that in an ever-hotter world, air conditioning puts an increasing amount of strain on the power grid. In parts of the global south this is one of the reasons why power outages are a daily occurrence, and having power all the time is far from guaranteed.

In order to do computing we of course need power, not only to run/charge our own devices, but also for the data centers and networking infrastructure running a lot of the things we’re connecting to while using those devices.

Which brings us to our next point…

Internet

Having a reliable internet connection requires a huge amount of interconnected infrastructure, from undersea cables, to data centers, to the local cable infrastructure that goes to your neighborhood, and ultimately your router or a nearby cellular tower.

All of this infrastructure is at risk of being disrupted by sea level rise and extreme weather, taken over by political actors wanting to control the flow of information, abandoned by companies when it becomes unprofitable to operate in a certain area due to frequent extreme weather, and so on.

Big Tech

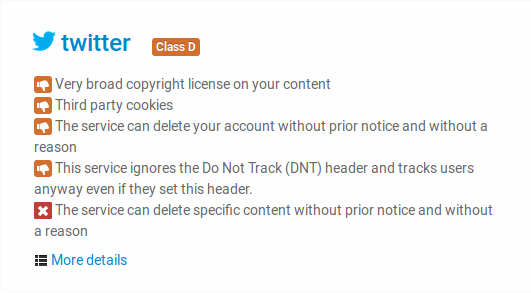

Finally, at the top of the stack there are the actual applications and services we use. These, too, have become ever more centralized and fragile at all levels over the past decades.

At the most basic level there are OS updates and app stores. There are billions of iOS devices out there that are literally unable to get security updates or install new software if they lose access to Apple’s servers. Apple collapsing seems unlikely in the short term, but, for example, what if they stop doing business in your country because of sanctions?

We used to warn about lock-in to proprietary software and formats, but at least Photoshop CS2 continues to run on your computer regardless of what happens to the company. With Figma et al., if the server isn’t accessible you not only lose access to your existing files, you can’t even create new ones.

In order to get a few nice sharing and collaboration features, people are increasingly just running all software in the cloud on someone else’s computer, whether it’s Google Slides for presentations, SketchUp for 3D modeling, Notion for note taking, Figma for design, or even games via game streaming services like Stadia.

From a free software perspective another particularly risky point of corporate centralization is GitHub, given that a huge number of important projects are hosted there. Even if you’re not actively using it yourself for development, you’re almost certainly depending on other projects hosted on GitHub. If something were to happen to it… yikes.

Failure Scenarios

So to summarize, this is a rough outline of a potential failure scenario, as applied to computing:

- No new hardware: It’s difficult and expensive to get new devices because few or no new ones are being made, or they’re not being sold where you live.

- Limited power: There’s power some of the time, but only a few hours a day or when there’s enough sun for your solar panels. It’s likely that you’ll want to use it for more important things than powering computers though…

- Limited connectivity: There’s still a kind of global internet, but not all countries have access to it due to both degraded infrastructure and geopolitical reasons. You’re able to access a slow connection a few times a month, when you’re in another town nearby.

- No cloud: Apple and Google still exist, but because you don’t have internet access often enough or at sufficient speeds, you can’t install new apps on your iOS/Android devices. The apps you do have on them are largely useless since they assume you always have internet.

This may sound like an unrealistically dystopian scenario, until you realize: Parts of the global south are experiencing this today. Of course a collapse of these systems at the global level would have a lot of other terrible consequences, but I think seeing the global south as a kind of preview of where everyone else is headed is a helpful reference point.

A Smaller World

The future is of course impossible to predict, but in all likelihood we’re headed for a world where everything is a lot more local, one way or the other. Whether by choice (to reduce emissions and be more resilient), or through a full-on collapse, our way of life is going to change drastically over the next decades.

The future we’re looking at is likely to be a lot more disconnected in terms of the movement of goods, people, and information. This will necessitate producing things locally, with whatever resources are available locally. Given the complexity of most supply chains, this means many things we build today probably won’t be produced at all anymore, so there will need to be a lot more repair, and a lot less consumption.

Above all though, this will necessitate much stronger communities at the local level, working together to keep things running and make life liveable in the face of the catastrophes to come.

To be Clear: Fuck Nazis

When discussing apocalyptic scenarios like these I think a lot of people’s first point of reference is the Hollywood version of collapse – People out for themselves, fighting for survival as rugged individuals. There are certain types of people attracted by that who hold other reprehensible views, so when discussing topics like preparing for collapse it’s important to distance oneself from them.

That said, individual prepping is also not an effective strategy, because real life is not a Hollywood movie. In crisis scenarios mutual aid is just as natural a response for people as selfishness, and it’s a much better approach to actually survive longer-term. Resilient communities of people helping each other are our best bet to withstand whatever worst case scenarios might be headed our way.

We’ll Still Need Computers…

If this future comes to pass, how to do computing will be far from our biggest concern. Having enough food, drinkable water, and other necessities of life is likely to be higher on our priority list. However, there will definitely be important things that we will need computers for.

The thing to keep in mind is that we’re not talking about the far future here: The buildings, roads, factories, fields, etc. we’ll be working with in this future are basically what we have today. The factories where we’re currently building BMWs are not going away overnight, even if no BMWs are being built. Neither are the billions of Intel laptops and mid-range Android phones currently in use, even if they’ll be slow and won’t get updates anymore.

So what might we need computers for in this hyper-local, resource-constrained future?

Information Management

At the most basic level, a lot of our information is stored primarily on computers today, and using computers is likely to remain the most efficient way to access it. This includes everything from teaching materials for schools, to tutorials for DIY repairs, books, scientific papers, and datasheets for electronics and other machines.

The same goes for any kind of calculation or data processing. Computers are of course good at the calculations needed for construction/engineering (that’s kind of what they were invented for), but even things like spreadsheets, basic scripting, or accounting software are orders of magnitude more efficient than doing the same things without a computer.

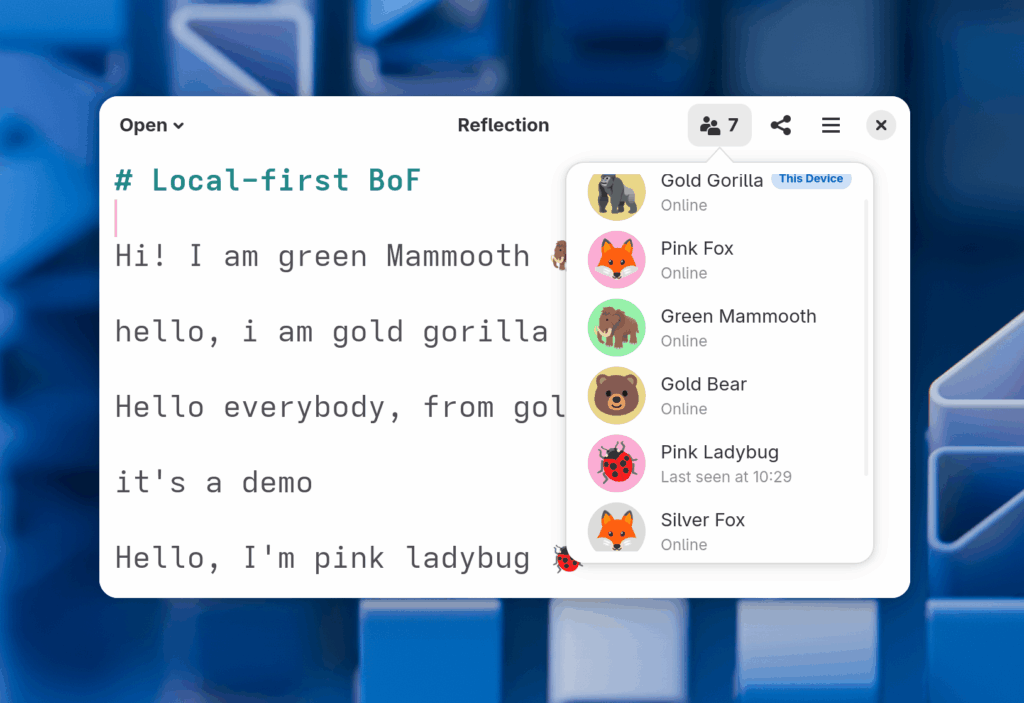

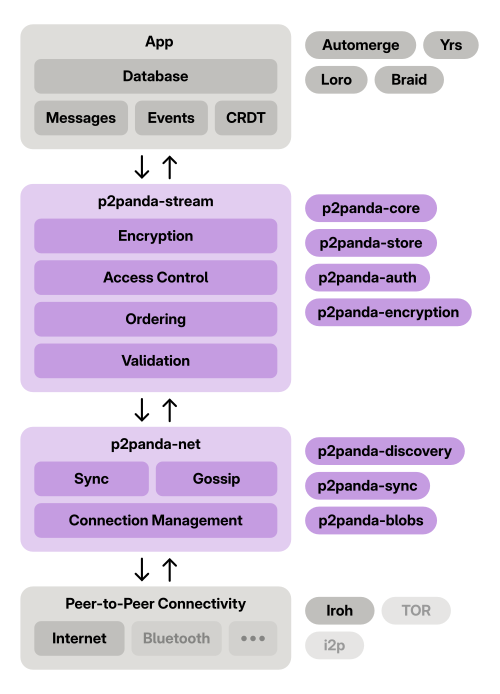

Local Networking

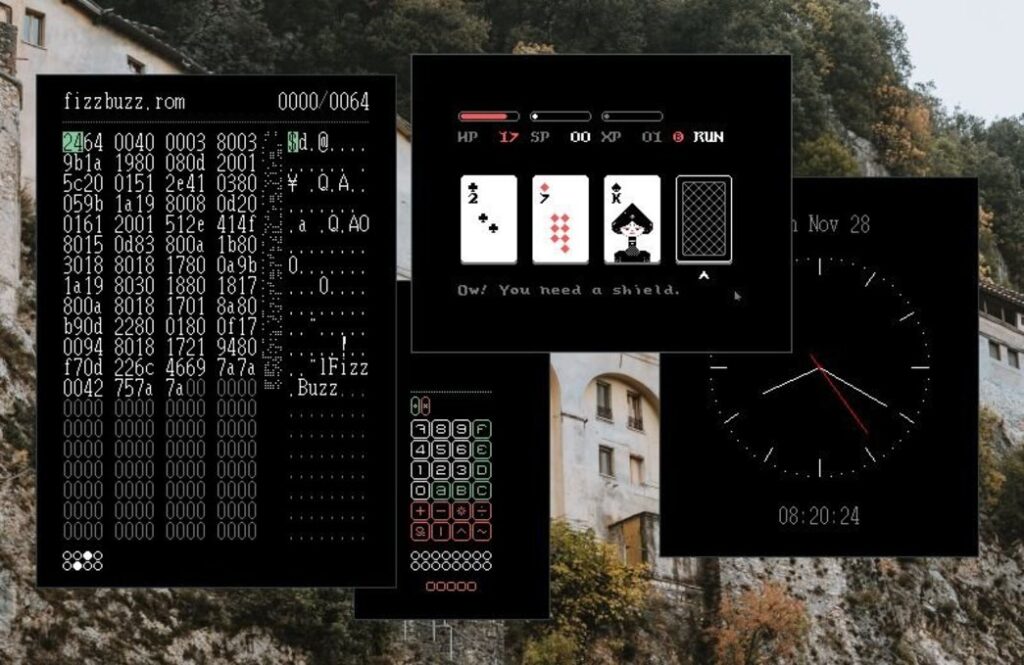

We’re used to networking always meaning “access to the entire internet”, but that’s not the only way to do networks – Our existing computers are perfectly capable of talking to each other on a local network at the level of a building or town, with no connection to a global internet.

There are lots of examples of potential use cases for local-only networking and communication, e.g. city-level mesh networks, or low-connectivity chat apps like Briar.

Reuse, Repair, Repurpose

Finally, there’s a ton of existing infrastructure and machinery that needs computers in order to be able to run, be repaired, or repurposed, including farm equipment, medical devices, public transit, and industrial tools.

I’m assuming – but this is conjecture on my part, it’s really not my area of expertise – the machines we’re currently using to build cars and planes could be repurposed to make something more useful, which can actually still be constructed with locally available resources in this future.

…Running Free Software?

As we’ve already touched on earlier, the centralized nature of proprietary software means it’s inherently less resilient than free software. If the company building it goes away or doesn’t sell you the software anymore, there’s not much you can do.

Given all the risks discussed earlier, it’s possible that free software will therefore have a larger role in a more localized future, because it can be adapted and repaired at the local level in ways that are impossible with proprietary software.

Assumptions to Reconsider?

However, while free software has structural advantages that make it more resilient than proprietary software, there are problematic aspects of current mainstream technology culture that affect us, too. Examples of assumptions that are pretty deeply ingrained in how most modern software (including free software) is built include:

- Fast internet is always available, offline/low-connectivity is a rare edge case, mostly relevant for travel

- New, better hardware is always around the corner and will replace the current hardware within a few years

- Using all the resources available (CPU, storage, power, bandwidth) is fine

Assumptions like these manifest in many subtle ways in how we work and what we build.
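To make the first assumption concrete, here is a minimal sketch of the opposite default: data is always served from a local copy, and the network is treated as an occasional bonus. Everything in it is hypothetical and for illustration only, including the `OfflineFirstStore` name, the `fetch` callback standing in for a network request, and the assumption that keys are simple file-safe names.

```python
import json
import time
from pathlib import Path
from typing import Callable, Optional


class OfflineFirstStore:
    """Serve data from a local cache and treat the network as optional."""

    def __init__(self, cache_dir: Path, fetch: Callable[[str], dict]):
        self.cache_dir = cache_dir
        self.cache_dir.mkdir(parents=True, exist_ok=True)
        # Hypothetical network call; assumed to raise OSError when offline.
        self.fetch = fetch

    def _path(self, key: str) -> Path:
        # Assumes keys are simple, file-safe names.
        return self.cache_dir / f"{key}.json"

    def get(self, key: str) -> Optional[dict]:
        """Return the cached copy if there is one; never touches the network."""
        path = self._path(key)
        if path.exists():
            return json.loads(path.read_text())["data"]
        return None

    def sync(self, key: str) -> bool:
        """Try to refresh one entry; report failure instead of raising."""
        try:
            data = self.fetch(key)
        except OSError:
            return False  # Offline: keep whatever is already cached.
        record = {"fetched_at": time.time(), "data": data}
        self._path(key).write_text(json.dumps(record))
        return True
```

The design choice is that `get` never waits on a connection, so the app stays usable with whatever was last synced, and `sync` can be called opportunistically on the rare occasions a connection is available.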

Dependencies and Package Managers

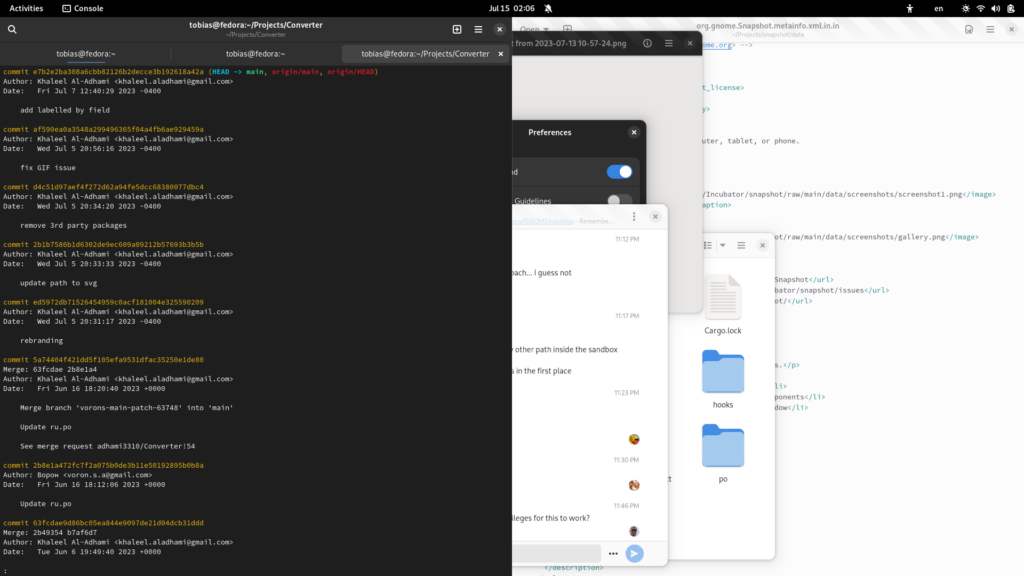

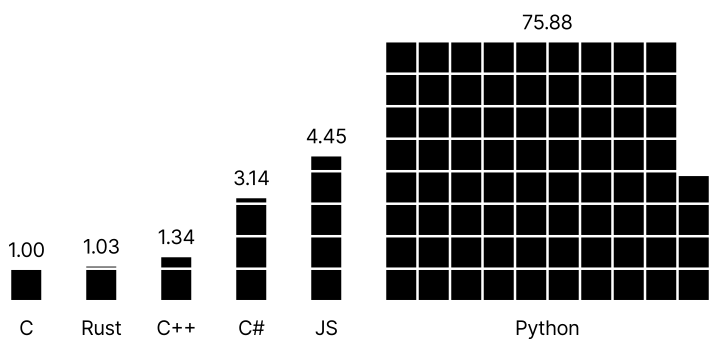

Over the past decade language-specific package managers and registries such as npm and crates.io have taken off in an unprecedented way, leading to software with larger and more complex dependency graphs than ever before. This is the dominant paradigm for building software today, and newer languages all come with their own built-in package manager.

However, just like physical supply chains, more complex dependency graphs are also less resilient. More dependencies, especially with pinned versions and a lack of caching between projects, mean huge downloads and long build times when building software locally, resulting in lots of bandwidth, power, and disk space being used. Fully offline development is basically impossible, because every project you build needs to download its own specific version of every dependency.

It’s possible to imagine some kind of cross-project shared local dependency cache for this, but to my knowledge no language ecosystem is doing this by default at the moment.
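As a rough illustration of the shared cache imagined above, the sketch below keeps one on-disk store keyed by dependency name and version, so a second project asking for the same pinned version triggers no new download. It does not describe how any real package manager works: `SharedDependencyCache`, the `download` callback, and the `downloads` counter are all hypothetical names made up for this example.

```python
import hashlib
from pathlib import Path
from typing import Callable


class SharedDependencyCache:
    """One on-disk cache of dependency archives shared by every project."""

    def __init__(self, root: Path, download: Callable[[str, str], bytes]):
        self.root = root
        self.root.mkdir(parents=True, exist_ok=True)
        # Hypothetical registry fetch: (name, version) -> archive bytes.
        self.download = download
        self.downloads = 0  # Counts how often the network was actually used.

    def _path(self, name: str, version: str) -> Path:
        # Hash "name@version" so the file name is always file-system safe.
        digest = hashlib.sha256(f"{name}@{version}".encode()).hexdigest()
        return self.root / digest

    def get(self, name: str, version: str) -> bytes:
        """Return the archive, downloading only if no project fetched it before."""
        path = self._path(name, version)
        if path.exists():
            return path.read_bytes()  # Cache hit: works fully offline.
        data = self.download(name, version)
        self.downloads += 1
        path.write_bytes(data)
        return data
```

With a cache like this, two projects pinning the same version of a library would share a single download, and anything already in the cache could still be built while offline.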

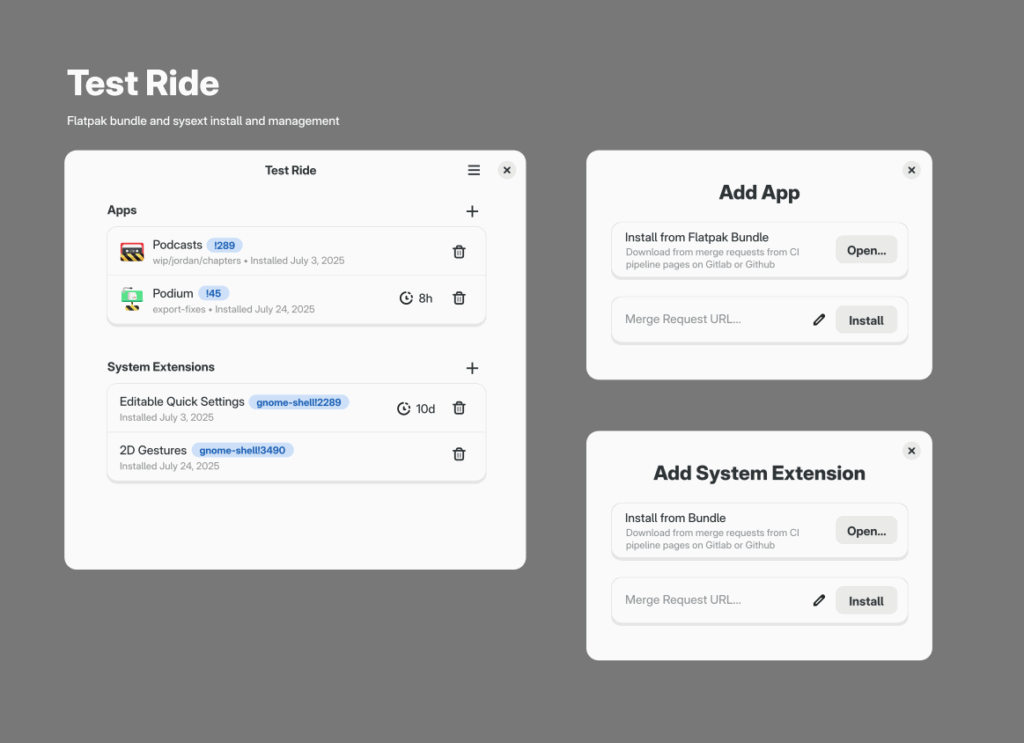

Core parts of the software development workflow are increasingly moving to web-based tools, especially around code forges like GitHub or GitLab. Issue management, merge requests, CI, releases, etc. all happen on these platforms, which are primarily or exclusively used via very, very slow websites. It’s hard to overstate this: Code forges are among the slowest, shittiest websites out there, basically unusable unless you have a fast connection.

This is, of course, not resilient at all and a huge problem given that we rely on these tools for many of our key workflows.

Cloud Storage & Streaming

As already discussed, relying on data centers is problematic on a number of levels, but in practice most people (even in the free software community) have embraced cloud services in some areas, at least at a personal level.

Instead of local photo, music, and movie collections many of us just use Google Photos, Spotify, and Netflix nowadays, which of course affects which kinds of apps are being built. For example, there are no modern, actively developed apps to manage your photo collection locally anymore, but we do have a nice, modern Spotify client…

Scariest of all, I think, is imagining free software development without the internet. This movement came into existence and grew alongside the global internet in the 80s and 90s, and it’s almost impossible to imagine what it could look like without it.

Maybe the movement as a whole, as well as individual projects would splinter into smaller, local versions in the regions that are still connected? But would there be a sufficient amount of expertise in each of those regions? Would development at any real scale just stop, and instead people would only do small repairs to what they have at the local level?

I don’t have any smart answers here, but I believe it’s something we really ought to think about.

This was part 2 of a four-part series. In part 3 we’ll look at concrete ideas and examples of things we can work towards to make our software more resilient.