It’s good to dive into our shared history every now and again to learn something new. We want to build a customized shortcut engine for Builder and that means we need to have a solid understanding of all the ways to activate shortcuts in GTK+. So the following is a list of what I’ve found, as of GTK+ 3.22. I’ve included some pros and cons of each based on my experience using them in particularly large applications.

GtkAccelGroup and GtkAccelMap

The GtkAccelGroup class is a remnant from the days before GtkUIManager was deprecated. It is a structure that is attached to a top-level GtkWindow and maps “paths” to closures. Its purpose was to be used with a GtkAccelMap to ultimately bind accelerators (such as “<control>q”) to a closure (such as gtk_main_quit).

The GtkAccelMap is a singleton that maps accelerators to paths. When activating a keybinding, the accelerator machinery will try to match the path with one registered in the GtkAccelGroup on the window.

Pros

- Widgets can be activated no matter what the focus widget. However, the window must be focused.

- It is simple to display keyboard accelerators next to menu items.

- Creating an accelerator editor is straightforward: look up the accel path, then map it to an accelerator.

Cons

- Not deprecated, but much of the related machinery is deprecated.

- Fairly complex API usage. It doesn’t seem to be designed to be used by application developers.

Signal Actions

Signal actions are GObject signals that have the G_SIGNAL_ACTION flag set. By setting this flag, we allow the GTK+ machinery to map an accelerator to a particular signal on the GtkWidget (or any ancestor widget in the hierarchy if it matches).

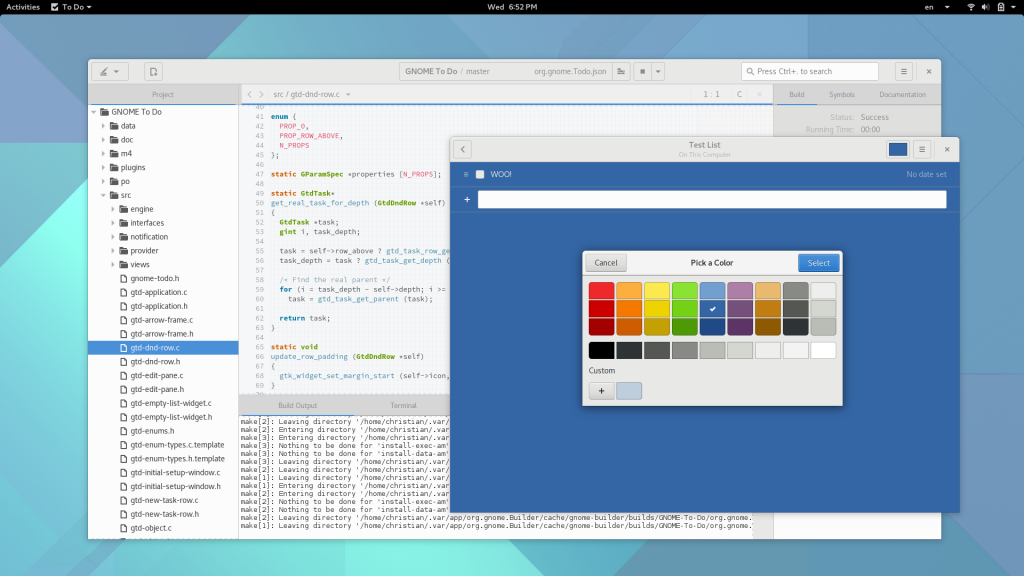

In the GTK+ 2.x days, we would control these keybindings using a gtkrc file. But today, we use GTK+ CSS to create binding sets and attach them to widgets using selectors. This is how the VIM implementation in Builder is done.

It should be noted that signal actions (and binding them from gtkrc/css) were meant as a way to provide key themes to GTK+, such as an emacs mode. They were not meant to be the generic way for applications to activate keybindings.

Pros

- You can use CSS selectors to apply binding sets (groups of accelerators) to widgets.

- Accelerators can include parameters to signals, meaning you can be quite flexible in what gets activated. With enough bong hits you’ll eventually land at vim.css.

Cons

- Only the highest-priority CSS selector can attach binding sets to the widget. So the highest-priority selector needs to know about all binding sets that are necessary. That reduces the effectiveness of this as a generic solution.

- This relies on the focus chain for activation. That means you can’t have a signal activate if another widget is focused, such as something in the header bar.

- It feels like a layer violation. I could make arguments on both sides of the fence for this, which is sort of indicative of the point.

- We have to “unbind” various default accelerators on widgets that collide with our key themes. This means that “key themes” are not only additive, but destructive as well.

GAction

Years back, we wanted the ability to activate actions inside of a program in a structured way. This allowed for external menu items as well as simplifying the process of single-instance applications. This was integrated into the GtkWidget hierarchy as well. Using gtk_application_set_accels_for_action() you can map an accelerator to a GAction. Using the current widget focus, the GTK+ machinery will work its way up the widget hierarchy until it finds a matching action. For example, if a widget has a “foo” GActionGroup associated with it, and that group contains an action named “bar”, then mapping “<control>b” to “foo.bar” would activate that action.

Pros

- Actions can have state, meaning that we can attach them to things like toggle buttons.

- Application level actions can be activated no matter what window is focused.

- It’s fairly easy to reason about what actions are discoverable and activatable from a node in the widget tree. Especially with GTK+ Inspector integration.

- Theoretically we could rebuild a11y on top of GAction and fix a lot of synchronous IPC to be async similar to how GMenu integrates with external processes. This would require a lot of external collaboration on various stacks though, so it isn’t an immediate “Pro”.

Cons

- Action activation requires the focus hierarchy to activate the action. This means to get something activatable from the entire window we need to attach the actions to the top-level. This can be a bit inconvenient, especially in multi-document scenarios which do not share widgetry.

- Actions are rather verbose to implement. Doing them cleanly means implementing proper API to perform the action, and then wrapping those using GActionEntry or similar. Additionally, to propagate state, the base API needs to know about the action so it can update action state. Coming up with a strategy for who is the “owner” of state is challenging for newcomers.

- The way to attach accelerators is via gtk_application_set_accels_for_action(), which is a bit weird for widgets that would want to provide accelerators. It means that applications are given more freedom to control how accelerators work, at the cost of having to set up a lot of their own mechanics for activation.

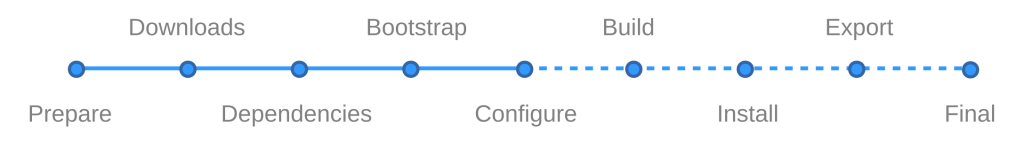

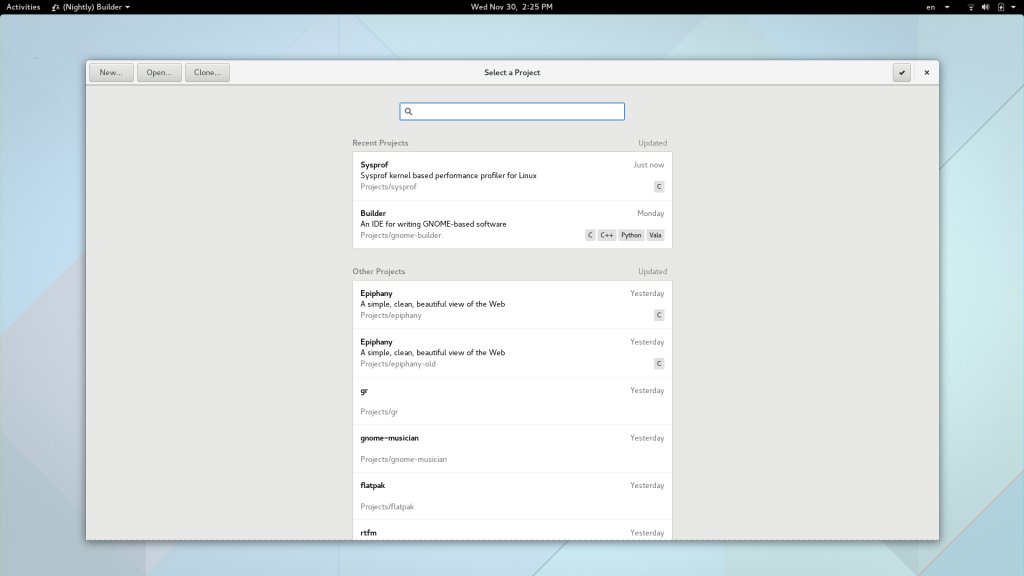

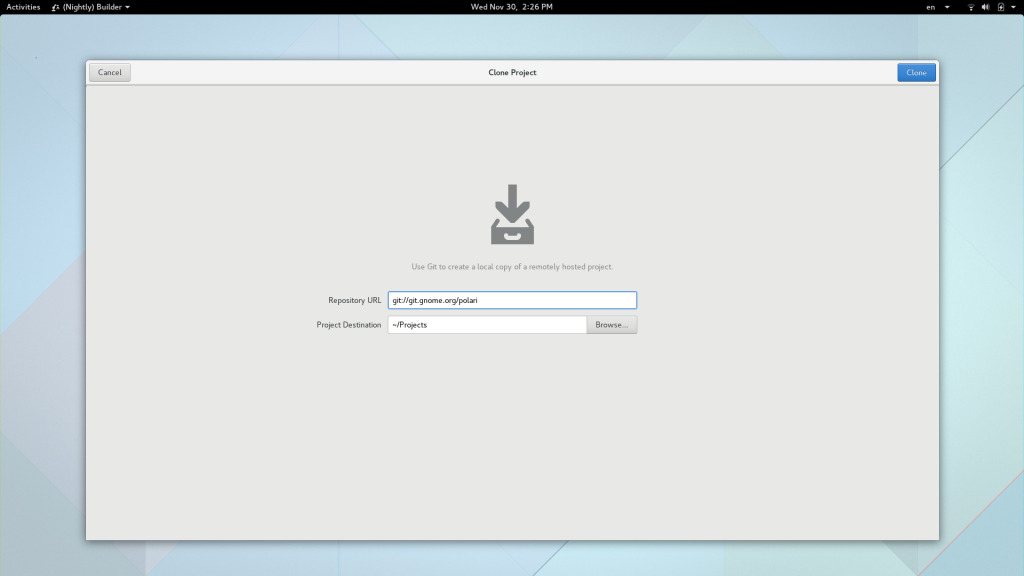

What do we need in Builder?

Builder certainly lies on the “more complex” side of things in terms of what is needed from the toolkit for accelerators. Let’s go over a few of those necessities to help create a wishlist for whatever code gets written.

VIM and Emacs Keybindings

These will never be 100% perfect, but we need reasonable implementations that allow Emacs and VIM users to not scream at their computer while trying to switch to a new tool. That means we need to hit the 80% really well, even if that last 20% is diminishing returns. Today, we’ve found a way to mostly do this using G_SIGNAL_ACTION and a lot of custom GTK+ CSS. The con mentioned above, that the -gtk-key-bindings CSS property has to know about all binding sets that need to be applied from the selector, makes it unrealistic for us to keep pushing this further.

Custom Accelerators

We want the user to be able to start from a basic “key theme” such as Gedit, VIM, or Emacs and apply their own overrides. This means that our keybinding registration cannot be static; it has to be flexible enough to account for changes while the application is running. We also need to know when there are collisions with other accelerators so that we can let the user know that what they are doing may have side-effects.
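To make the collision requirement concrete, here is a toy sketch in plain C. It is not Builder code and does not use GTK+; Keymap, keymap_bind() and the action names are all made up for illustration. A binding can be replaced at runtime, and the caller is told which action was displaced so it can warn the user.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

#define MAX_BINDINGS 64

typedef struct {
  const char *accel;   /* e.g. "<control>s" */
  const char *action;  /* e.g. "editor.save" */
} Binding;

typedef struct {
  Binding items[MAX_BINDINGS];
  size_t  len;
} Keymap;

typedef enum { BIND_OK, BIND_REPLACED, BIND_FULL } BindResult;

/* Return the action bound to accel, or NULL if nothing is bound. */
static const char *
keymap_lookup (const Keymap *km, const char *accel)
{
  assert (km != NULL && accel != NULL);
  for (size_t i = 0; i < km->len; i++)
    if (strcmp (km->items[i].accel, accel) == 0)
      return km->items[i].action;
  return NULL;
}

/* Bind accel to action. On a collision the old binding is replaced and
 * the displaced action is reported through *displaced. */
static BindResult
keymap_bind (Keymap *km, const char *accel, const char *action,
             const char **displaced)
{
  assert (km != NULL && accel != NULL && action != NULL);
  if (displaced != NULL)
    *displaced = NULL;

  for (size_t i = 0; i < km->len; i++)
    {
      if (strcmp (km->items[i].accel, accel) == 0)
        {
          if (displaced != NULL)
            *displaced = km->items[i].action;
          km->items[i].action = action;
          return BIND_REPLACED;
        }
    }

  if (km->len == MAX_BINDINGS)
    return BIND_FULL;

  km->items[km->len].accel = accel;
  km->items[km->len].action = action;
  km->len++;
  return BIND_OK;
}
```

The sketch compares accelerator strings verbatim, so “<control>s” and “<ctrl>s” would not collide here. Real code would want to normalize first, for example by running both through gtk_accelerator_parse() and comparing the resulting key and modifier mask.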

GtkShortcutsWindow Integration

In Builder we do not account for the “key theme” in the shortcuts window. This can be confusing to users of Emacs and Vim mode as what they see may not actually activate the desired action. We would like a way to map the accelerators available (grouped and with proper sections) to be automatically reflected in our GtkShortcutsWindow.

Elevating Accelerator Visibility

The shortcuts window is nice, but making the accelerators available to the user while they are exploring the interface is far more beneficial (based on my experiences). So whatever we end up implementing needs to make it fairly trivial to display accelerators in the UI. Today, we do that manually by hard-coding these accelerators into the GtkBuilder UI files. That is less than ideal.

Window-wide Activation

If I’m focused on the source code editor, I may want to activate an accelerator from a panel that is visible. For example, <control><shift>F to activate the documentation search.

Using actions to do this today would require that all panel plugins register their actions on the top-level as well as keybindings using gtk_application_set_accels_for_action(). While this is acceptable for the underlying machinery, it’s almost certainly not the API we want to expose to plugin developers in Builder. It’s tedious and prone to breakage when multiple “key themes” are in play. So we need an abstraction layer here that can use the appropriate strategy for what the plugin has in mind and also allows for different accelerators based on the “key theme”.

Re-usability

There are other large applications in the GNOME ecosystem that I care about. GIMP is one of those projects that has a hard time moving to GTK+ 3.x for a few reasons, some of which are out of our control upstream. The sheer size of the application makes it difficult. Graphical tools are often full of custom widgetry, which necessarily dives into implementation details. Another reason is how important accelerators are to an immersive, creativity-based application. I would like whatever we create to be useful to other projects (after a few API iterations). GIMP’s GTK+ 3 port is one obvious possibility.