I had the pleasure to be invited to the 2014 edition of the OpenSuSE Conference in Dubrovnik, Croatia. That event was flying under my radar for a long time and I am glad that I finally found out about it.

The first thing that impressed me was Dubrovik. A lovely city with a walled old town. Even a (rather high) watch tower is still there. The city manages to create an inspiring atmosphere despite all the crowds moving through the narrow streets. It’s clean and controlled, yet busy and wild. There are so many small cafés, pubs, and restaurants, so many walls and corners, and so many friendly people. It’s an amazing place for an amazing conference.

The conference itself featured three tracks, which is quite busy already. But in addition, an unconference was held as a fourth track. The talks were varying in topic, from community management, to MySQL deployment, and of course, GNOME. I presented the latest and greatest GNOME 3.12. Despite the many tracks, the hallway track was the most interesting one. I didn’t know too many faces and as it’s a GNU/Linux distribution conference which I have never attended before, many of the people I met had an interesting background which I was not familiar with. It was fun meeting new people who do exciting things. I hope to be able to stay in touch with many of them.

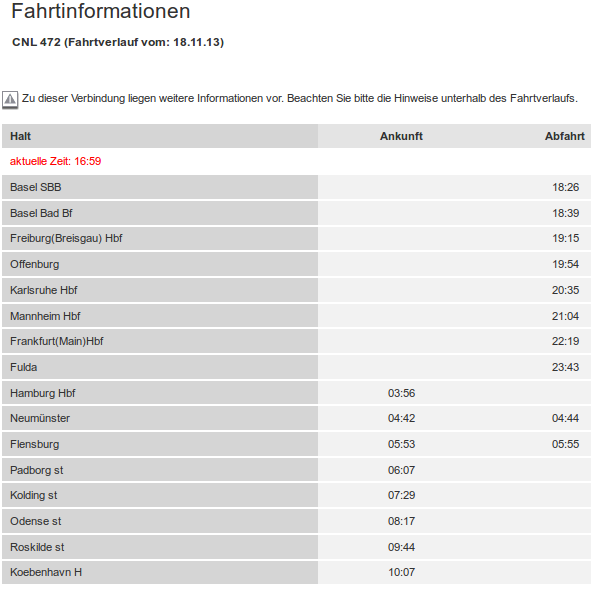

The conference was opened by the OpenSuSE Board. I actually don’t really know how OpenSuSE is governed and if there is any legal entity behind it. But the Board seems to be somehow elected by the community and was to announce a few changes to OpenSuSE. The title of the conference was “The Strength to Change” which is indeed inviting to announce radical changes. For better or worse, both the number and severity of the changes announced were limited. First and foremost, handling marketing materials is about to change. A new budget was put in place to allow for new materials to be generated to have a much bigger presence in the world. Also, the materials were created by SuSE’s designers on staff. So they are considered to be rather high quality. To get more contributors, they introduce formalised sponsorship program for people to attend conferences to present OpenSuSE. I don’t know what the difference to their Travel Support Program is, though. They will also reimburse for locally produced marketing materials which cannot be shipped around the world to encourage more people to spread the word about OpenSuSE. A new process will be put in place which will enable local contributors to produce materials up to 200 USD from a budget of 2000 USD per quarter. Something that will change, but not just yet, is the development and release model. Andrew Wafaa said that OpenSuSE was a victim of its own success. He mentioned the number of 7500 packages which should probably indicate that it is a lot for them to handle. The current release cycle of 8 months is to be discussed. There is a strong question of whether something new shall be tried. Maybe annual releases, or even longer to have more time for polish. Or maybe not do regular releases at all, like rolling releases or just take as long as it takes. A decision is expected after the next release which will happen as normal at the end of this year. There was an agreement that OpenSuSE wants to be easy to contribute to. The purpose of this conference is to grow the participants’ knowledge and connections in and about the FLOSS environment.

The next talk was Protect your MySQL Server by Georgi Kodinov. Being with MySQL since 2006 he talked about the security of MySQL in OpenSuSE. The first point he made was how the post-installation situation is on OpenSuSE 13.1. It ships version 5.6.12 which is not too bad because it is only 5 updates behind of what upstream released. Other distros are much further away from that, he said. Version 5.6 introduced cool security related features like expiring passwords, password strength policies, or SHA256 support. He urged the audience to stop using passwords on the command line and look into the 5.6 documentation instead. He didn’t make it any more concrete, though, but mentioned “login paths” later. He also liked that the server was not turned on by default which encourages you to use your self-made configuration instead of a default one. He also liked the fact that there is no pre-packaged database as that does not configure users that are not very well protected. Finally, he pointed out that he is pleased to see that no remote access is configured in the default configuration. However, he did not like that OpenSuSE does not ship the latest version. The newest upstream version 5.6.15 not only fixes around 25 security problems but also adds advanced AES functionalities such as keys being bigger than 128 bits. He also disliked that a mysql_secure_installation script is not run after installation. That script would put random passwords to the root account, would disallow anonymous access, and would do away with empty default passwords. Another regret he had was that mysql_config_editor is not packaged. That tool would help to get rid of passwords in scripts using MySQL by storing credentials in encrypted files. That way you would have to protect only one file, not a lot of scripts. For some reason OpenSuSE activates the “federated plugin” which is disabled upstream.

Another weird plugin is the archive plugin which, he said, is not needed. In fact, it is not even available so that the starting server throws errors… Also, authentication plugins which should only be used for testing are enabled by default which can be a problem as it could allow someone to log in as any user. After he explained how this was a threat, the actual attack seems to be a bit esoteric. Anyway, he concluded that you get a development installation when you install MySQL in OpenSuSE, rather than an installation suited for production use.

He went on to refer about how to harden it after installation. He proposed to run mysql_secure_installation as it wouldn’t cause any harm even if run multiple times. He also recommended to make it listen on specific interfaces only, instead of all interfaces which is does by default. He also wants you to generate SSL keys and certificates to allow for encrypted communication over the network.

Even more security can be achieved when turning off TCP access altogether, so you should do it if the environment allows it. If you do use TCP, he recommended to use SSL even if there is no PKI. An interesting advice was to use external authentication such as PAM or LDAP. He didn’t go into details how to actually do it, though. The most urgent tip he gave was to set secure_file_priv to a certain directory as it will restrict the paths MySQL can write to.

As for new changes that come with MySQL 5.7, which is the current development version accumulating changes over 18 months of development, he mentioned the option to log to syslog. Interestingly,

a --ssl option on the client is basically a no-op (sic!) but will actually enforce SSL in the upcoming version. The new version also adds more crypto functions such as RANDOM_BYTES() which interface with the SSL libraries. He concluded his talk with a quote: “Security is like plastic surgery. the more you invest, the prettier it gets.”.

Michael Meeks talked next on the history of the Document Foundation. He explained how it used to be in the StarOffice days. Apparently, they were very process driven and believed that the more processes with even more steps help the quality of the software they produced. He didn’t really share that view. The mind set was, he said, that people would go into a shop and buy a box with the software. He sees that behaviour declining steeply. So then hackers came and branched StarOffice into OpenOffice which had a much shorter release cycle than the original product and incorporated fixes and features of the future version. Everyone shipped that instead of the original thing. The 18 months of the original product were a bit of a long thing in the free software world, he said. He quoted someone saying “StarDivision a problem for every solution.”

He went on to rant about Contributor License Agreements and showed a graph of Fedora contributions which spiked off when they dropped the requirement of a CLA. The graph was impressive but really showed the number of active accounts in an unspecified system. He claimed that by now they have around the same magnitude of contributions as the kernel does and with set a new record with 3000 commits in February 2014. The dominating body of contributors is volunteers which is quite different when compared to the kernel. He talked about various aspects of the Document Foundation like the governance or the fact that they want to make it as easy to contribute to the project as possible.

The next talk was given on bcache by Oliver Neukum. Bcache is a disk cache which is probably primarily used to cache rotational disks with SSDs. He first talked about the principles of caching, like write-back, write-through, and write-around. That is, the cache is responsible for writing to the backing store, the cache places the data to be written in its buffer, or write to the backing storage, but not the cache, respectively. Subsequently, he explained how to actually use bcache. A demo given later revealed that it’s not fool proof and that you do need to get your commands straight in order to make it work properly. As to when to actually use Bcache, he explained that SSDs are cool as they are fast, but they are small and expensive. Fast, as he continued, can either mean throughput or latency. SSDs are good with regards to latency, but not necessarily with throughput. Other, probably similar options to Bcache are dm-cache, but it does not support safe writes. I guess that you cannot use it if you have the requirement of a write-through or write-around scenario. A different alternative is EnhanceIO, written originally by Facebook, which keeps hash structure of the data to be cached in RAM. Bcache, on the other hand, stores a b-tree on the SSD instead of in the RAM. It works on block devices, so anything goes. Tape drives, RAIDs, … It places a special superblock to indicate the partition is a bcache partition. A second block is created to indicate what the backing store is. Currently, the kernel does not auto detect these caches, hence making it work with the root filesystem is a bit tricky. He did a proper evaluation of the effects of the cache. So his statements were well founded which I liked a lot.

It was announced that the next year’s conference, oSC15, will be in The Hague, Netherlands. The city we had our GUADEC in, once. If you have some time in spring, probably in April, consider to go.