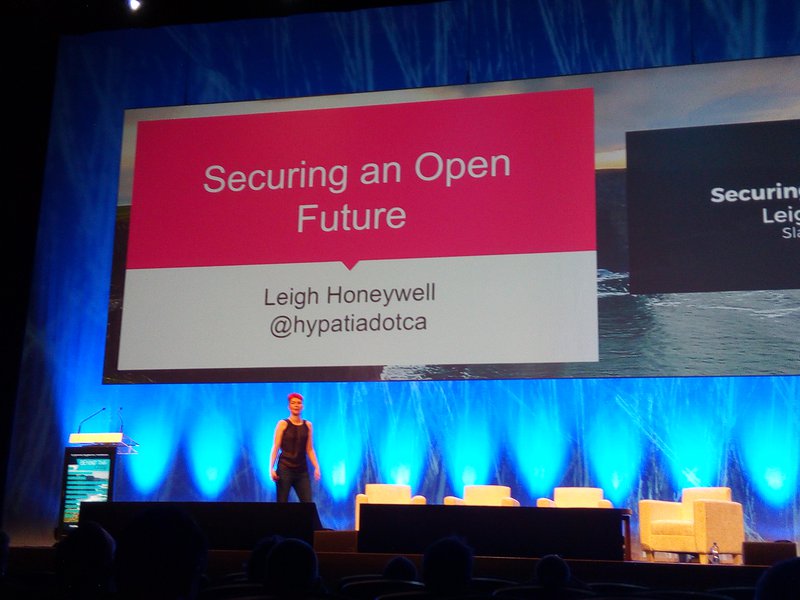

This year again, I attended the Chaos Communication Congress. It’s a fabulous event. It has become much more popular than a couple of years ago. In fact, it’s so popular that the tickets (probably ~12000, certainly over 9000) sold out a week or so after the sales opened. It’s gotten huge.

This year was different from the years before. Not only were you able to use your educational leave for visiting the CCCongress, but I was also giving a talk. Together with my colleague Christian Forler, I presented on Searchable Encryption. We had the first slot on the last day. I think that’s pretty much the worst slot in the schedule you could get 😉 Not only because the people are in Zombie mode, but also because you have received all those viruses and bacteria yourself. I was lucky enough, but my colleague was indeed sick on day 4. It’s a common thing to be sick after the CCCongress :-/ Anyway, we have hopefully entertained the crowd with what I consider easy slides, compared to the usual™ Crypto talk. We used a lot of imagery and tried to allude to funny stuff. I hope people enjoyed it. If you have seen it, don’t forget to leave feedback! It was hard to decide on the appropriate technical level for the almost 1800 people. The feedback we’ve received so far is mixed, so I guess we’ve hit a good spot. The CCCongress was amazingly organised for speakers. They really did care for us and made sure everything was right. So everything was perfect except for pdfpc which crashed whenever it was meant to display a certain slide… I used Evince then and it worked…

The days at the CCCongress were intense as you might be able to tell from the Fahrplan. It generally started at about 12:00 and ended at about 01:00. And that’s only the talks. You can’t avoid bumping into VIPs (very interesting people) and thus spend time in the hallway. And then you have these amazing parties. This year, they had motor-homes and lasers in the dance hall (last year it was a water cannon…). Very crazy atmosphere. It’s highly recommended to spend a night there.

Anyway, day 1 started for me with the Keynote by Fatuma Musa Afrah. The speaker stretched her time a little, I felt. At the beginning I couldn’t really grasp what her topic was or what she wanted to tell us. She repeatedly told us that we had to “kill the time together” which killed my sympathy to some extent. The conference’s motto was Gated Communities. She encouraged us to find ways to open these gates by educating people and helping them. She said that we have to respect each other irrespective of skin colour or social status. It was only later that she revealed that she is a refugee who came to Germany, although she told us that the word is “Newcomers”, not “refugees”. In fact, she grew up in Kenya where she herself was a newcomer. She fled to Kenya, so she fled twice. She told us stories about her arriving and living in Germany. I presume she is breaking open the gates which separate the communities she’s living in, but that’s speculation. In a sense she was connecting her refugee community with our hacker community. So the keynote was interesting from that perspective.

Joanna Rutkowska then talked about trustworthy laptops. The basic idea is to have no state on the laptop itself, i.e. no place where malware could be injected. The state should instead be kept on a personal storage medium, like an SD card or a pen drive. She said that laptops are inherently not trustworthy. Trust, she said, can be broken up into Trusted, Secure, and Trustworthy. Secure means resistant to attacks. Trusted is something we, as the security community, do not want to have, like a Trusted Third Party. Trustworthy, she said, is something different again: the Intel Management Engine, for example, might be resistant to attacks, yet it is not acting in the interest of the user, so it is not trustworthy. Application level security is meaningless, she said, when we cannot trust the Operating System, because it is the trusted part. If it is compromised then every other effort is useless. Her project, Qubes OS, attempts to reduce the Trusted Computing Base. What the Operating System is to the application, the hardware is to the Operating System. The hardware, she said, has been assumed to be trusted. A single malicious peripheral, like a malicious wifi module, can compromise the whole personal computer, the whole digital life, she said. She was referring to problems with Intel x86 platforms. Present Intel processors integrate everything on the main chip. The motherboard has been made more or less only a holder for the CPU and the memory. The construction of those big chips is completely opaque. We have no control over what is inside. And we cannot look inside, even if we wanted to. The firmware is being loaded during boot from a discrete element on the mainboard. We cannot, however, verify what firmware really is on the chip. One question is how to enforce read-only-ness of the system or how to upload your own firmware. For many years, she and others believed that TPM, TXT, or UEFI secure boot could solve that problem. But all of them have been shown to fail horribly, she said.
Unfortunately, she didn’t mention how so. So as of today, there is no such thing as a secure boot. Inside the processor is a management engine which is special, because it is the perfect entry point for backdooring and zombification of personal computing. By zombification, she means that the involvement of the Apps (vs. OS vs. Hardware) is decreasing heavily, which makes the hardware have much more of a say. She said that Intel wants to make the Hardware fully control your computing by having much more logic in the management engine. The ME is, in a sense, a gated community, because you cannot inspect it, tinker with it, or otherwise get in touch with it at all. She said that the war is lost on x86, even if we didn’t have the management engine. Again, she didn’t say why. Her proposal is to move all those moving firmware parts out to a trusted storage. It was an interesting perspective on what I think is a “simple” Free Software problem. Because we allow proprietary software, we now have the problem of not being able to see what is loaded into the hardware. With Free Software we’d still have backdoors in hardware, but assuming that most functionality is encoded in firmware, we could see and modify the existing firmware and build, run, and share our “better” firmware.

Ilja van Sprundel talked about Windows driver security or rather the drivers’ attack surface. I’m not necessarily interested in Windows per se, but getting some lower level knowledge sounded intriguing. He gave a more high level overview of what to do and what not to do when doing driver development for Windows. The details, he said, matter. For example whether the IO Manager probes a buffer in *that* instance. The Windows kernel is made of several managers, he said. The Windows Driver Model (WDM) is the standard model for how drivers are written. The IO Manager proxies requests from user space to WDM drivers. It may or may not validate arguments. Another central piece in the architecture are IO Request Packets (IRPs). They are being delivered from the IO Manager to the driver and contain all the necessary information for the operation in question. He went through the architecture really fast and it was hard for a kernel newbie like me to follow all the concepts he mentioned. Interestingly though, the IO Manager seems to also care about transferring the correct amount of memory from userspace to kernel space (e.g. it makes sure data does not overflow) if you ask it to by using METHOD_BUFFERED. But, as he said, most of the drivers use METHOD_NEITHER which does not check anything at all and is the endless source of driver bugs. It seems as if KMDF is an alternative framework which makes it harder to have bugs. However, you seem to need to understand the old framework in order to use that new one properly. He then went on to talk about the actual attack surface of drivers. The bugs are either privilege escalation, denial of service, or information leak. He said that you could avoid the problem of integer overflow by using the intsafe library. But you have to use its functions properly! Most importantly, you need to check their return value and then actually use the values which the functions have made safe.
During creation of a device, a driver can call either IoCreateDeviceSecure with an SDDL string or use an INF file to ACL the device. That is, however, done either rarely or wrongly, he said. You need to think about who needs to have access to your device. When you work with the IO Manager, you need to check whether Irp->MdlAddress is NULL which can happen, he said, if it’s a zero sized buffer. Similarly, when using the safer METHOD_BUFFERED mentioned earlier, Irp->AssociatedIrp.SystemBuffer can also be NULL. So avoid having that false sense of security when using that safe API. Another area of bugs is the cancellation of IRPs. The userland can cancel requests which apparently is not handled gracefully by drivers and leads to deadlocks, memory leaks, race conditions, double frees, and other classes of bugs. When dealing with data from userland, you are supposed to “probe” the memory which is basically checking whether the pointers are valid and in the expected range. If you don’t do that, it’ll lead to you writing to arbitrary kernel memory. If you do validate the data from userspace, make sure you don’t fetch it again from user space assuming that it hasn’t changed. There might be a race between your check and your usage (TOCTOU). So better capture, validate, and use the data, in that order. The same applies when using MDLs. That, however, is more tricky, because you have a double mapping and you are using a kernel pointer. So it is very subtle. When you do memory allocation you can either use ExAllocatePool or ExAllocatePoolWithQuota. The latter throws an exception instead of returning NULL. Your exception or NULL pointer handling needs to be double checked, he said. It was a very technical talk on Windows which was way out of my comfort zone. I only understood a tiny fraction of what he was presenting. But I liked it for the new insight on Windows drivers and for showing that the same old classes of bugs have not died yet.
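To make the “capture, validate, use” advice a bit more tangible, here is a minimal sketch in Python. It is not Windows driver code: a `bytearray` stands in for user memory that another thread may change at any time, and `MAX_LEN`, `handle_request_racy`, `handle_request_captured`, and `attacker` are names I made up for illustration.

```python
MAX_LEN = 16  # hypothetical upper bound the "driver" is willing to copy


def handle_request_racy(user_buf, flip=None):
    """Validate the length, then fetch it again for use (double fetch)."""
    if user_buf[0] > MAX_LEN:          # first fetch: the check
        raise ValueError("length too large")
    if flip is not None:
        flip(user_buf)                 # stands in for a racing user thread
    length = user_buf[0]               # second fetch: the use
    return bytes(user_buf[1:1 + length])


def handle_request_captured(user_buf, flip=None):
    """Capture once into private memory, then validate and use the copy."""
    captured = bytes(user_buf)         # single fetch into an immutable copy
    if flip is not None:
        flip(user_buf)                 # later changes no longer matter
    length = captured[0]
    if length > MAX_LEN:
        raise ValueError("length too large")
    return captured[1:1 + length]


def attacker(buf):
    """Change the length field after the check has passed."""
    buf[0] = 200


# First byte is the claimed length, the rest is payload.
request = bytearray([4]) + bytearray(b"A" * 255)
racy = handle_request_racy(bytearray(request), attacker)
safe = handle_request_captured(bytearray(request), attacker)
print(len(racy), len(safe))
```

The racy variant hands back far more than the validated four bytes, because the length it uses is not the length it checked. The captured variant only ever looks at its own copy.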

High up on my list of awaited talks was the talk on train systems by the SCADA strangelove people. Railways, he said, are the biggest system built by mankind. Its main components are signals and switches. Old switches are operated manually by pure force. Modern switches are interlocked with signals such that the signals display forbidden entry when switches are set in certain positions. On tracks, he said, signals are transmitted over the actual track by supplying them with AC or DC. The locomotive picks up the signals and supplies various systems with them. The Eurostar, they said, has about seven security systems on board, among them a “RPS”, a Reactor Protection System which alludes to nuclear trains… They said that lately the “Bahn Automatisierungssystem (SIBAS)” has been updated to use much more modern and less proprietary soft- and hardware such as VxWorks and x86 with ELF binaries as well as XML over HTTP or SS7. In the threat model they identified, they see several attack vectors. Among them is making someone plug a malicious USB device into controlling machines in some operation center. He showed pictures from supposedly real operation centers. The physical security, he said, is terrible, with close to no access control. In one photograph, a screenshot from a documentary aired on TV, credentials were stuck to the screen… Even if the security is quite good, like good physical security and formally proven programs, it’s still humans who write the software, he said, so there will be bugs to be exploited. For example, he showed screenshots of when he typed “railway” into Shodan and the result included a good number of railway stations. Another vector is GSM-R. If you jam the train’s GSM-R connection, the train will simply stop. Also, you might be able to inject SIM toolkit malware. Via that vector, you might make the modem identify as, e.g. a keyboard and then penetrate further into the systems.
Overall an entertaining talk, but the claims were a bit over the top. So no real train hacking just yet.

The talk on memory corruption by Mathias Payer started off by saying that software is unsafe and insecure. Low level languages trade type safety and memory safety for performance. A large set of legacy applications, he said, are prone to memory vulnerabilities. There are, he continued, too many bugs to find and fix manually. So we need a runtime system to ensure security. An invalid dereference or an out of bounds pointer is the core of memory unsafety problems. But according to the C language, he claimed, it’s only a violation if the invalid pointer is read, written to, or freed. So during runtime, there are tons and tons of dangling pointers which is perfectly fine. With such a vulnerability a control-flow attack could be executed. Several defenses exist: Data Execution Prevention prevents data from being executed as code. Address Space Layout Randomisation scrambles the memory locations of executable code which makes it harder to successfully exploit a vulnerability. Stack canaries are special values which are supposed to detect overflowing writes. Safe exception handlers ensure that exception code paths follow predefined patterns. DEP can only work together with ASLR, he said. If you broke ASLR, you could re-use existing code; as it turns out, people do break ASLR every now and then. Two new mechanisms are Stack Integrity and Control Flow Integrity. Stack Integrity enforces that a function returns to its actual caller by having a shadow stack. He didn’t mention how that actually works, though. I suppose you obtain a more secret stack address somewhere and switch the stack pointer before returning to check whether the return address is still correct. Control Flow Integrity builds a control flow graph during compilation and for every control flow change it checks at run time whether the target address is allowed. Apparently, many CFI implementations exist (eleven were shown). He said they’ve measured those and IFCC and Lockdown performed rather badly.
To show how all of the protection mechanisms fail, he presented printf-oriented programming. He said that printf was Turing complete and presented a domain specific language. They have built a brainfuck interpreter with snprintf calls. Another rather technical talk by a good speaker. I remember that I was already impressed last year when he presented on these new defense mechanisms.
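Since the talk left open how a shadow stack works, here is my guess spelled out as a toy model in Python. It is only a sketch of the general idea (keep a second copy of each return address somewhere the attacker cannot write, and compare on return) and not a description of any of the implementations shown in the talk. `ToyMachine` and `ShadowStackViolation` are made-up names.

```python
class ShadowStackViolation(Exception):
    """Raised when a return address no longer matches its shadow copy."""


class ToyMachine:
    def __init__(self):
        self.stack = []   # regular stack: attacker-writable in this model
        self.shadow = []  # shadow stack: assumed to be out of the attacker's reach

    def call(self, return_address):
        # On every call, the return address is stored twice.
        self.stack.append(return_address)
        self.shadow.append(return_address)

    def ret(self):
        # On every return, both copies are compared before jumping back.
        address = self.stack.pop()
        expected = self.shadow.pop()
        if address != expected:
            raise ShadowStackViolation(
                f"return to {address:#x}, expected {expected:#x}"
            )
        return address


machine = ToyMachine()
machine.call(0x401000)
machine.stack[-1] = 0xDEADBEEF  # simulated stack buffer overflow
try:
    machine.ret()
except ShadowStackViolation as error:
    print("blocked:", error)
```

The whole scheme stands or falls with the assumption in the second comment: if the attacker can also write to the shadow copy, nothing is gained.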

DJB and Tanja Lange started their “late night show” by bashing TLS as a “gigantic clusterfuck”. They were presenting on quantum computing and cryptography. They started by mentioning that the D-Wave quantum computer exists, but that it’s not useful, he said. It doesn’t do the basic things, and can only do limited computations. It can especially not perform Shor’s algorithm. So there’s no “Shor monster coming”. They recommended the Timeline of Quantum Computing as a good reference of the massive research effort going into quantum computing. If there was a general quantum computer, pretty much every public key scheme deployed on the Internet today would be broken. But symmetric schemes are also under attack due to Grover’s algorithm which speeds up brute force search quadratically. The solution could be physical crypto like using strong (physical) locks. But, he said, the assumptions of those systems are already broken. While Quantum Key Distribution is secure under certain assumptions, those assumptions are off, he said. Secure schemes that survive the quantum era were the topic of their talk. The first workshop on that topic happened in 2006 and efforts are still being made, e.g. with EU projects on the topic. The time it takes for a crypto scheme to gain significant traction has been long, so far. They gave ECC as an example. It was introduced in the 1980s, but it’s only now that it’s taking over the deployed crypto on the Internet. They gave recommendations on what to do to have connections that are secure “for at least the next hundred years”. These include: at least 256 bit keys for symmetric encryption; McEliece with binary Goppa codes (n=6960, k=5413, t=119) for public key encryption, an efficient implementation of such a code based scheme being McBits, she said; and hash based signatures with, e.g. XMSS or SPHINCS-256. All you need for the latter is a proper hash function.
The stuff they recommend for the next 100 years, like the McEliece system, are things from the distant past, she said. He said that Post Quantum Cryptography will be the standard in a couple of years from now so he urged the cryptographers in the audience to “get used to this stuff”.
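To illustrate the claim that hash based signatures need nothing but a proper hash function, here is a minimal sketch of Lamport’s one-time signature scheme in Python. To be clear, this is neither XMSS nor SPHINCS-256, just the classic textbook construction in the same family. The choice of SHA-256 and all function names are mine, and a key pair must only ever sign a single message.

```python
import hashlib
import secrets


def H(data):
    return hashlib.sha256(data).digest()


def keygen():
    # Secret key: two random values per bit of the message digest.
    sk = [[secrets.token_bytes(32), secrets.token_bytes(32)] for _ in range(256)]
    # Public key: the hashes of all secret values.
    pk = [[H(zero), H(one)] for zero, one in sk]
    return sk, pk


def message_bits(message):
    digest = H(message)
    return [(digest[i // 8] >> (7 - i % 8)) & 1 for i in range(256)]


def sign(sk, message):
    # Reveal one secret value per digest bit. This is why the key is one-time.
    return [sk[i][bit] for i, bit in enumerate(message_bits(message))]


def verify(pk, message, signature):
    bits = message_bits(message)
    if len(signature) != 256:
        return False
    return all(H(signature[i]) == pk[i][bit] for i, bit in enumerate(bits))


sk, pk = keygen()
signature = sign(sk, b"see you at the next Congress")
print(verify(pk, b"see you at the next Congress", signature))
print(verify(pk, b"see you at the next Kongress", signature))
```

Forging a signature would require finding preimages of the published hashes, which is exactly the “proper hash function” assumption. Signing a second message with the same key reveals more secret values and breaks the scheme, which is the problem that the more elaborate schemes address.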

Next on my list was Markus’ talk on Landesverrat which is the incident of Netzpolitik.org being investigated for revealing secret documents. He reported on the history of the case and how it came about that they were suspected of revealing secret documents. He said that one of their beliefs is to publish their sources, even the secret ones. They want their work to be critically reviewed and they believe that it is only possible if the readers can inspect the sources. The documents which led to the criminal investigations were about finances of the introduction of the XKeyscore software. Then, the president of the “state security” filed a case against them because of revealing secret documents. They wanted to publish the investigation files, but they couldn’t see them, because they were considered to be more secret than the documents they had already published… From now on, he said, they are prepared for the police raiding their offices, which I suppose is good standard preparation. They were lucky, he said, because their case fell into the regular summer low of news which made the case become quite popular in the media. A few weeks earlier or later and they would have been much less popular due to the refugees or Greece. During the press coverage, they had a second battleground where they threw out a Russian television team who entered their offices without having called or otherwise introduced themselves… For the future, he wants to see changes in what is considered to be a state secret. He doesn’t want the government to decide what such a secret is. He also wants to have much more protection for whistle blowers. Freedom of press should hold not only for people who blog for their “occupation”, but also for hobbyists.

Vincent Haupert then talked about App-based TAN online banking methods. It’s a classic two factor method: Not only username and password, but also a TAN. These TAN methods have since evolved in various ways. He went on to explain the general online banking process: You log in with your credentials, you create a new wire transfer and are then asked to provide a TAN. While ChipTAN would solve many problems, he said, the banking industry seems to want their customers to be able to transfer money everywhere™. So you get to have two “Apps” on your mobile computer. The banking app and a TAN app. However, malware in “official” app stores is a reality, he said. The Google Play Store cannot protect against malware, as a colleague of his demonstrated during his bachelor thesis. This could also be seen with the “Brain Test” app which roots your device and then loads malware. Anyway, they hijacked the connection from the banking app to modify the recipient of the issued wire transfer and the TAN being pushed on the device. They looked at the apps and found that they “protected” their app with “Promon Shield”. That seems to be a strong obfuscation framework. Their attack involved tricking the root and hooks detection. For the root detection the app checks the file system for certain binaries. He could simply change the filenames and was good to go. For the hooks (Xposed) it was pretty much the same with the exception of a few filenames which needed more work. With these modifications they could also “hack” the newer version 1.0.7. Essentially the biggest part of the problem is that the two factors are on one device. If the attacker hijacks that one device then ,

The talk by Christian Schaffner on Quantum Cryptography was introducing the audience to quantum mechanics. He said that a qubit can be imagined as the direction of a polarised photon. If you make the direction of the photons either horizontal or vertical, you can imagine that as representing 0 or 1. He was showing an actual physical experiment with a laser pointer and polarisation filters showing how the red dot of the laser pointer is either filtered or very visible. He also showed how actually measuring the polarisation changes the state of the photons! So yet another filter made the point in the back brighter. That was a bit weird, but that’s quantum mechanics. He showed a quantum random number generator based on that technology. One important concept is the no-cloning theorem which states that you cannot make a perfect copy of a quantum bit. He also compared current and “post quantum” crypto systems against efficient classical attackers and efficient quantum attackers. AES, SHA, RSA (or discrete logs) will be broken by quantum attacks. Hash-based signatures, McEliece, and lattice-based cryptography he considered to be resistant against quantum based attacks. He also mentioned that Quantum Key Distribution systems will also be secure against an exhaustive attacker who applies brute force. QKD is based on the no-cloning theorem so an eavesdropper cannot see the same bits as the communicating parties do. Finally, he asked how you could prove that you have been at a certain location to avoid the pizza delivery problem (i.e. to be certain about the place of delivery).
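The “yet another filter makes the dot brighter” effect can be checked with a few lines of Python. This is a sketch under my own assumptions: the light leaves the laser horizontally polarised, the filters are ideal, and each filter passes a fraction of cos² of the angle between the light’s polarisation and the filter axis (Malus’s law), after which the light is polarised along that axis. The function name is made up.

```python
import math


def transmitted_fraction(filter_angles_deg, initial_angle_deg=0.0):
    """Fraction of linearly polarised light that passes a chain of ideal filters."""
    fraction = 1.0
    polarisation = initial_angle_deg
    for angle in filter_angles_deg:
        delta = math.radians(angle - polarisation)
        fraction *= math.cos(delta) ** 2  # Malus's law for this filter
        polarisation = angle              # passing the filter resets the polarisation
    return fraction


# Horizontal light onto a vertical filter: (almost exactly) nothing gets through.
crossed = transmitted_fraction([90])

# A diagonal filter in front of the vertical one: a quarter gets through.
with_middle = transmitted_fraction([45, 90])

print(f"{crossed:.3f} {with_middle:.3f}")
```

The extra filter acts as a measurement: it turns the horizontally polarised photons which pass it into diagonally polarised ones, and those have a fifty percent chance of passing the vertical filter.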

Fefe was talking on privileges. He said that software will be vulnerable. Various techniques should be applied such as simply fixing all the bugs (haha…) or making exploitation harder by applying ASLR or ROP protection. Another idea is to put the program in a straitjacket and withdraw privileges. That sounds a lot like containerisation. Firstly, you can drop your privileges from superuser down to the least privileges you need, then do privilege separation. Another technique is the admin confining the app in a jail instead of the app confining itself. Also, you can implement access control via a broker service by splitting up your process into, say, a left half which opens and reads files and a right half which processes data. When doing privilege separation, the idea is to split up the process into several separately running programs. Jailing is like firewall rules for syscalls which, he said, is impossible for complex programs. He gave Firefox as an example of a program for which it is impossible to write a rule set. The app containing itself is like a werewolf chaining itself to the wall before midnight, he said. You restrict yourself from opening files, creating sockets, or from attaching yourself as a debugger to other processes. The broker service is probably like a reference monitor. He went on showing how old-school privilege dropping works. You could do it as easily as seteuid(getuid()), but that’s not enough, because there is the saved UID, so you need to call setresuid and not forget to check the return code. Because the call can fail if, for example, the target UID had already been running too many processes for its quota. He said that you should fail the build if your target platform does not provide setresuid. However, dropping privileges is more than setting your UID. It’s also about freeing resources you don’t necessarily need.
Common approaches to jailing your process are to have a fake filesystem with only the necessary files, so your process cannot ever access anything that it shouldn’t. On Linux, that would probably be chroot. However, you can escape using fchdir. Also, if you mount /proc into the chroot, information about the host is exposed. So you need to do more work than calling chroot. The BSDs, he said, have Securelevel which is a kernel mode that can only be increased and which withdraws certain privileges. They also have jails which is a chroot on steroids, he said. It leaks some information due to the PIDs, though, he said.
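The old-school privilege dropping described above can be sketched in Python, whose `os` module wraps the same system calls on Linux. This is my own illustration and not code from the talk. `drop_privileges` is a made-up name, and where C code has to check return codes, Python raises `OSError` instead. Since there is no build step, the “fail the build” advice becomes a check at start-up.

```python
import os

# Refuse to run on platforms without setresuid instead of falling back
# to something weaker such as seteuid.
if not hasattr(os, "setresuid"):
    raise SystemExit("this platform lacks setresuid; refusing to run")


def drop_privileges(uid, gid):
    """Permanently switch to uid/gid: real, effective, and saved IDs."""
    if os.geteuid() == 0:
        os.setgroups([])         # supplementary groups first; only root may do this
    os.setresgid(gid, gid, gid)  # group before user, while we are still allowed to
    os.setresuid(uid, uid, uid)  # raises OSError if the kernel refuses
    # Do not trust the calls alone: verify that nothing can be regained.
    if os.getresuid() != (uid, uid, uid) or os.getresgid() != (gid, gid, gid):
        raise RuntimeError("privileges were not dropped completely")


# Example: a set-uid style program giving up everything but the caller's identity.
drop_privileges(os.getuid(), os.getgid())
print(os.getresuid(), os.getresgid())
```

As noted in the talk, this only covers the IDs. File descriptors, mapped memory, and other resources acquired while still privileged need to be released separately.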

The next talk was on Shellphish, an automatic exploitation framework. This is really fascinating stuff. It’s been used for various Capture the Flag contests which are basically about hacking other teams’ software services. In fact, the presenters were coming from the UCSB which is hosting the famous iCtF. They went from solving security challenges to developing a system which solves security challenges. From a vulnerable binary, they (automatically) develop an exploit and a patched binary which is immune to the exploit, but preserves the functionality of the program. They automatically find vulnerabilities, generate patches, and test both the exploits and the patches. For the automated vulnerability component, they presented Angr. It has a symbolic execution engine looking for memory accesses outside allocated regions and for an unconstrained instruction pointer, i.e. a jump controlled by user input (JMP eax). They have written a paper for NDSS about “Augmenting Fuzzing Through Selective Symbolic Execution”. Angr is a Python library and they showed how to use it for identifying the overhyped Back to 28 vulnerability. Actually, there is too much state for a regular symbolic executor to find this problem. Angr does “veritesting”. He showed that his Angr script found the vulnerability after he had excluded, with a few lines of code, many paths of execution that don’t really generate new state. He didn’t show, though, what those lines of code were and how he determined that those states do not add any new information.

The next talk was given by the people behind Intelexit and was about convincing NSA agents to stop their work and serve democracy instead. They rented a van with big mottoes printed on it, like “Listen to your heart, not to private phone calls”. They also stuck the constitution on the “constitution protection office” which then got torn apart. Another action they did was to fly over the Dagger Complex and to release flyers about leaving the secret services. They want to have a foundation helping secret service agents to leave their job or to blow the whistle. They also want an anonymous call service which agents can call to talk about their job. I recommend browsing their photos.

Another artsy talk was on a cheap Facebook army. Actually it was on Instagram followers. The presenter is an artist himself and he’d buy Instagram followers for fellow artists “to make them all equal”. He dislikes the fact that society seems to measure the value or quality of art in followers or likes on social media.

Around the CCCongress were also other artsy installations like this one called “machine learning”:

It’s been a fabulous event. I really admire the people organising this event each and every year. Thank you so much and see you next year, hopefully.