To give the wider community a chance to see what happened during the GStreamer hackfest last weekend I put together this blog post is based on an summary written by Wim Taymans, so a big thanks to Wim for letting me reuse parts of his summary.)

So last weekend 21 GStreamer hackers got together at the Google office in Munich to spend the weekend hacking on their favourite GStreamer bits. At this point in time we didn’t have any major basic plumbing tasks that needed tackling so the time was spent hacking on a lot of different projects and using the opportunity to discuss design and challenges with each other.

We where 3 people attending from Red Hat and Fedora; Wim Taymans, Alberto Ruiz and myself.

With the Release of GStreamer 1.0 in September 2012, we drastically changed the way memory is handled in the multimedia pipeline and the large body of work is still in exploring, improving and porting elements to this new memory model. We’re also mostly working on improving the existing elements with comparatively little new infrastructure work.

We’re also seeing a lot of people from different companies that contribute significant amounts of code to the official GStreamer repositories. This has traditionally been a much more closed effort with various pieces of code living in multiple repositories, especially for the hardware acceleration bits. It is good to see that the 1.0 series brings all these efforts together again with more coordination and a more coherent story.

HW acceleration

One of the large ongoing tasks is to improve our support for hardware accelerated decoding, effects and display. With 1.0 we can finally get this done cleanly and efficiently in very many use cases.

Matthew Waters to flew in from Australia to move the gst-plugins-gl set of plugins to the core GStreamer plugins packages. He has been working on these plugins for a while now. Their goal is to use OpenGL to apply operations on the video, like rotation on a cube or applying a shader. With the 1.0 memory management it becomes possible to do this efficiently with a minimal amount of texture upload/downloads. More work is needed here, we can optimize things some more by delaying the work and running the shaders as part of the rendering operation.

Andoni Morales (Fluendo) has also been working on improving hardware acceleration on android. He used some of the new features of 1.0 to make the android codecs use zero-copy by implementing the texture-upload meta data on buffers. This allows the video sink to efficiently create a texture from the decoded data for display. Andoni also ported winks, a video capture source on Windows, to GStreamer 1.0.

Nicolas Dufresne (Collabora) has been working on adding a new set of decoders based on the mem2mem API in v4l2. Not many drivers provide this API yet but it is implemented in some Samsung Exynos SOCs. We would also like to support other m2m operations later, such as color conversion but for that we need to make some of our base classes support the required asynchronous behaviour of mem2mem. The memory management in our v4l2 elements has been gone through several iterations of improvements during the 1.0 cycle but it still is not entirely what it should be. We agreed on what we should do to fix this in the near future. We also briefly discussed the need for a new event that can be used to reclaim memory from a pipeline; many elements that use hardware buffers need to free those before they can negotiate a new format with the hardware so we need a way to make that possible.

Mathieu Bourron (Collabora) has been working on libva, the library for GPU based video decoding and encoding on Intel hardware, and spent his time at the Hackfest fixing up the SPU overlay element to enable hardware accelerated subpicture overlays in the video sink. Traditionally GStreamer would use the CPU to overlay the subpictures (of DVD, for example) on top of the video images. With new GL-based sinks, and hardware accelerated decoders this is very undesirable and can be done much more efficient as part of the final rendering. In 1.0 we have the infrastructure to delay this overlay operation by attaching extra metadata (with the subpicture) to the video images when the video sink knows how to overlay them. We have been doing this with subtitles in cluttersink and other sinks for a while now and soon we can also do this with subpictures.

Plugin Hacking

Arun Raghavan, GStreamer hacker and Pulse Audio maintainer, worked on disabling the audio and video filters in playbin when passthrough mode was selected. In passthrough mode, a video or audio sink can directly handle the encoded media (think a bluetooth headset that can handle mp3 directly or a hardware sink that takes encoded data). He expaned on that work in blog entry.

As a cool hack, Arun also made a source element to read from torrent files so you can watch a movie while you torrent it. He provides more information on that element in his blog, it is actually really cool.

Thiago Santos (Collabora) was continuing with his work to improve the DASH demuxer, reworking the buffering code to make it buffer less and smoother. Dash is one of the new formats (with HLS and MSS) to stream media over HTTP while adapting to bandwidth changes. On the server side, it makes media available in various bitrates while a client switches between bitrates depending on its measured network conditions. Andoni Morales also worked on a new dashsink element that implements the server side of the DASH

format.

Mathieu Duponchelle, a former GSoC student was trying to improve support for seeking in MPEG Transport Streams in order to use them in PiTiVi. Seeking in MPEG TS is not an easy thing because they are really optimized for streaming only. He got help from Thibault Saunier (Collabora), who was also hacking on PiTiVi and who was preparing a new release of gnonlin, GES and gst-python 1.2 (which he released on Sunday). Mathieu is one of the developers able to work fulltime on PiTiVi now thanks to the PiTiVi fundraiser, so be sure to contribute to that!

Jan Schmidt (Centricular), a long time GStreamer core hacker was working on debugging some DVB issues and also ended up taking part in a lot of the general design and troubleshooting discussions happening during the hackfest, helping other people move forward with their projects.

Long time GStreamer hacker Edward Hervey (Collabora) was planning to do a lot of DVB hacking but had to give up on that effort when it was clear that Google had signal isolated the office for security reasons, so there was no DVB signal in the Google office. Instead he worked on merging some pending DVB patches and implemented GAP support in the mpeg transport stream plugin. GAP support deals with streams that have long periods of no media (like missing audio for some time in DVD). It makes sure that downstream elements keeps processing the silence instead of waiting for more data.

Applications

Meg Ford, a GSOC student mentored by Sebastian Dröge (Centricular) was working on Gnome Sound Recorder and fixing up the last bugs, preparing it for a new release.

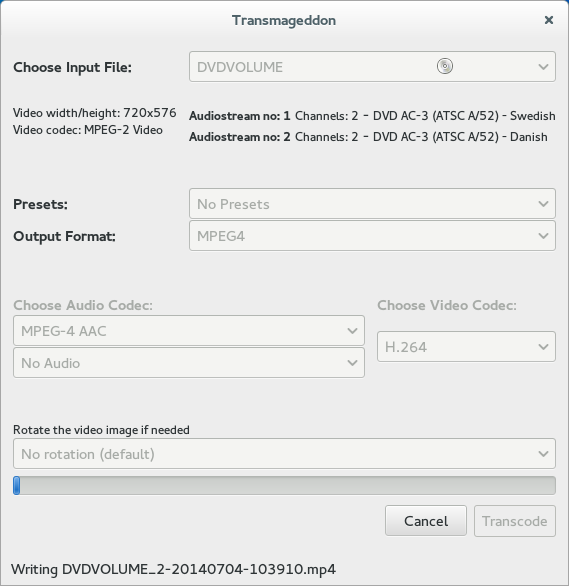

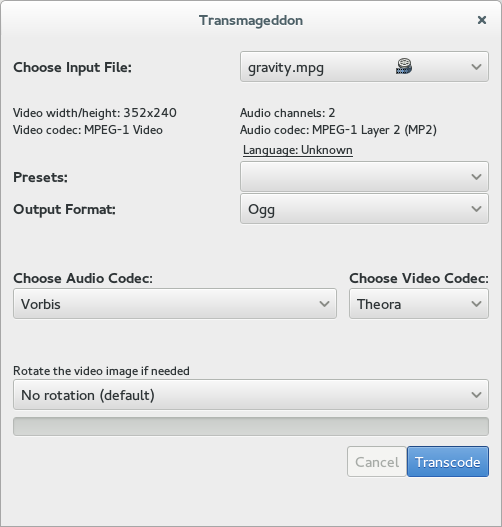

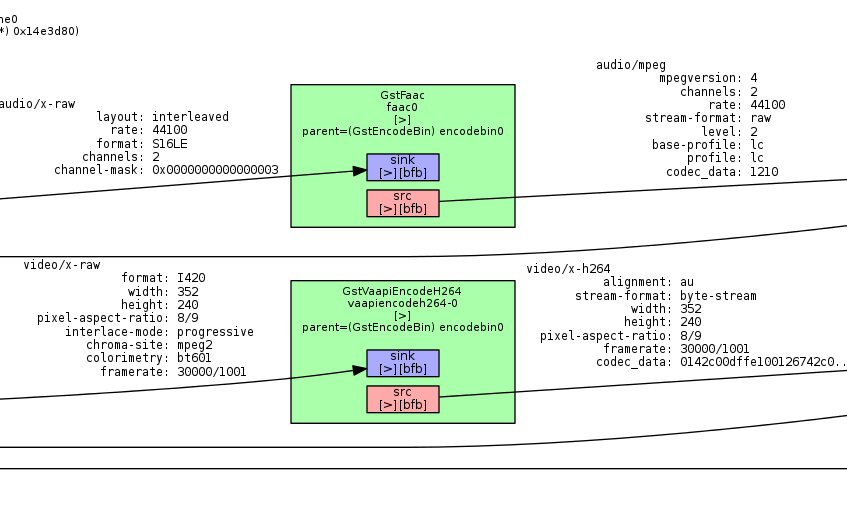

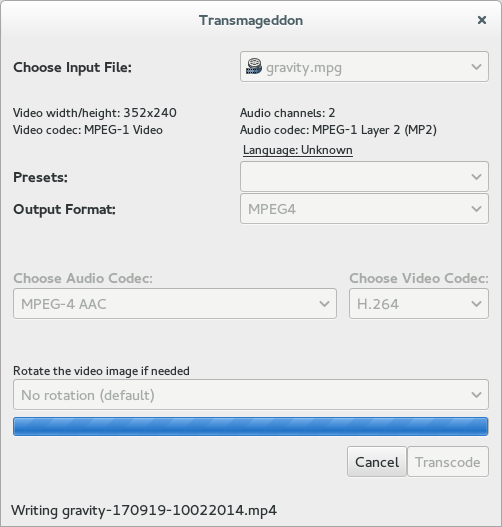

Myself, Christian Schaller (Red Hat) was on a bug fixing spree in Transmageddon (a transcoding application written in python and GStreamer) and managed to reduce the number of known bugs to only 1. Fixed that last bug once I got home, so now I just need to hammer at Transmageddon for a bit to make sure I caught all the corner case issues so I can do a major new release with new features such as handling files with multiple audio streams, handling DVD ripping, handling VP9 encoding, handling setting audio stream language information, reducing decoding overhead for streams that we are going to throw away and more. Also had help reviewing and cleaning up the Transmageddon code from Alberto Ruiz, freeing Transmageddon from some ugly code that had survived many library updates and rewrites.

Alessandro Decina(Spotify) kept working on his patches to update the Firefox GStreamer backend to GStreamer 1.0. We hope to deploy this work in Fedora in the not to distant future. As a hack for the hackfest he provided patches to implement audio and video capture.

Wim Taymans (Red Hat) was hacking on a new library that can parse and generate MIKEY messages (RFC 3830). He want to use this in the GStreamer RTSP server to negotiate SRTP (secure RTP) encryption parameters.

We had 2 people from the Swedish company AXIS, who provide network cameras that all run GStreamer and who contribute on a regular basis to the RTP and RTSP elements and libraries. Ognyan Tonchev was mostly writing some unit tests for RTSP and multicast handling in the RTSP server. Sebastian Rasmussen had been hacking on our watchdog element and the payloaders.

Infrastructure

Long time GStreamer hacker Stefan Sauer (Google) gave a demo of his idea for a tracing infrastructure in GStreamer. The idea is to place trace macros at strategic places that would send structured data to pluggable tracer modules. Some of the tracer modules could, for example measure CPU usage of a plugin or measure the latency. The idea would be to gradually replace our extensive (but unstructured) logging with this new trace infrastructure. This would allow us to do new interesting things, like send debug log to a remote machine or produce STF (Structured Trace Format) to analyse with standard tools. No immediate plans were made to merge this but there seems to be very little resistance to get this merged soon.

Core hacker Sebastian Dröge (Centricular) has been going over the current Stream selection ideas. One of the long outstanding issues is that of switching streams between different languages: you have a movie in different languages and you want to switch between them. To achieve low-latency old data should be kept around for the streams that are not currently selected and be quickly and sent to the audio device. The idea is a combination of events to select a stream and to have the demuxer seek back in the stream on

switches. No final conclusion or plan that can solve all requirements has been reached yet.

Also investigations have begun to make decodebin deal with renegotiations. For example, when a new stream is selected, we might need to use a different decoder for this stream but also when new input is received, decodebin should be able to reconfigure itself. The decodebin code is a complicated beast so any change to it should be done carefully.

GStreamer maintainer Tim-Philipp Müller (Centricular) spent his time merging the new device probing and monitoring API (written by Olivier Crête from Collabora) that had been sitting in bugzilla for a while now. The purpose is to be able to probe devices and their capabilities such as v4l2 and ALSA devices. It’s also possible to be notified when devices appear and disappear in the system. An implementation for pulseaudio devices and another for v4l2 devices using gudev has been committed as well. This reimplements a

feature that was in 0.10, but got cut from 1.0 due to us not being happy with the old design. One of the complications with that was the fact that we ran out of bits in one of our enums so we needed to find a good solution for that.

We briefly discussed how to implement the SKIP seek flag. This extra flag can be used when doing fast forward or reverse and instructs the decoders that it is allowed to throw away data to more efficiently perform the trick mode (at reduced accuracy). There was a prototype for AVI playback that I implemented once that we discussed a bit. We’ll see if someone takes up the task to finalize this work and implement SKIP mode in more demuxers.

I took some photos during the event to capture the spirit and put them on Google Plus for your viewing pleasure.

A big thank you to Google for hosting us and providing us with free lunch and free drinks through the weekend.