As those of you following the GStreamer development mailing list or the GStreamer Google Plus profile know, we have been having a GStreamer hackfest in Milan over the last few days. We have 17 people here, all hammering away at our laptops or discussing various technical challenges sitting at a nice place called the Milan Hub.

A lot of progress has been made during these days with some highlights including work on fixing the use of Gnonlin with GStreamer 1.0, which is a prerequisite for getting PiTiVi and Jokosher running with GStreamer 1.0. Jeff Fortin, Thibault Saunier, Nicolas Dufresne, Edward Hervey, Peteris Krishanis and Emanuele Aina has all been helping out with this in addition to fixing various other issues in PiTiVi and Jokosher.

Sebastian Dröge has put a lot of work during the hackfest into providing the basic building blocks for doing hardware codecs nicely in GStreamer, and Víctor Jáquez has been working on making VAAPI work well using these building blocks, with the plan among other things to make sure you have hardware accelerated decoding working with WebKit. In that regards Philippe Normand has spent the hackfest investigating and improving various bits of the GStreamer backend in Webkit, like improving the on-disk buffering method used. Also in terms of hardware codec support Edward Hervey also found a bit of time to work a little on the VDPAU plugins.

Speaking of web browsers Alessandro Decina has been working on porting Firefox to GStreamer 1.0, he has also been our local host making sure we have found places to eat lunch and dinner that where able to host our big group. So a big thank you to Alessandro for this.

Wim Taymans has been working on properly dealing with chroma keying in GStreamer, improving picture quality significantly in some cases, in addition to being constantly barraged with questions and discussions about various enhancements, bugs and other challenges.

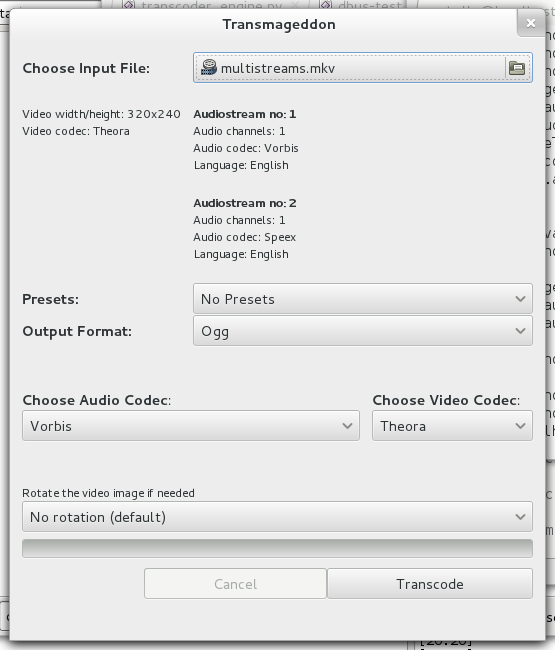

Edward Hervey has in addition to help out with GNonlin also been working on improvements in our DVB support and improving encodebin so that you can now request a named profile when requesting pads, the last item being a crucial piece in terms of allowing me to proceed with Transmageddons multistream support.

Stefan Sauer spent time on fixing various bugs in the GStreamer 1.0 port of Buzztard and a first stab at designing a tracing framework for GStreamer.

Arun Raghavan was working on various bugs related to Pulse Audio and GStreamer and also implemented a SBC RTP depayloader element for GStreamer.

Tim-Philipp Müller has been working on implementing a stream selection flag in order for GStreamer player to be able to follow any in-file hints about which streams to default to or to not default to for that matter.

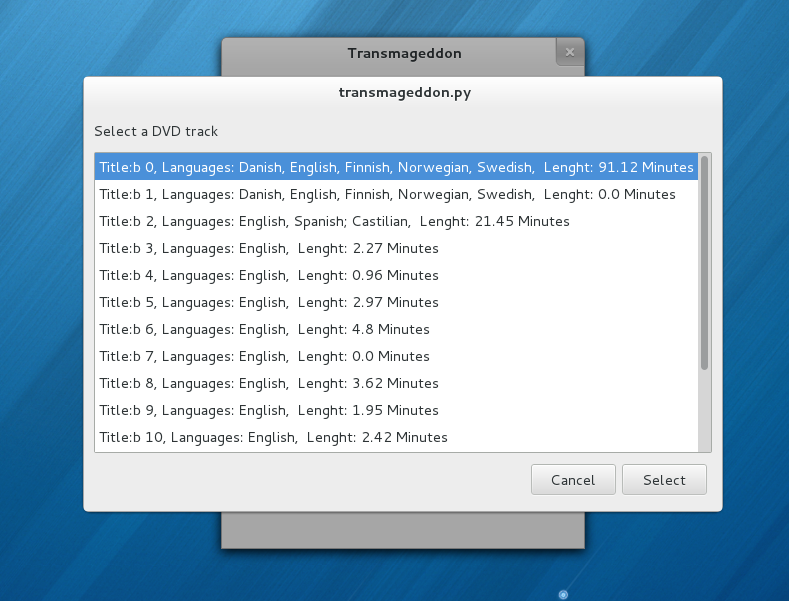

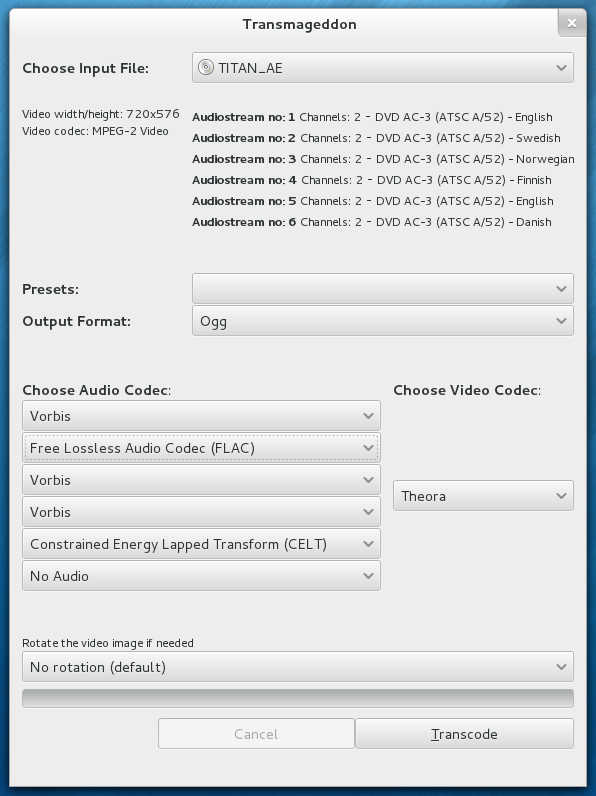

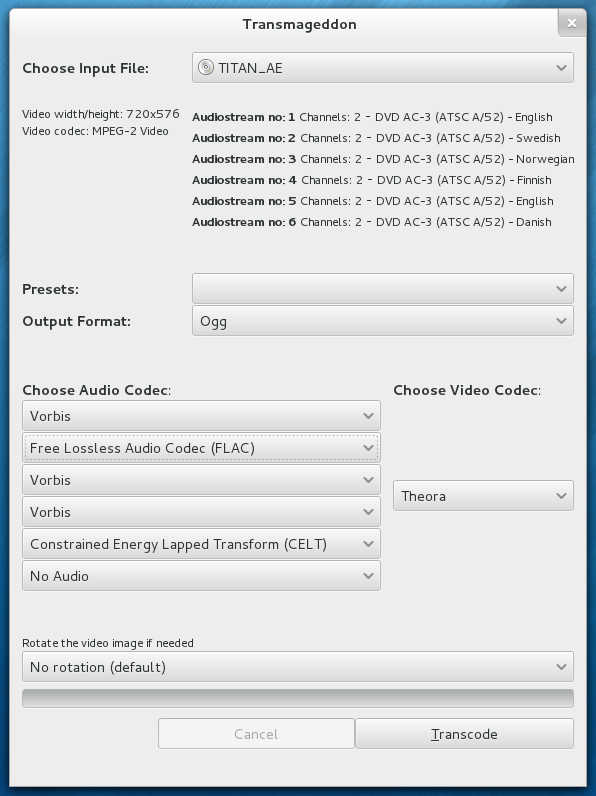

As for myself I been mostly working on Transmageddon trying to get the multistream and DVD support working. Thanks to some crucial bugfixes from Edward Hervey and Wim Taymans I was able to make good progress and I have ripped my first DVD with Transmageddon now. There is still a lot of work that needs doing, both in terms of presentation, features and general robustness, but I am very pleased by the progress made.

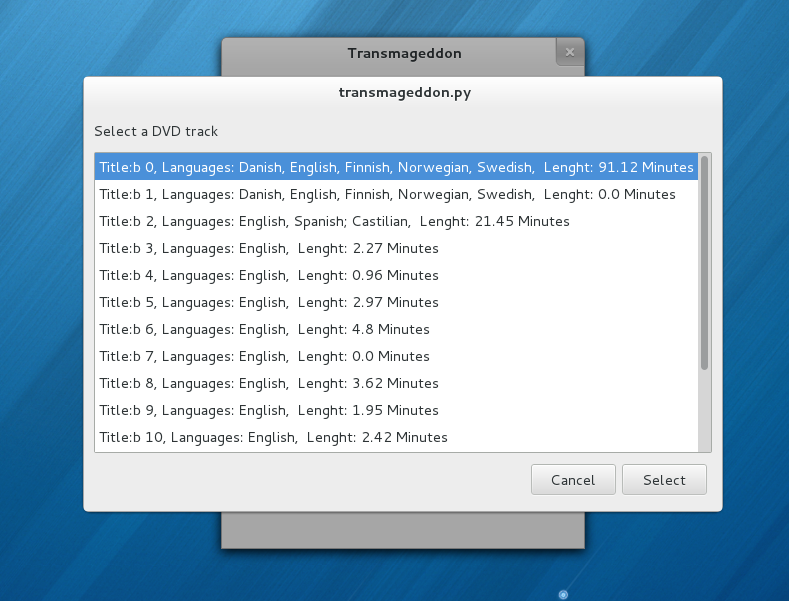

Title selection screen, needs a bit more polish, but getting there.

As you see above you can now choose to transcode to different codecs for each sound stream, or drop the streams you don’t care about. The main usecase for different codecs is to you a different codecs for surround sound as opposed to stereo or mono streams.

A big thank you to Collabora and Fluendo for sponsoring us with dinner during the hackfest.

Also a big thank you to Collabora, Fluendo, Google, Igalia, Red Hat and Spotify for letting their employees attend the hackfest.